The Serializable Isolation Level

Introduction

Much production T-SQL code is written with the implicit assumption that the underlying data will not change during execution. As we saw in the previous article in this series, this is an unsafe assumption because data and index entries can move around underneath us—even during the execution of a single statement.

Where the T-SQL practitioner is aware of the kinds of correctness and data integrity issues that can arise due to concurrent data modifications by other processes, the solution most commonly offered is to wrap the vulnerable statements in a transaction. It is not clear how the same sort of reasoning would be applied to the single-statement case, which is already wrapped in an autocommit transaction by default.

Leaving that aside for a second, the idea of protecting an important area of T-SQL code with a transaction seems to be based on a misunderstanding of the protections offered by the ACID transaction properties. The important element of that acronym for the present discussion is the Isolation property. The idea is that using a transaction automatically provides complete isolation from the effects of other concurrent activities.

The truth of the matter is that transactions below SERIALIZABLE only provide a degree of isolation, depending on the currently effective transaction isolation level. To understand what all this means for our everyday T-SQL coding practices, we will first take a detailed look at the serializable isolation level.

Serializable Isolation

Serializable is the most isolated of the standard transaction isolation levels. It is also the default isolation level specified by the SQL standard, though SQL Server (like most commercial database systems) differs from the standard in this respect. The default isolation level in SQL Server is read committed, a lower isolation level that we will explore later in the series.

The definition of the serializable isolation level in the SQL-92 standard contains the following text (emphasis mine):

A serializable execution is defined to be an execution of the operations of concurrently executing SQL-transactions that produces the same effect as some serial execution of those same SQL-transactions. A serial execution is one in which each SQL-transaction executes to completion before the next SQL-transaction begins.

There is an important distinction to be made here between truly serialized execution (where each transaction actually runs exclusively to completion before the next one starts) and serializable isolation, where transactions are only required to have the same effects as if they were executed serially (in some unspecified order).

To put it another way, a real database system is allowed to physically overlap the execution of serializable transactions in time (thereby increasing concurrency) so long as the effects of those transactions still correspond to some possible order of serial execution. In yet other words, serializable transactions are potentially serializable rather than being actually serialized.

Logically serializable

Leave aside all physical considerations (like locking) for a moment and think only about the logical processing of two concurrent serializable transactions.

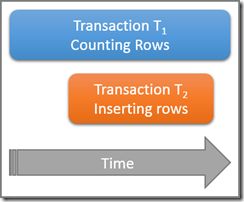

Consider a table that contains a large number of rows, five of which happen to satisfy some interesting query predicate. A serializable transaction T1 starts counting the number of rows in the table that match this predicate. Some time after T1 begins, but before it commits, a second serializable transaction T2 starts. Transaction T2 adds four new rows that also satisfy the query predicate to the table, and commits. The diagram below shows the time sequence of events:

The question is: How many rows should the query in serializable transaction T1 count?

Remember, we are thinking purely about the logical requirements here, so avoid thinking about which locks might be taken and so on.

The two transactions physically overlap in time, which is fine. Serializable isolation only requires that the results of these two transactions correspond to some possible serial execution. There are clearly two possibilities for a logical serial schedule of transactions T1 and T2:

Using the first possible serial schedule (T1 then T2) the T1 counting query would see five rows because the second transaction does not start until the first one completes. Using the second possible logical schedule, the T1 query would count nine rows because the four-row insert logically completed before the counting transaction began.

Both answers are logically correct under serializable isolation. In addition, no other answer is possible (so transaction T1 could not count seven rows, for example). Which of the two possible results is actually observed depends on precise timing and a number of implementation details specific to the database engine in use.

Note that we are not concluding that the transactions are actually somehow reordered in time. The physical execution is free to overlap as shown in the first diagram, so long as the database engine ensures the results reflect what would have happened had they executed in one of the two possible serial sequences.

Concurrency phenomena

In addition to logical serialization, the SQL standard also mentions that a transaction operating at the serializable isolation level must not experience certain concurrency phenomena. It must not read uncommitted data (no dirty reads) and once data has been read a repeat of the same operation must return exactly the same set of data (repeatable reads with no phantoms).

The standard makes a point of saying that those concurrency phenomena are excluded at the serializable isolation level as a direct consequence of requiring the transaction to be logically serializable. In other words, the serializability requirement is sufficient on its own to avoid the dirty read, non-repeatable read, and phantom concurrency phenomena. By contrast, avoiding the three concurrency phenomena alone is not sufficient to guarantee serializability, as we will see shortly.

Intuitively, serializable transactions avoid all concurrency-related phenomena because they are required to act as if they had executed in complete isolation. In that sense, the serializable transaction isolation level matches the common expectations of T-SQL practitioners quite closely.

Implementations

SQL Server happens to use a locking implementation of the serializable isolation level where physical locks are acquired and held to the end of the transaction (hence the deprecated table hint HOLDLOCK as a synonym of SERIALIZABLE).

This strategy is not quite enough to provide a technical guarantee of full serializability because new or changed data could appear in a range of rows previously processed by the transaction. This concurrency phenomenon is known as a phantom and can result in effects which could not have occurred in any serial schedule.

To ensure protection against the phantom concurrency phenomenon, locks taken by SQL Server at the serializable isolation level may also incorporate key-range locking to prevent new or changed rows from appearing between previously-examined index key values. Range locks are not always acquired under the serializable isolation level—all we can say in general is that SQL Server always acquires sufficient locks to meet the requirements of the isolation level. In fact, locking implementations quite often acquire more, and stricter, locks than are really needed to guarantee serializability but I digress.

Locking is just one of the possible physical implementations of the serializable isolation level. We should be careful to mentally separate the specific behaviours of the SQL Server locking implementation from the logical definition of serializable.

As an example of an alternative physical strategy, see the PostgreSQL implementation of serializable snapshot isolation though this is just one alternative. Each different physical implementation has its own strengths and weaknesses. As an aside, note that Oracle still does not provide a fully compliant implementation of the serializable isolation level. It has an isolation level named serializable, but it does not truly guarantee that transactions will execute according to some possible serial schedule. Oracle instead provides snapshot isolation when serializable is requested, in much the same way PostgreSQL did before serializable snapshot isolation (SSI) was implemented.

Snapshot isolation does not prevent concurrency anomalies like write skew, which is not possible under truly serializable isolation. If you are interested, you can find examples of write skew and other concurrency effects allowed by snapshot isolation at the SSI link above. We will also discuss the SQL Server implementation of snapshot isolation (SI) level later in this series.

A point-in-time view?

One reason I have spent time talking about the differences between logical serializability and physically serialized execution is that it is otherwise easy to infer guarantees that might not actually exist. For example, if you think of serializable transactions as actually executing one after the other, you might infer that a serializable transaction will necessarily see the database as it existed at the start of the transaction, providing a point-in-time view.

In fact, this is an implementation-specific detail. Recall the previous example, where serializable transaction T1 might legitimately count 5 or 9 rows. If a count of 9 is returned, the first transaction clearly sees rows that did not exist at the moment the transaction started. This result is possible in SQL Server but not in PostgreSQL SSI—though both implementations comply with the logical behaviours specified for the serializable isolation level.

In SQL Server, serializable transactions do not necessarily see the data as it existed at the start of the transaction. Rather, the details of the SQL Server implementation mean that a serializable transaction sees the latest committed data, as of the moment that the data was first locked for access. In addition, the set of latest-committed data ultimately read is guaranteed not to change its membership before the transaction ends. One could say the SQL Server implementation provides a point-in-time view of the data accessed during the transaction as it was at the end of the transaction.

Next time

The next part in this series covers the repeatable read isolation level, which provides weaker transaction isolation guarantees than serializable.