The ACID Properties of Statements & Transactions

Introduction

Any programmer will tell you that writing safe multi-threaded code can be hard. It requires great care and a good understanding of the technical issues involved. As a database person, you might think that these sorts of difficulties and complications do not apply when writing T-SQL. So, it may come as a bit of a shock to realize that T-SQL code is also vulnerable to the kind of race conditions and other data integrity risks most commonly associated with multi-threaded programming. This is true whether we are talking about a single T-SQL statement, or a group of statements enclosed in an explicit transaction.

At the heart of the issue is the fact that database systems allow multiple transactions to execute at the same time. This is a well-known (and very desirable) state of affairs, yet a great deal of production T-SQL code still quietly assumes that the underlying data does not change during the execution of a transaction or a single DML statement like SELECT, INSERT, UPDATE, DELETE, or MERGE.

Even where the code author is aware of the possible effects of concurrent data changes, the use of explicit transactions is too often assumed to provide more protection than is actually justified. These assumptions and misconceptions can be subtle, and are certainly capable of misleading even experienced database practitioners.

Now, there are cases where these issues will not matter much in a practical sense. For example, the database might be read-only, or there might be some other genuine guarantee that no one else will change the underlying data while we are working with it. Equally, the operation in question may not require results that are exactly correct—our data consumers might be perfectly happy with an approximate result (even one that does not represent the committed state of the database at any single point in time).

Concurrency Problems

The question of interference between concurrently executing tasks is a familiar problem to application developers working in programming languages like C#. The solutions are many and varied, but generally involve using atomic operations or obtaining a mutually-exclusive resource (such as a lock) while a sensitive operation is in progress. Where proper precautions are not taken, the likely results are corrupted data, an error, or even a crash.

Many of the same concepts (for example, atomic operations and locks) exist in the database world, but unfortunately they often have crucial differences in meaning. Most database people are aware of the ACID properties of database transactions, where the A stands for atomic. SQL Server also uses locks (and other mutual-exclusion devices internally). Neither of these terms mean quite what an experienced C# programmer would reasonably expect and many database professionals have a confused understanding of these topics as well.

To reiterate, sometimes these issues will not be a practical concern. If you write a query to count the number of active orders in a database system, how important is it if the count is a bit off? Or if it reflects the state of the database at some other point in time? Or a mixture of times?

It is common for real systems to make a trade-off between concurrency and consistency (even if the designer was not conscious of it at the time— informed trade-offs are perhaps a slightly rarer animal). Real systems often work well enough, with any anomalies short-lived or regarded as unimportant. A user seeing an inconsistent state on a web page will often resolve the issue by refreshing the page. If the issue is reported, it will most likely be closed as ‘Not Reproducible’. I am not saying this is a desirable state of affairs, just recognising that it happens.

Nevertheless, it is tremendously useful to understand concurrency issues at a fundamental level. Being aware of them enables us to write correct (or informed correct-enough) T-SQL as the circumstances require. More importantly, it allows us to avoid writing T-SQL that could compromise the logical integrity of our data.

But SQL Server provides ACID guarantees!

Yes, it does, but they are not always what you would expect, and they do not protect everything. More often than not, humans read far more into ACID than is justified.

The most frequently misunderstood components of the ACID acronym are the words Atomic, Consistent, and Isolated—we will come to those in a moment. The other one, Durable, is intuitive enough so long as you remember it applies only to persistent (recoverable) user data.

With all that said, SQL Server 2014 begins to blur the boundaries of the Durable property somewhat with the introduction of delayed durability and in-memory OLTP schema-only durability. I mention them only for completeness and will not cover these new features any further in this series.

Let’s move on to the more problematic ACID properties.

Atomic

Many programming languages provide atomic operations that can be used to protect against race conditions and other undesirable concurrency effects, where multiple threads of execution may access or modify shared data structures. For the application developer, an atomic operation comes with an explicit guarantee of complete isolation from the effects of other concurrent processing in a multi-threaded program.

An analogous situation arises in the database world where multiple T-SQL queries concurrently access and modify shared data (i.e. the database) from different threads. Note that we are not talking about parallel queries here—ordinary single-threaded queries are routinely scheduled to run concurrently within SQL Server on separate worker threads.

Unfortunately, the atomic property of SQL transactions only guarantees that data modifications performed within a transaction succeed or fail as a unit. Nothing more than that. There is certainly no guarantee of complete isolation from the effects of other concurrent processing. Note also in passing that the atomic transaction property says nothing about any guarantees for reading data.

Single statements

There is also nothing special about a single statement in SQL Server. Where an explicit containing transaction (BEGIN TRAN...COMMIT TRAN) does not exist, a single DML statement still executes within an autocommit transaction. The same ACID guarantees apply to a single statement and the same limitations as well. In particular, a single statement comes with no special guarantees that data will not change while it is in progress.

Consider the following toy AdventureWorks query:

SELECT

TH.TransactionID,

TH.ProductID,

TH.ReferenceOrderID,

TH.ReferenceOrderLineID,

TH.TransactionDate,

TH.TransactionType,

TH.Quantity,

TH.ActualCost

FROM Production.TransactionHistory AS TH

WHERE TH.ReferenceOrderID =

(

SELECT TOP (1)

TH2.ReferenceOrderID

FROM Production.TransactionHistory AS TH2

WHERE TH2.TransactionType = N'P'

ORDER BY

TH2.Quantity DESC,

TH2.ReferenceOrderID ASC

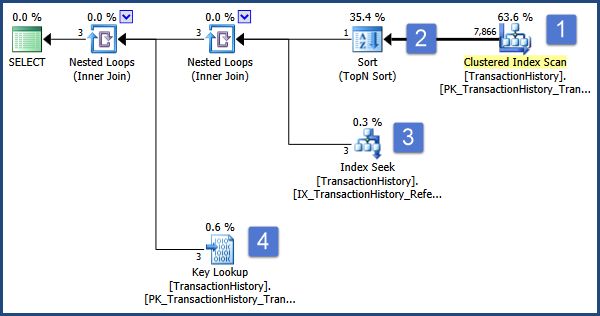

);The query is intended to display information about the Order that is ranked first by Quantity. The execution plan is as follows:

The main operations in this plan are:

- Scan the table to find rows with the required transaction type

- Find the Order ID that sorts highest according to the specification in the subquery

- Find the rows (in the same table) with the selected Order ID using a nonclustered index

- Look up the remaining column data using the clustered index

An impossible result

Now imagine that a concurrent user modifies Order 495, changing its Transaction Type from ‘P’ to ‘W’ and commits that change to the database. As luck would have it, this modification goes through while our query is performing the sort operation (step 2).

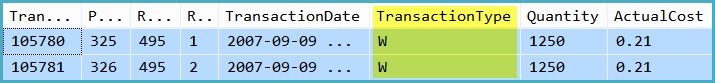

When the sort completes, the index seek at step 3 finds the rows with the selected Order ID (which happens to be 495) and the key lookup at step 4 fetches the remaining columns from the base table (where the Transaction Type is now ‘W’).

This sequence of events means our query produces an apparently impossible result:

Instead of finding orders with transaction type ‘P’ as the query specified, the results show transaction type ‘W’!

The root cause is clear: Our query implicitly assumed the data could not change while our single-statement query was in progress. The window of opportunity in this case was relatively large due to the blocking sort, but the same sort of race condition can occur at any stage of query execution, in general. Naturally, the risks are usually higher with increased levels of concurrent modifications, larger tables, and where blocking operators appear in the query plan.

Myths and chaos

Another persistent myth in the same general area is that MERGE is to be preferred over separate INSERT, UPDATE, and DELETE statements because the single-statement MERGE is atomic. That’s nonsense, of course. We will come back to this sort of reasoning later on in the series.

The general message at this point is that unless explicit steps are taken to ensure otherwise, data rows and index entries can change, move position, or disappear entirely at any time during the execution process. A mental picture of constant and random change in the database is a good one to bear in mind while writing T-SQL queries.

Consistency

The second word from the ACID acronym also has a range of possible interpretations.

In a SQL Server database, Consistency means only that a transaction leaves the database in a state that does not violate any active constraints. It is important to fully appreciate how limited that statement is: The only ACID guarantees of data integrity and logical consistency are those provided by active constraints.

SQL Server provides a limited range of constraints to enforce logical integrity, including PRIMARY KEY, FOREIGN KEY, CHECK, UNIQUE, and NOT NULL. These are all guaranteed to be satisfied at the time a transaction commits. In addition, SQL Server guarantees the physical integrity of the database at all times. One might argue that data types are also constraints and make other observations along similar lines. While true, no one would be happy about SQL Server storing a character string in an integer attribute or anything like that so I think we can take that as read.

Regardless, the built-in constraints are not always sufficient to enforce all the business and data integrity rules we need. It is certainly possible to be creative with the standard facilities, but these quickly become complex and may result in the storage of duplicated data. As a consequence, most real databases contain at least some T-SQL routines written to enforce additional rules in stored procedures and triggers. The responsibility for ensuring this code works correctly lies entirely with the author—the Consistency property provides no specific protections.

To emphasise the point, pseudo-constraints written in T-SQL have to perform correctly no matter what concurrent modifications might be occurring. An application developer might protect a sensitive operation like that with a lock statement. The closest thing T-SQL programmers have to that facility for at-risk stored procedure and trigger code is the comparatively rarely-used sys.sp_getapplock system stored procedure. That is not to say it is the only (or even preferred) option, just that it exists and can be the right choice in some circumstances.

Isolation

This is easily the most often misunderstood of the ACID transaction properties.

In principle, a completely isolated transaction executes as the only task executing against the database during its lifetime. Other transactions can only start once the current transaction has completely finished (committed or rolled back). Executed this way, a transaction would truly be an atomic operation in the strict sense that a non-database person would ascribe to the phrase.

In practice, database transactions operate instead with a degree of isolation specified by the currently effective transaction isolation level (which applies equally to stand-alone statements, remember). This compromise (the degree of isolation) is the practical consequence of the trade-offs between concurrency and correctness I mentioned earlier. A system that literally processed transactions one-by-one—with no overlap in time—would provide complete isolation but overall system throughput would likely be poor.

Next Time

The next part in this series will continue the coverage of concurrency issues, ACID properties, and transaction isolation with a detailed look at the serializable isolation level—another example of something that may not mean what you think it does.