How to Survive a Rollback (when logging)

Introduction

Logging interesting events to a database table can be very helpful when it comes to diagnosing why an error or other unexpected behaviour occurred after the fact.

For severe errors, a popular pattern uses a TRY...CATCH block. The CATCH block rolls back any open transaction, logs details, then rethrows the error to the caller.

If a chain of procedure calls is involved, each level logs its own local context. This information normally comes from local variables (perhaps including table variables), since their contents survive a rollback.

This amount of information is often sufficient to track down and remedy the cause.

The Problem

Sometimes, more context is needed to determine why a problem occurred. This context can be provided by routine logging earlier in the transaction. These events may not have directly caused the problem, but they are frequently helpful for diagnosis.

The issue is that this infomation is lost when the transaction rolls back.

If we are lucky, the error is caught in a context that has all the information needed to diagnose the problem later. More often, earlier work was performed in a different stored procedure, so the detail is not available at the time of the error. Any details logged are lost when the transaction aborts.

In another common scenario, an external application is responsible for the transaction. When the application detects an anomaly (perhaps a business rule violation), it logs the information it has then rolls back the transaction. This rollback wipes out any routine logging performed by earlier procedure calls.

In any case, the problem isn’t so much with the error handling itself, it is the loss of the extra context provided by earlier routine logging.

The Usual Solution

It would be nice to be able to write routine logging information to a database table in a way that survived any future error and rollback. Other database engines provide this facility via nested or autonomous transactions.

Whichever terminology is used, the idea is the same: Commit database changes independently of any containing transaction. If the outer transaction fails, the inner transaction still commits successfully. This is perfect for logging, which we always want to succeed.

SQL Server workaround

SQL Server does not provide this feature directly, but it can be simulated as the Microsoft SQL Programmability & API Development Team described in How to create an autonomous transaction in SQL Server 2008.

While that entry is from August 2008, it still offers the two main alternatives available today:

- Use a SQLCLR procedure to create a new database connection (with

enlist = falseto avoid joining the active transaction). - Use a ‘loopback’ linked server (with

remote proc transaction promotion = falseto avoid a distributed transaction).

In either case, the effect is that logging is performed in a transaction that commits independently of the original.

Drawbacks

Neither solution is perfect.

Not everyone can—or wants to—enable SQLCLR. It is not available in Azure SQL Database and sometimes regarded as a security risk by DBAs on other platforms.

The ‘loopback’ linked server operation will fail if it is called from a distributed transaction. You may not be using distributed transactions today, but can you ensure your code will never be called in that context?

Note: The documentation contains a warning about ‘loopback’ linked servers (emphasis added):

Linked servers can be defined to point back (loop back) to the server on which they are defined. Loopback servers are most useful when testing an application that uses distributed queries on a single server network. Loopback linked servers are intended for testing and are not supported for many operations, such as distributed transactions.

That said, both methods have been widely used for many years to enable logging to succeed when a containing transaction fails. The linked server method is more popular in my experience, despite the documentation’s warning.

Another Option

There are other creative ways to implement routine logging that survives a transaction rollback. Any method that escapes the confines of the SQL Server transaction will do.

One such idea is to write log data to an external file. File system changes certainly survive a database rollback, but SQL Server doesn’t make it particularly easy to implement, assuming xp_cmdshell does not appeal.

User-defined events

My initial idea was to use sp_trace_generateevent:

Creates a user-defined event. The event can be collected using SQL Trace or Extended Events.

ℹ️ Note

This stored procedure is not deprecated. All other SQL Trace related stored procedures are deprecated.

To capture the events fired by this stored procedure using Extended Events, add the

user_infoevent to an event session.

This procedure allows us to use one of nine available user events, writing the data to any of the usual Extended Events targets, including files. We are provided with an nvarchar(128) parameter to record the type of information logged, and a varbinary(8000) parameter to hold custom event data. This should be sufficient for most purposes.

Logging to a file isn’t exactly what I was after though. It adds a dependency outside the database, with all the attendant management, availability, and recovery concerns.

We need another ingredient.

Event notifications

Event notifications were introduced in SQL Server 2005. The description can be intimidating, perhaps because it mentions Service Broker, which has a reputation for setup complexity:

Event notifications send information about events to a Service Broker service. Event notifications execute in response to a variety of Transact-SQL data definition language (DDL) statements and SQL Trace events by sending information about these events to a Service Broker service.

Happily, custom events produced by sp_trace_generateevent are among the limited number of trace events supported by Event Notifications. The available events are named USERCONFIGURABLE_0 through USERCONFIGURABLE_9.

Service broker

We will only need a minimal Service Broker setup sufficient to receive Event Notifications, so much of the usual complexity can be avoided. Moreover, there is an important advantage (emphasis added):

If an event transaction that activates a notification is rolled back, the sending of the event notification is also rolled back. Event notifications do not fire by an action defined in a trigger when the transaction is committed or rolled back inside the trigger. Because trace events are not bound by transactions, event notifications based on trace events are sent regardless of whether the transaction that activates them is rolled back.

This is exactly the sort of autonomous transaction behaviour we need.

Solution Outline

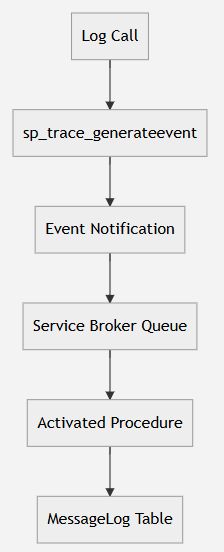

In outline, the idea is to generate log entries through the following mechanisms:

-

sp_trace_generateeventto send aUSERCONFIGURABLE_9custom event.- Identified by the

@userinfo nvarchar(128)parameter. - Log data provided in the

@userdata varbinary(8000)parameter. - The log data can use any convenient underlying structure. I chose JSON for this example.

- Identified by the

-

An Event Notification triggered by the

USERCONFIGURABLE_9custom event. -

A Service Broker queue, service, and activated stored procedure to receive and store log data in the persistent database.

Visually:

This gives us a fully supported, asynchronous, and reliable way to log messages that will survive a transaction rollback.

Example Implementation

Custom events are created at the server level, which means the associated Event Notification will originate in msdb. It makes sense therefore to create the Service Broker queue, service, and activated stored procedure in msdb.

Event Notification, Queue, and Service

The Event Notification and Service Broker elements are very straightforward to set up:

USE msdb;

GO

-- Initially disabled until we have everything ready

CREATE QUEUE dbo.MessageLogQueue

WITH

STATUS = OFF,

RETENTION = OFF;

-- Service broker service

CREATE SERVICE MessageLogService

ON QUEUE MessageLogQueue

([http://schemas.microsoft.com/SQL/Notifications/PostEventNotification]);

-- Event notification on the custom event

CREATE EVENT NOTIFICATION

MessageLogEvents

ON SERVER

FOR USERCONFIGURABLE_9

TO SERVICE

'MessageLogService',

'current database';

GOUser database elements

We need two items in each user database we want to enable for autonomous logging:

First, a table to store the logged information:

-- User database

USE Sandpit;

GO

CREATE TABLE dbo.MessageLog

(

LogTime datetime2(3) NOT NULL,

ModuleName nvarchar(257) NULL,

ProcessID smallint NOT NULL,

RequestID smallint NOT NULL,

TransactionID integer NOT NULL,

LogEntry varchar(7000) NOT NULL,

EntryTime datetime2(3) NOT NULL

DEFAULT SYSUTCDATETIME(),

INDEX [CX dbo.MessageLog LogTime]

CLUSTERED (LogTime)

WITH (DATA_COMPRESSION = ROW)

);

GOSecond, a stored procedure to generate custom events. The optional @ProcID parameter is for the caller to provide @@PROCID, identifying the calling module (if any):

-- User database

USE Sandpit;

GO

CREATE PROCEDURE dbo.LogMessage

@LogEntry varchar(7000),

@ProcID integer = 0

AS

BEGIN

DECLARE

@rc integer = -999,

@data varbinary(8000);

-- Construct the user event payload

SET @data =

TRY_CONVERT

(

varbinary(8000),

(

SELECT

ProcessID = @@SPID,

RequestID = CURRENT_REQUEST_ID(),

TransactionID = CURRENT_TRANSACTION_ID(),

LogTime = CONVERT(datetime2(3), SYSUTCDATETIME()),

DatabaseName = DB_NAME(),

ModuleName =

QUOTENAME(OBJECT_SCHEMA_NAME(@ProcID)) +

N'.' +

QUOTENAME(OBJECT_NAME(@ProcID)),

LogEntry = @LogEntry

FOR JSON PATH,

WITHOUT_ARRAY_WRAPPER

)

);

-- Check JSON packaging was successful

IF @data IS NULL

BEGIN

RAISERROR

(

'Unable to generate event JSON',

16,

1

);

RETURN -2;

END;

-- Generate the custom event

IF ISJSON(TRY_CONVERT(nvarchar(max), @data)) = 1

BEGIN

EXECUTE @rc =

sys.sp_trace_generateevent

-- USERCONFIGURABLE_9

@event_id = 91,

-- Identify this as a message log event

@user_info = N'{Event Notification: MessageLog}',

-- Log payload

@user_data = @data;

END;

-- Check for problems sending the custom event

IF @rc <> 0

BEGIN

RAISERROR

(

'sp_trace_generateevent failed, result code = %i',

16,

1,

@rc

);

RETURN @rc;

END;

-- Done

RETURN @rc;

END;

GOMost of the code is concerned with packaging some example log data into JSON format and converting it to varbinary(8000) for sp_trace_generateevent.

Note: The log message is limited to 7000 bytes to ensure the whole package fits in varbinary(8000). If you choose to log additional fixed data, reduce the maximum log message length so the JSON still fits in varbinary(8000).

I chose JSON over XML for the example because OPENJSON has a nice WITH clause to specify the layout. This is just a personal preference; structured text or XML could be made to work just as well.

As a reminder, both the log table and stored procedure need to be present in any user database using this example autonomous logging scheme. You could obviously implement this differently using a central logging location, or even sending the events to a remote server.

Activated stored procedure

The activated stored procedure in msdb runs automatically whenever Service Broker has messages that need processing:

USE msdb

GO

-- Activation procedure to handle received event notifications

CREATE PROCEDURE dbo.MessageLog_Activated

AS

SET NOCOUNT ON;

BEGIN TRY

BEGIN TRANSACTION;

-- Working variables

DECLARE

@Handle uniqueidentifier,

@MessageType nvarchar(256),

@XML xml,

@JSON nvarchar(max),

@SQL nvarchar(max),

@DatabaseName sysname;

-- Retrieve a message from the queue, if available

RECEIVE TOP (1)

@Handle = [conversation_handle],

@MessageType = message_type_name,

@XML = TRY_CONVERT(xml, message_body)

FROM dbo.MessageLogQueue;

IF @Handle IS NOT NULL

BEGIN

-- Check it is an Event Notification

IF @MessageType =

N'http://schemas.microsoft.com/SQL/Notifications/EventNotification'

BEGIN

-- Specifically for us

IF N'{Event Notification: MessageLog}' =

@XML.value

(

'(/EVENT_INSTANCE/TextData)[1]',

'nvarchar(128)'

)

BEGIN

-- Convert the BinaryData message payload to JSON

SET @JSON =

TRY_CONVERT

(

nvarchar(max),

@XML.value

(

'(/EVENT_INSTANCE/BinaryData)[1]',

'varbinary(8000)'

)

);

-- Check the JSON is valid

IF @JSON IS NULL OR ISJSON(@JSON) = 0

BEGIN

RAISERROR

(

'Invalid JSON message %s',

16,

1,

@JSON

);

RETURN -1;

END;

-- Target database for the log message

SET @DatabaseName =

JSON_VALUE(@JSON, '$.DatabaseName');

-- Check the database is visible

IF DB_ID(@DatabaseName) IS NULL

BEGIN

RAISERROR

(

'Cannot access database, DatabaseName = %s',

16,

1,

@DatabaseName

);

RETURN -2;

END;

-- Dynamic SQL to insert the log message to

-- the target database

SET @SQL =

CONCAT

(

N'

INSERT ',

QUOTENAME(PARSENAME(@DatabaseName, 1)),

N'.dbo.MessageLog

(

LogTime,

ModuleName,

ProcessID,

RequestID,

TransactionID,

LogEntry

)

SELECT

LogTime,

ModuleName,

ProcessID,

RequestID,

TransactionID,

LogEntry

FROM OPENJSON(@JSON)

WITH

(

LogTime datetime2(3) ''$.LogTime'',

ModuleName nvarchar(257) ''$.ModuleName'',

ProcessID smallint ''$.ProcessID'',

RequestID smallint ''$.RequestID'',

TransactionID integer ''$.TransactionID'',

LogEntry varchar(7000) ''$.LogEntry''

);

'

);

-- Run the dynamic insert

EXECUTE sys.sp_executesql

@stmt = @SQL,

@params = N'@JSON nvarchar(max)',

@JSON = @JSON;

END; -- {Event Notification: MessageLog}

END; -- @MessageType = @EventNotification

ELSE IF @MessageType IN

(

N'http://schemas.microsoft.com/SQL/ServiceBroker/EndDialog',

N'http://schemas.microsoft.com/SQL/ServiceBroker/Error'

)

BEGIN

END CONVERSATION @Handle;

END;

ELSE

BEGIN

RAISERROR

(

'Received unexpected message %s',

16,

1,

@MessageType

);

END;

END; -- @Handle IS NOT NULL

-- Success

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

IF XACT_STATE() <> 0 ROLLBACK;

THROW;

END CATCH;

GOThis procedure only exists in msdb and is responsible for writing each event to the correct target database.

Note: Activated stored procedures are usually strongly constrained to the local database context due to the EXECUTE AS clause in the queue definition.

Using msdb works around this limitation without having to sign the activated stored procedure to write to log tables in other databases. Microsoft marks msdb as TRUSTWORTHY and the database is owned by sa. This allows the activated stored procedure to escape the sandbox.

Final step

With all the elements in place, we can now enable the Service Broker queue and associate the activated stored procedure with it:

USE msdb;

GO

-- Activate

ALTER QUEUE dbo.MessageLogQueue

WITH

STATUS = ON,

ACTIVATION

(

STATUS = ON,

PROCEDURE_NAME = MessageLog_Activated,

MAX_QUEUE_READERS = 1,

EXECUTE AS OWNER

);Depending on the expected log volume and number of databases involved, you might choose to allow a higher number of queue readers.

Testing

The following code creates a procedure and logs a test message, all within a transaction that is rolled back:

BEGIN TRANSACTION;

GO

CREATE OR ALTER PROCEDURE dbo.Test

AS

BEGIN

SET NOCOUNT ON;

EXECUTE dbo.LogMessage

@LogEntry = 'Test message',

@ProcID = @@PROCID;

END;

GO

EXECUTE dbo.Test;

GO

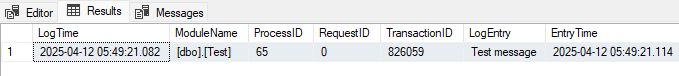

ROLLBACK TRANSACTION;Querying the logging table confirms the message survived the rollback:

SELECT

LogTime,

ModuleName,

ProcessID,

RequestID,

TransactionID,

LogEntry,

EntryTime

FROM dbo.MessageLog;

Notice that even though this was the very first message logged after a fresh installation, the message arrived in the destination table 32 milliseconds after being submitted.

Service Broker guarantees message delivery, even after a failover, instance restart, or even database restore (assuming transaction logs are intact).

Cleaning up

Once you have finished with the example, the following script will clean everything up:

USE msdb;

GO

-- Clean up autonomous logging components

IF EXISTS

(

SELECT *

FROM sys.server_event_notifications

WHERE [name] = N'MessageLogEvents'

)

BEGIN

DROP EVENT NOTIFICATION

MessageLogEvents

ON SERVER;

END;

IF EXISTS

(

SELECT *

FROM sys.services

WHERE [name] = N'MessageLogService'

)

BEGIN

DROP SERVICE MessageLogService;

END;

IF OBJECT_ID(N'dbo.MessageLogQueue', 'SQ') IS NOT NULL

BEGIN

DROP QUEUE dbo.MessageLogQueue;

END;

DROP PROCEDURE IF EXISTS

dbo.MessageLog_Activated;

GO

-- For each user database

USE Sandpit;

GO

DROP PROCEDURE IF EXISTS

dbo.LogMessage;

DROP TABLE IF EXISTS

dbo.MessageLog;

GOFinal Thoughts

Using a SQLCLR procedure or ‘loopback’ linked server to simulate autonomous SQL Server transactions is still a simpler option, if you can accept the limitations.

I present this option as an interesting alternative that the reader is encouraged to try out and customise to their own needs and preferences. For high log volumes, monitor queue performance and consider increasing MAX_QUEUE_READERS as necessary.

I like the idea of using less well-known features like custom trace events and Event Notifications to simplify what could otherwise be a moderately complex, fully Service Broker scheme.

Using msdb is a convenience for ease of demonstration. It simplifies several implementation aspects, but not everyone will be comfortable with this arrangement.

Choosing a different (new or existing) database to host the central services is an option, but module signing will be needed to allow the activated stored procedure permissions to write to other databases. You will also need to ensure ENABLE_BROKER is set for the database (msdb always has this enabled).

Perhaps one day SQL Server will support autonomous transactions natively. Until then, this is another option to consider.