Updating in Batches using an API Cursor

My previous article showed how to batch a monolithic DELETE statement using an API cursor. This article covers batched updates with a similar technique, though many of the details are new and specific to API cursor updates.

As a reminder, batching can reduce resource usage, improve concurrency, and avoid overwhelming the transaction log, especially in large-scale operations.

Objective

Today’s task involves updating a temporary table that currently holds product IDs found in the AdventureWorks sample database.

The temporary table has a second column holding the number of matching TransactionHistory rows for each ProductID, but all entries are currently NULL:

DROP TABLE IF EXISTS #P;

GO

SELECT

P.ProductID,

HistoryCount = CONVERT(bigint, NULL)

INTO #P

FROM Production.Product AS P;Updating All at Once

AdventureWorks is a small database, so it would be trivial to write a single UPDATE statement to achieve the desired outcome:

UPDATE #P

SET HistoryCount =

(

SELECT COUNT_BIG(*)

FROM Production.TransactionHistory AS TH

WHERE TH.ProductID = #P.ProductID

);Still, I want you to imagine the database is much larger and the update is more expensive to perform. For that and the other practical reasons I described last time, a decision has been made to perform a batched update.

This task is made more difficult by the temporary table being a heap with no indexes. We are also told we are not allowed to create new indexes.

Using a T-SQL Cursor

We could rewrite the update using a T-SQL cursor, but this would mean updating one row at a time:

SET NOCOUNT, XACT_ABORT ON;

SET STATISTICS XML OFF;

DECLARE

@ProductID integer,

@HistoryCount bigint;

DECLARE

@Update CURSOR;

SET @Update = CURSOR

LOCAL

FORWARD_ONLY

DYNAMIC

SCROLL_LOCKS

TYPE_WARNING

FOR

SELECT

ProductID,

HistoryCount

FROM #P

FOR UPDATE

OF HistoryCount;

OPEN @Update;

WHILE 1 = 1

BEGIN

FETCH NEXT

FROM @Update

INTO @ProductID, @HistoryCount;

IF @@FETCH_STATUS = -1 BREAK;

UPDATE #P

SET HistoryCount =

(

SELECT COUNT_BIG(*)

FROM Production.TransactionHistory AS TH

WHERE TH.ProductID = @ProductID

)

WHERE CURRENT OF @Update;

END;One thing to notice is the WHERE CURRENT OF syntax on the UPDATE. This allows the per-row update to seek into the heap (which has no indexes, remember) using the bookmark RID for the current row, which is saved in the cursor’s worktable (CWT):

WHERE CURRENT OF cursor syntax

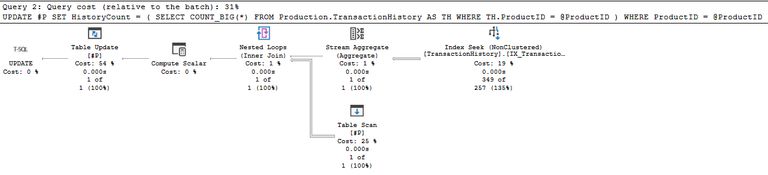

We might have been tempted to write the per-row update as:

UPDATE #P

SET HistoryCount =

(

SELECT COUNT_BIG(*)

FROM Production.TransactionHistory AS TH

WHERE TH.ProductID = @ProductID

)

WHERE ProductID = @ProductID;The problem is that would require a full scan of the temporary table for each single row update, because there is no index on ProductID:

All that aside, even the optimized T-SQL cursor is deemed too slow for our needs. While a T-SQL cursor would work correctly, its row-by-row nature means it is less efficient than updating in batches.

Let’s look at using an API cursor instead.

Using an API Cursor

Opening the API cursor and fetching the first batch of (say) 50 rows uses syntax very similar to my previous article, so I won’t repeat all the descriptions and reasoning here:

DECLARE @cursor integer;

DECLARE @P table

(ProductID integer NULL);

-- Open the cursor, suppressing the empty result set

INSERT TOP (0) @P

EXECUTE sys.sp_cursoropen

@cursor OUTPUT,

N'SELECT ProductID FROM #P;',

-- DYNAMIC,

-- CHECK_ACCEPTED_TYPES, DYNAMIC_ACCEPTABLE

163842,

-- SCROLL_LOCKS,

-- CHECK_ACCEPTED_OPTS, SCROLL_LOCKS_ACCEPTABLE

163842;

-- Fetch the next batch into the cursor

INSERT TOP (0) @P

EXECUTE sys.sp_cursorfetch

@cursor,

-- optype = NEXT

2,

-- rownum; required but ignored for this optype

0,

-- Batch size

50;

-- ===================

-- Update will go here

-- ===================

-- Close the cursor

EXECUTE sys.sp_cursorclose @cursor;See my previous article and the API cursor extended stored procedure documentation for sp_cursoropen and sp_cursorfetch for details of the bit flags used above.

Now we have the first 50 rows in the cursor, we need to work out how to perform the update.

API Cursor Update Syntax

The documentation for sp_cursor covers how to use it for update operations, but while the information is all there, it is not a model of clarity. A brief digression to address this.

Named parameters

The first syntax option is used when setting one or more columns to a single value. The single value can be a literal, a variable (including some intrinsics like @@SPID), but not an expression or function call. These are just the normal limitations of T-SQL procedure parameters.

The unique thing about this first syntax is you name the parameter the same as the column you want to update, prefixed by @.

For example, to set the HistoryCount column to zero for all rows in the current batch:

EXECUTE sys.sp_cursor

@cursor,

-- optype = UPDATE

1,

-- rownum; 0 = all rows in the buffer

0,

-- Target table name

N'#P',

-- Column to update

@HistoryCount = 0;To update multiple columns, add new @-prefixed column name parameters to the call.

The primary advantage of this syntax is you can provide a literal or variable matching the column’s data type without expressing it as a string.

The target table name is optional when there is only one table in the cursor’s source statement, but only in the sense that it can be an empty string. Passing NULL produces an error, as does omitting the parameter.

String syntax

The second update syntax is much more powerful, but requires a string, and the parameter is not named for the target column. The string could be as simple as 'HistoryCount = 0', but much more flexibility is available.

For our present purposes, we can write the following:

EXECUTE sys.sp_cursor

@cursor,

-- optype = UPDATE

1,

-- All rows in the buffer

0,

-- Target table name

N'#P',

-- Column change specification

N'

HistoryCount =

(

SELECT COUNT_BIG(*)

FROM Production.TransactionHistory AS TH

WHERE TH.ProductID = #P.ProductID

)

';Notice I am referencing a row in the source table by prefixing the column name with #P, making this a correlated subquery.

The change specification is essentially a SET clause that gets built into a complete update statement. This might remind you of constructing dynamic SQL.

You can write the SET in the change specification explicitly if you like, and even add UPDATE #P to that if you really enjoy typing:

-- Also valid syntax for the change spec string

N'

UPDATE #P

SET HistoryCount =

(

SELECT COUNT_BIG(*)

FROM Production.TransactionHistory AS TH

WHERE TH.ProductID = #P.ProductID

)

';If you have more than one column to update, each requires a separate string passed as an extra unnamed parameter. With multiple columns, only the first string can include SET, and none are allowed the optional UPDATE prefix.

We now have everything we need to perform the batched update using an API cursor.

Completed update script

Bringing everything together for a full demo:

SET NOCOUNT, XACT_ABORT ON;

-- No execution plans

SET STATISTICS XML OFF;

GO

DROP TABLE IF EXISTS #P;

GO

-- Table to update

SELECT

P.ProductID,

HistoryCount = CONVERT(bigint, NULL)

INTO #P

FROM Production.Product AS P;

GO

-- API cursor implementation

DECLARE

@cursor integer,

@fetch_buffer_count integer;

-- Used to consume result sets and avoid row count messages

DECLARE @P table

(ProductID integer NULL);

-- Open a dynamic cursor

INSERT TOP (0) @P

EXECUTE sys.sp_cursoropen

@cursor OUTPUT,

N'SELECT ProductID FROM #P;',

-- DYNAMIC,

-- CHECK_ACCEPTED_TYPES, DYNAMIC_ACCEPTABLE

163842,

-- SCROLL_LOCKS,

-- CHECK_ACCEPTED_OPTS, SCROLL_LOCKS_ACCEPTABLE

163842;

-- Fetch and update loop

WHILE 1 = 1

BEGIN

-- Fetch and lock the next batch of rows

INSERT TOP (0) @P

EXECUTE sys.sp_cursorfetch

@cursor,

-- optype = NEXT

2,

-- rownum; required but ignored for this optype

0,

-- Batch size

50;

-- Get the number of rows fetched

SELECT

@fetch_buffer_count = fetch_buffer_size

FROM sys.dm_exec_cursors(@@SPID)

WHERE

cursor_id = @cursor;

-- Finished?

IF @fetch_buffer_count = 0 BREAK;

-- Perform a set-based update on the current batch

INSERT TOP (0) @P

EXECUTE sys.sp_cursor

@cursor,

-- optype = UPDATE

1,

-- All rows in the buffer

0,

-- Target table name

N'#P',

-- Column change specification

-- Note the correlation to #P.ProductID

N'

HistoryCount =

(

SELECT COUNT_BIG(*)

FROM Production.TransactionHistory AS TH

WHERE TH.ProductID = #P.ProductID

)

';

END;

-- Close the cursor

EXECUTE sys.sp_cursorclose @cursor;

GO

-- Show the results

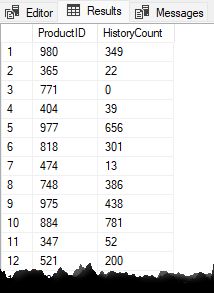

SELECT * FROM #P;Result

This script correctly updates the HistoryCount column in the target table, 50 rows at a time:

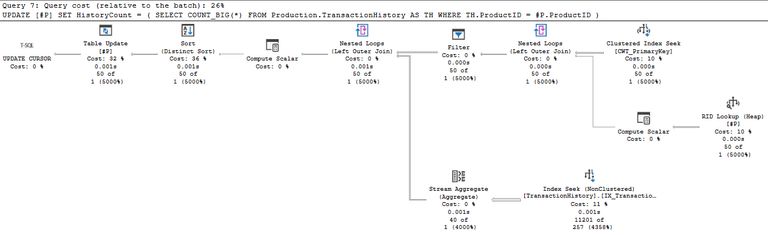

The execution plan for each batch update is:

Notice the final form of the cursor’s constructed UPDATE statement shown in the header.

As with the batched delete I described last time, this is a set-based plan changing up to 50 rows on each execution. The cursor does not submit a separate update for each row.

While API cursors are a little more complex—and less well documented—than their T-SQL counterparts, they can offer a scalable way to handle large updates efficiently without crafting custom batching logic. This is especially true for unindexed tables.

Thanks for reading.