Deleting in Batches using an API Cursor

Introduction

Splitting an expensive operation on a large number of rows into a series of smaller batches is a popular technique when concurrency and transaction log management are more important than raw speed.

You may already be familiar with Michael J. Swart’s popular blog posts on the topic:

Michael covers efficiently locating batch start and end points using an index on the underlying table in the first post. In the second, he addresses some common questions around different indexing strategies and considerations.

You may also have your own favourite method for batching large operations.

The example in Michael’s posts is deleting rows in batches. In this article, I’m going to describe another way to perform this task using a SQL Server API cursor.

Demo

Michael used the Microsoft Contoso BI Demo Dataset for Retail Industry sample. Only the ContosoRetailDW database backup (.bak) is needed. Keep the backup handy because the test is destructive, unless you remember to wrap it in a transaction and roll back.

The task is to delete all rows from the FactOnlineSales table for a particular customer. The code Michael uses to delete rows in batches of 1,000 rows is:

DECLARE

@LargestKeyProcessed INT = -1,

@NextBatchMax INT,

@RC INT = 1;

WHILE (@RC > 0)

BEGIN

SELECT TOP (1000) @NextBatchMax = OnlineSalesKey

FROM FactOnlineSales

WHERE OnlineSalesKey > @LargestKeyProcessed

AND CustomerKey = 19036

ORDER BY OnlineSalesKey ASC;

DELETE FactOnlineSales

WHERE CustomerKey = 19036

AND OnlineSalesKey > @LargestKeyProcessed

AND OnlineSalesKey <= @NextBatchMax;

SET @RC = @@ROWCOUNT;

SET @LargestKeyProcessed = @NextBatchMax;

ENDTo cut straight to the chase, here’s a minimal implementation using an API cursor:

DECLARE

@cursor integer,

@SQL nvarchar(max) =

N'

SELECT OnlineSalesKey

FROM dbo.FactOnlineSales

WHERE CustomerKey = 19036;

';

EXECUTE sys.sp_cursoropen @cursor OUTPUT, @SQL, 2, 2;

WHILE 1 = 1

BEGIN

EXECUTE sys.sp_cursorfetch @cursor, 2, 0, 1000;

IF @@ROWCOUNT = 0 BREAK;

EXECUTE sys.sp_cursor @cursor, 2, 0;

END;

EXECUTE sys.sp_cursorclose @cursor;It’s not necessary (or recommended) at this stage, but if you choose to run the API cursor script now, please turn off SSMS actual execution plans. There are 45,100 rows to delete so you’ll get 46 sets of plans, which is generally a challenge for 32-bit SSMS memory limits and may even cause an application crash.

The API cursor implementation is quite compact, but not entirely self-documenting. The broad idea is to:

- Open a cursor over the rows we want to delete (

sp_cursoropen). - Fetch 1,000 rows at a time into the cursor (

sp_cursorfetch). - Delete the current batch of rows from the underlying table (

sp_cursor). - Repeat from step 2 until we run out of rows to delete.

Step 2 highlights the most important benefit of an API cursor over T-SQL cursors: API cursors can be block (or ‘fat’) cursors.

Block cursors allow multiple rows to be processed by a single cursor call.

A More Complete Implementation

There are a number of issues with the simple API block cursor implementation presented just above. The two most important are:

- Unwanted result sets are produced by each extended stored procedure call.

- There is a

(1 row affected)message for each deleted row.

Both issues affect performance, the second one dramatically so. Happily, they can be addressed by inserting the result of the extended stored procedure calls into a table using INSERT...EXEC.

Note: The “row(s) affected” messages returned by sp_cursor cannot be suppressed with SET NOCOUNT ON because cursor libraries rely on them for correct operation.

The improved implementation (with code comments) is:

SET NOCOUNT, XACT_ABORT ON;

SET STATISTICS XML OFF;

DROP TABLE IF EXISTS #Audit;

CREATE TABLE #Audit

(

OnlineSalesKey integer NOT NULL PRIMARY KEY

);

DECLARE

@cursor integer,

@stmt nvarchar(max) =

N'

SELECT

OnlineSalesKey

FROM dbo.FactOnlineSales

WHERE

CustomerKey = @ck;

',

@scrollopt integer =

-- DYNAMIC, PARAMETERIZED_STMT,

-- CHECK_ACCEPTED_TYPES, DYNAMIC_ACCEPTABLE

2 | 4096 | 32768 | 131072,

@ccopt integer =

-- SCROLL_LOCKS, CHECK_ACCEPTED_OPTS,

-- SCROLL_LOCKS_ACCEPTABLE

2 | 32768 | 131072,

@paramdef nvarchar(4000) = N'@ck integer',

@rowcount integer = 0,

@batch_size integer = 1000,

@fetch_buffer_count integer;

-- Open the cursor, suppressing the empty result set

INSERT TOP (0) #Audit

EXECUTE sys.sp_cursoropen

@cursor OUTPUT,

@stmt,

@scrollopt OUTPUT,

@ccopt OUTPUT,

@rowcount OUTPUT,

@paramdef,

@ck = 19036;

-- Fetch and delete loop

WHILE 1 = 1

BEGIN

-- To ensure #Audit contains only successfully deleted rows

BEGIN TRANSACTION;

-- Fetch the next batch

INSERT TOP (0) #Audit

EXECUTE sys.sp_cursorfetch

@cursor,

2, -- fetchtype NEXT

0, -- rownum, required but ignored for NEXT

@batch_size; -- numrows, maximum rows to fetch

-- Get the number of rows in the cursor

SELECT

@fetch_buffer_count = fetch_buffer_size

FROM sys.dm_exec_cursors(@@SPID)

WHERE

cursor_id = @cursor;

-- Finished?

IF @fetch_buffer_count = 0

BEGIN

-- Complete the transaction

ROLLBACK TRANSACTION;

-- Exit the WHILE loop

BREAK;

END;

-- Do the DELETE, suppressing row count messages

INSERT TOP (0) #Audit

EXECUTE sys.sp_cursor

@cursor,

2, -- optype = DELETE

0; -- rownum = all rows in the buffer

COMMIT TRANSACTION;

END;

-- Close the cursor

EXECUTE sys.sp_cursorclose @cursor;While an improved implementation in many important respects, it is not completely finished. For example, you could add a TRY...CATCH block to ensure the cursor is closed if an error occurs. API cursors are always global, so they remain allocated and active until closed or the connection is terminated. The focus here is to explain the operation and optimization of API cursors, not to provide fully production-quality code.

That said, let’s now cover the improvements one at a time and look at the execution plans.

Parameterized cursor open

I have taken the opportunity to parameterize the cursor’s opening statement.

DECLARE

@cursor integer,

@stmt nvarchar(max) =

N'

SELECT

OnlineSalesKey

FROM dbo.FactOnlineSales

WHERE

CustomerKey = @ck;

',

@scrollopt integer =

-- DYNAMIC, PARAMETERIZED_STMT,

-- CHECK_ACCEPTED_TYPES, DYNAMIC_ACCEPTABLE

2 | 4096 | 32768 | 131072,

@ccopt integer =

-- SCROLL_LOCKS, CHECK_ACCEPTED_OPTS,

-- SCROLL_LOCKS_ACCEPTABLE

2 | 32768 | 131072,

@paramdef nvarchar(4000) = N'@ck integer',

@rowcount integer = 0,

@batch_size integer = 1000,

@fetch_buffer_count integer;

-- Open the cursor, suppressing the empty result set

INSERT TOP (0) #Audit

EXECUTE sys.sp_cursoropen

@cursor OUTPUT,

@stmt,

@scrollopt OUTPUT,

@ccopt OUTPUT,

@rowcount OUTPUT,

@paramdef,

@ck = 19036;There’s no particular advantage to doing so here—simple parameterization would be applied anyway—but the documentation for sp_cursoropen doesn’t describe how to parameterize the cursor opening call at all well. The required syntax is quite similar to sp_executesql.

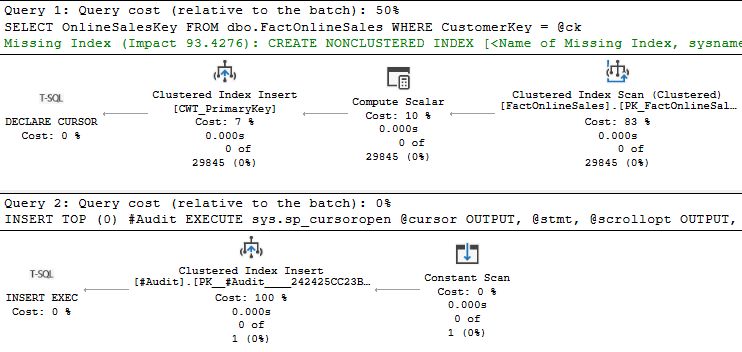

The execution plan is:

This completes very quickly because no rows are retrieved during this open call.

Note: The TOP (0) does not affect the number of rows processed by the cursor in any of the API calls (first plan above); it only applies to the extended stored procedure’s final output result set captured by INSERT...EXEC (second plan).

Block fetch

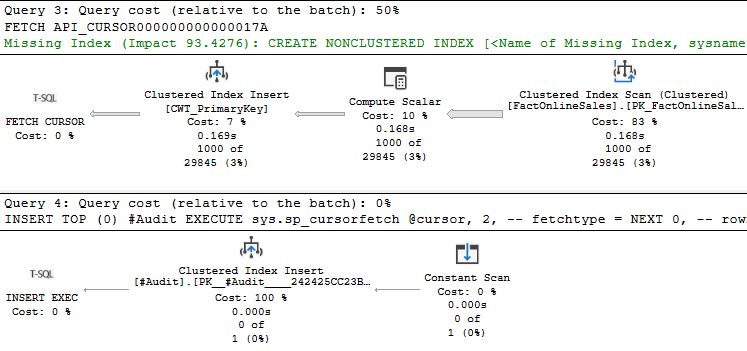

The sp_cursorfetch call finds up to 1,000 matching rows and inserts them into the block cursor’s worktable:

-- Fetch the next batch

INSERT TOP (0) #Audit

EXECUTE sys.sp_cursorfetch

@cursor,

2, -- fetchtype NEXT

0, -- rownum, required but ignored for NEXT

@batch_size; -- numrows, maximum rows to fetch

The Compute Scalar operator determines the cursor worktable (CWT) row ID using an internal intrinsic function:

[Expr1004] = Scalar Operator(CWT_ROWID())I chose not to add fetched OnlineSalesKey values to the #Audit table by retaining the TOP (0) on the INSERT...EXEC. This was so I could show you how to obtain the number of rows fetched into the cursor from the sys.dm_exec_cursors DMV using the API cursor’s handle.

-- Get the number of rows in the cursor

SELECT

@fetch_buffer_count = fetch_buffer_size

FROM sys.dm_exec_cursors(@@SPID)

WHERE

cursor_id = @cursor;Removing the TOP (0) from the sp_cursorfetch call would add fetched rows to the #Audit table and allow a check for no more rows using @@ROWCOUNT or ROWCOUNT_BIG() instead of the DMV.

You could use the @fetch_buffer_count to provide progress reporting via RAISERROR messages. For example:

RAISERROR

(

'Processed %i rows', 0, 1,

@fetch_buffer_count

)

WITH NOWAIT;Set delete

The sp_cursor call deletes rows from the underlying table using the cursor’s worktable as a source. Specifying zero for the @rownum parameter applies the requested DELETE operation to all rows in the fetch buffer.

-- Do the DELETE, suppressing row count messages

INSERT TOP (0) #Audit

EXECUTE sys.sp_cursor

@cursor,

2, -- optype = DELETE

0; -- rownum = all rows in the bufferNote: This is a set-based DELETE. The API cursor does not delete rows one at a time using separate statements.

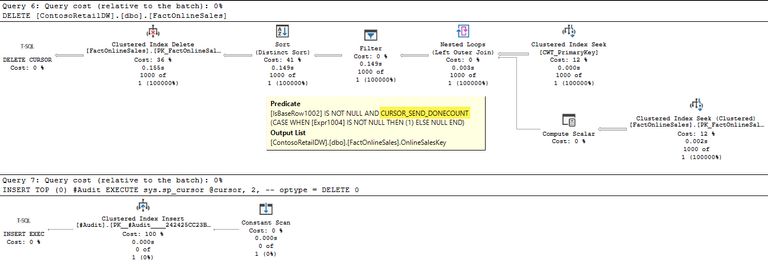

The execution plan is:

The interesting feature of the DELETE plan is the internal intrinsic call to CURSOR_ in the Filter operator (of all places).

This is responsible for the (1 row affected) messages, which can cause ASYNC_NETWORK_IO waits that cannot be suppressed using SET NOCOUNT ON. The INSERT...EXEC addresses this issue as well as preventing each sp_cursor call returning an empty result set.

Other Details

While the foregoing covers how the improved API cursor implementation works at a high level, there are a few other aspects I’d like to address.

Cursor type

The first choice I had to make was the type of cursor to use. The three basic API cursor choices are KEYSET, DYNAMIC, or STATIC.

You might be used to preferring STATIC cursors in T-SQL—at least when you expect to process every row in the cursor. A static cursor isn’t an option here because this type is always read only. We need to delete rows through the cursor, so it needs to be updateable.

API cursors do also provide FAST_FORWARD and FORWARD_ONLY types, but they differ from the T-SQL cursor implementation.

FAST_FORWARD API cursors support AUTO_FETCH (to return the first batch when the cursor is opened) and AUTO_CLOSE (closing the cursor automatically when the end is reached), but are always forward only and read only. The optimizer chooses between dynamic and static implementations when FAST_FORWARD is specified.

FORWARD_ONLY API cursors are forward only as the name suggests, but are always dynamic. This differs from T-SQL, where “forward only” is an attribute that can be applied to static, keyset, and dynamic cursors.

A KEYSET cursor would work, but this adds unnecessary latency and overhead by saving the complete set of row keys to delete in the worktable when the cursor is opened.

The FactOnlineSales table has 12,582,129 rows with only a unique clustered index associated with the OnlineSalesKey primary key column, so a full scan will be required. Doing this full scan all at once when opening the cursor defeats some of the purpose of batching.

This leaves us with a DYNAMIC cursor. This type doesn’t add anything to the cursor’s worktable during sp_cursoropen, so the call returns almost immediately.

I could also have specified a FORWARD_ONLY cursor as noted above, but I was trying to keep things simple.

Note: A dynamic cursor over a seek or scan does not start from scratch on every fetch. The cursor saves its scan position and continues from the saved point when asked for the next set of rows. This is a crucial concept to understand.

Cursor conversion

If we were to simply request a DYNAMIC cursor, the server might change the delivered cursor type. This happens when the underlying query or table does not support the requested type.

Dynamic cursors have many execution engine restrictions because the execution plan must be capable of stopping, saving its position, and moving an arbitrary number of rows in either direction on the next fetch. This implies a pipelined plan shape with only operators capable of flexible repositioning.

To avoid possible conversion, I specified the only acceptable cursor option as dynamic via the opening call’s @scrollopt parameter.

@scrollopt integer =

-- DYNAMIC, PARAMETERIZED_STMT,

-- CHECK_ACCEPTED_TYPES, DYNAMIC_ACCEPTABLE

2 | 4096 | 32768 | 131072,With this addition, if the server cannot deliver the required dynamic plan, it will throw a runtime error instead of changing to a keyset. Setting XACT_ABORT ON ensures this error will roll back any open transaction and terminate execution.

Cursor concurrency

To open the cursor, we also need to decide whether the updateable cursor should use optimistic or pessimistic concurrency.

The optimistic approach uses a rowversion or (engine-supplied) checksum value to detect when someone else changes the underlying table row(s) after we read them into our cursor. If the check determines we are using out-of-date data, our changes will fail with a concurrency violation error.

The pessimistic option takes and holds update (U) locks on base table rows copied into the cursor until the cursor moves on. These locks mean that changes to rows in the current fetch buffer are guaranteed to succeed. The U locks mean no one else can update or delete these particular base table rows while we have them in the cursor’s buffer.

For our purposes, it makes sense to use the pessimistic approach. We’re going to delete the current batch of rows anyway, so taking U locks while reading is a small price to pay for guaranteed success.

In the absence of a rowversion column, optimistic concurrency would require computing a checksum and checking it at runtime. This simply adds overhead we don’t need.

Note: We never lock more than 1,000 rows at any one time, so lock escalation will not be triggered.

The scroll locks are scoped to the cursor, not any containing user transaction.

To ensure the requested concurrency option is delivered, I set the @ccopt parameter so that only SCROLL_LOCKS are acceptable.

@ccopt integer =

-- SCROLL_LOCKS, CHECK_ACCEPTED_OPTS,

-- SCROLL_LOCKS_ACCEPTABLE

2 | 32768 | 131072,Auditing and roll back

When splitting a (previously atomic) operation into batches, it is important to consider recovery if something goes wrong during a later batch. Erik Darling discusses this in Batching Modification Queries Is Great Until…, where his solution is to save pre-change rows to a table using the OUTPUT clause.

The equivalent in our API cursor implementation is the #Audit table, populated directly from the sp_cursorfetch call.

It would be better to do this in the sp_cursor call that performs the delete. Unfortunately, the sp_cursor result set is always empty for data-changing operations. To ensure rows are only saved to the #Audit table when deletion is successful, the improved script uses a user transaction per batch.

Note: Be careful not to use a table variable to save pre-change rows here. Table variable contents are not affected by user transactions. A roll back due to a failed deletion should remove the just-fetched rows from the audit table.

The #Audit table in my example only saves OnlineSalesKey values. This is sheer laziness on my part. A delete would normally save all non-derived columns for recovery or audit purposes.

Remember to remove the TOP (0) on the sp_cursorfetch call if you want to test saving deleted keys to the temporary table. The TOP (0) clauses on the other extended stored procedure calls are required for good performance, especially on the sp_cursor call.

-- Fetch the next batch and save the fetched rows

-- (TOP (0) removed)

INSERT #Audit

EXECUTE sys.sp_cursorfetch

@cursor,

2, -- fetchtype NEXT

0, -- rownum, required but ignored for NEXT

@batch_size; -- numrows, maximum rows to fetchPerformance

Comparing performance is not a primary goal of this article, but people are bound to be curious.

On a freshly restored copy of the ContosoRetailDW database on SQL Server 2022 CU18 Developer Edition, I found the API cursor to be a bit faster overall:

| Swart Method | API Cursor |

|---|---|

| 2.5s | 2.2s |

Both methods correctly deleted all the qualifying rows.

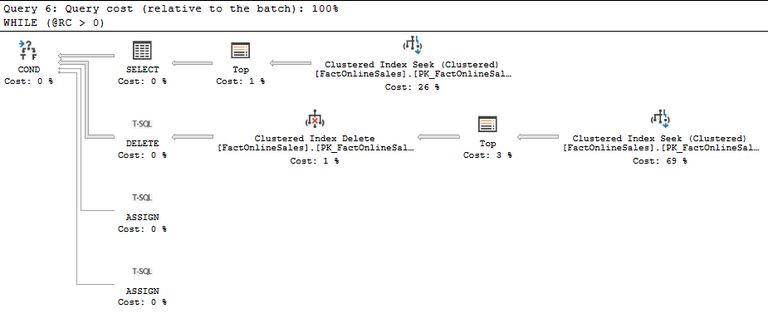

This is a slightly surprising result at first glance because the API cursor approach has worktable overhead and a less efficient delete plan than the Swart method:

The explanation is that Michael’s method ends up applying the CustomerKey test as a residual predicate twice, once in the SELECT query (to find the current batch’s index end point) and again in the DELETE to qualify rows in the range.

The API cursor only fetches rows that qualify for deletion and holds U locks on them until the delete is performed (via the SCROLL_LOCKS required cursor option).

Final Thoughts

The API cursor extended stored procedures were largely undocumented back in the SQL Server 2000 days. There were no detailed documentation pages for the procedures. When they were mentioned, it was alongside warnings about only being intended for use in OLEDB, ODBC, or DB-Library applications via library facilities. They were not to be called directly from applications.

The situation has changed over the years, with fairly detailed documentation for each extended stored procedure now available. The documentation isn’t always comprehensive or even correct in every detail, but that’s hardly unique to API cursors.

Still, some people have reservations about using API cursors from T-SQL. It’s certainly an unusual and somewhat advanced approach that won’t suit everyone.

Writing your own batching code is certainly possible. API cursors appeal to me because SQL Server takes care of ensuring the batches are split correctly. This is not so difficult to achieve manually when the underlying table has a suitable unique index, but it can become challenging to write correctly and efficiently when the index has multiple columns or is non-unique.

Interestingly, the dynamic API cursor shown in this article functions correctly even when the target table is a heap with no indexes at all. The cursor batches deletes using RID bookmarks, which is not a practical proposition when using manual batching because we cannot seek to an RID.

One can always create a temporary index for batching purposes—ONLINE if your edition permits it—but batching unindexed tables without additional effort is a unique API cursor capability.

Thanks for reading.