Parameter Sensitive Plan Optimization Thresholds

This article was originally published on 𝕏.

Introduction

One of the frustrations with the new Parameter Sensitive Plan Optimization (PSPO) feature in SQL Server 2022 is getting it to kick in at all when testing it out.

The official documentation does a pretty good job of listing the goals and limitations of this Intelligent Query Processing feature, but it doesn’t document many of the thresholds, presumably because they want to be able to change these in future without preserving backward compatibility.

Fair enough, and I wouldn’t expect them to detail every last internal knob and switch setting either. That said, hidden thresholds don’t help with diagnosing why a particular query isn’t getting the PSPO treatment when it seems it would benefit.

There are a couple of Extended Events available, but I have not found these to be fully reliable and there is very little documentation, as usual. You might get one of the failure reasons listed in sys.dm_xe_, but it’s not always obvious what they mean.

SELECT map_value

FROM sys.dm_xe_map_values

WHERE [name] = N'psp_skipped_reason_enum'

ORDER BY map_key;One you’ll see quite often is SkewnessThresholdNotMet. The documentation does talk a bit about how you can affect ‘skewness thresholds’ by tweaking the cardinality estimation model, but it doesn’t say what skewness is or what magic numbers are involved.

This isn’t going to be a very long or comprehensive article, but I do want to explore a few undocumented thresholds and show perhaps the simplest possible example of a query that does trigger PSPO.

Simplicity

First, the simple example.

The script below creates a heap table with no indexes and a single nullable integer column creatively named i. One row has the value 69. A further 100,000 rows contain the number 420. The i values aren’t important.

DROP TABLE IF EXISTS dbo.Skewed;

GO

SELECT i = CONVERT(integer, 69)

INTO dbo.Skewed

UNION ALL

SELECT CONVERT(integer, 420)

FROM GENERATE_SERIES(1, 100 * 1000);The code below can be used to trigger PSPO:

DECLARE

@SQL nvarchar(4000) =

N'

SELECT COUNT_BIG(*)

FROM dbo.Skewed AS S

WHERE S.i = @i;

',

@ParamDef nvarchar(4000) =

N'@i integer';

EXECUTE sys.sp_executesql

@SQL,

@ParamDef,

@i = 69;

EXECUTE sys.sp_executesql

@SQL,

@ParamDef,

@i = 420;You could use a stored procedure with a formal parameter instead if you prefer that to dynamic SQL.

Multi-plan

In any case, the example produces a dispatcher multi-plan. The first invocation gets query variant ID #1, and the second gets ID #3.

If you run the whole setup again, but with only 99,999 rows for the second value, PSPO will not occur, and you’ll just get an ordinary plan.

One of the undocumented thresholds is that the frequency ratio of the most and least common values in the associated statistics must be at least 100,000.

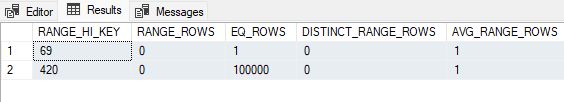

DBCC SHOW_STATISTICS (N'dbo.Skewed', i) WITH HISTOGRAM;

If the least common value in the statistics had EQ_ROWS of 16, we would need the most common value to have EQ_ROWS of at least 1.6 million (100,000 times larger).

Otherwise, you’ll see SkewnessThresholdNotMet in the Extended Event parameter_.

All this assumes full scan statistics. The usual adjustments are made if the statistics are sampled or there have been changes to the table since statistics were gathered. Nevertheless, the factor of 100,000 is currently hard coded as the minimum frequency ratio that must be met to engage PSPO.

Here’s the setup code and plan for the 99,999-row test run that does not get PSPO:

DROP TABLE IF EXISTS dbo.Skewed;

GO

SELECT i = CONVERT(integer, 69)

INTO dbo.Skewed

UNION ALL

SELECT CONVERT(integer, 420)

FROM GENERATE_SERIES(1, 100 * 1000 - 1); -- 99,999 rows now

Trivial

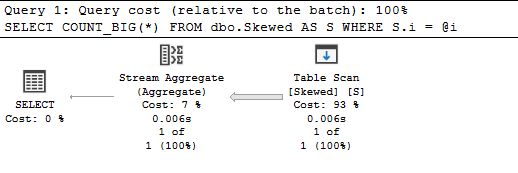

The other thing I like about that example is that it produces a trivial plan!

Both “query variants” get exactly the same plan because there are no cost-based choices to make and only one plan is possible. It’s funny really. If three variants were possible in the example, they would all be a copy of the same trivial plan.

PSPO should probably exclude trivial plans that cost less than the parallelism threshold value, but that isn’t the case today (SQL Server 2022 CU17, January 2025).

Predicate Ranges

You can have up to three plan variants per parameter. Each variant covers a range of value frequencies.

Note: The column data type and particular values involved aren’t important. The important aspect is how often each value occurs in the ‘best’ statistics histogram for the column concerned, as chosen by the query optimizer.

The current design is extremely conservative, being primarily concerned with very uncommon (variant #1) or unusually common (variant #3) values. Everything else gets plan variant #2.

“Unusually common” means anything with more than 10 values, where x is the frequency of the most common entry in the statistics. This is most easily visualised as rounding down to the nearest power of ten.

“Very uncommon” is computed in the same way, taking the least common value as its input, with a minimum threshold of 100.

If there are more than three candidate parameter comparisons, SQL Server chooses the three with the highest skewness ratio. More precisely, it chooses the ones with the highest LOG2 of the ratio. This is a fairly common programming trick when the goal is to compare magnitudes, while avoiding overflows and general floating-point weirdness.

There are indications that SQL Server might use a more sophisticated algorithm in future based on (an approximation of) statistical variance, but it doesn’t seem to be used at the moment.

Syntax issues

The current implementation looks for equality comparisons between a column with base statistics and a parameter marker. It doesn’t literally parse the query text, but you could be forgiven for thinking it did.

Recreate the example table with 100,000 rows for the second value and run this slightly modified version:

DECLARE

@SQL nvarchar(4000) =

N'

SELECT COUNT_BIG(*)

FROM dbo.Skewed AS S

WHERE @i = S.i;

',

@ParamDef nvarchar(4000) =

N'@i integer';

EXECUTE sys.sp_executesql

@SQL,

@ParamDef,

@i = 69;

EXECUTE sys.sp_executesql

@SQL,

@ParamDef,

@i = 420;You won’t see PSPO with that code, because the parameter marker is on the left of the equality comparison!

If you want PSPO, you’ll need to compare a column with a parameter and not vice versa. Funny.

Non-Parameter Predicates

It’s quite common that the parameter-sensitive query will have other predicates that do not reference a parameter. How do these affect PSPO?

If the other predicates are connected to the parameter-sensitive ones by OR, there is no impact. The reasoning here appears to be that a disjunction can never reduce the number of rows qualified by the sensitive predicate. To that extent, other predicates don’t affect whether the sensitive parameter should qualify for PSPO or not.

If the other predicates are conjunctions (connected by AND), they might significantly reduce the number of rows returned. The current version of SQL Server (2022 CU17, January 2025) appears to account for this by computing a cardinality estimate for the non-parameter predicates alone, and disabling PSPO if the resulting cardinality is less than 100,000 rows (there’s that magic number again).

Demo

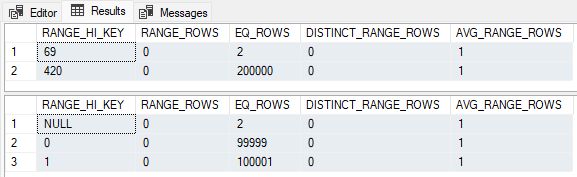

The following variation creates a table with two rows where i = 69 and 200,000 rows where i = 420. This meets the 100,000:1 ratio requirement for an equality parameter predicate on column i.

DROP TABLE IF EXISTS dbo.Skewed;

GO

SELECT

i = CONVERT(integer, 69),

j = CONVERT(integer, NULL)

INTO dbo.Skewed

FROM GENERATE_SERIES(1, 2)

UNION ALL

SELECT

i = CONVERT(integer, 420),

j = IIF

(

GS.[value] < 100 * 1000,

0,

1

)

FROM GENERATE_SERIES(1, 2 * 100 * 1000) AS GS;There is also a second column j, which has two null rows, 99,999 rows where j = 0, and 100,001 rows where j = 1. The statistics are shown below (column i first, j second):

PSPO not used

The PSPO test code is:

DBCC FREEPROCCACHE;

GO

DECLARE

@SQL nvarchar(4000) =

N'

SELECT

c = COUNT_BIG(*)

FROM dbo.Skewed AS S

WHERE

S.i = @i

AND S.j = 0; -- NEW!

',

@ParamDef nvarchar(4000) =

N'@i integer';

EXECUTE sys.sp_executesql

@SQL,

@ParamDef,

@i = NULL;Notice that the test on column j does not use a parameter, so it cannot qualify for PSPO. We can pass any value we like for parameter @i.

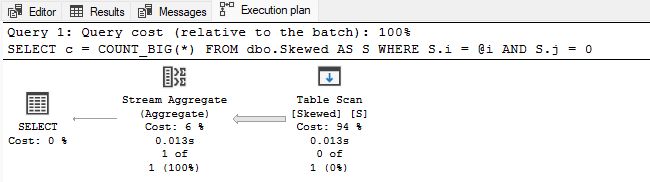

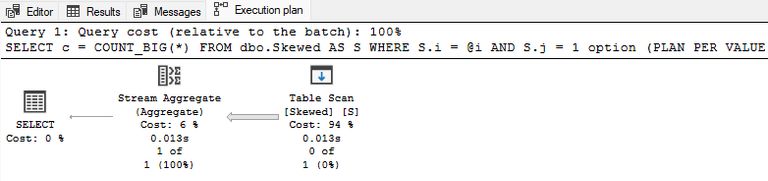

The fact that only 99,999 rows match the predicate j = 0 means that the 100,000 row threshold for the conjuction test is not met, and PSPO is not engaged:

PSPO used

Let’s run the test again with j = 1.

DBCC FREEPROCCACHE;

GO

DECLARE

@SQL nvarchar(4000) =

N'

SELECT

c = COUNT_BIG(*)

FROM dbo.Skewed AS S

WHERE

S.i = @i

AND S.j = 1; -- CHANGED!

',

@ParamDef nvarchar(4000) =

N'@i integer';

EXECUTE sys.sp_executesql

@SQL,

@ParamDef,

@i = NULL;The j = 1 predicate now qualifies at least 100,000 rows so this doesn’t prevent PSPO (note the PSPO option hint):

In general, there might be more than one non-parameter predicate. I used a single equality test on j here for ease of explanation.

The optimizer will estimate cardinality for the conjunctive non-parameter predicates only using its normal techniques. Whether this estimate meets the 100,000-row threshold or not can be affected by settings (e.g. CE model) and hints (e.g. ASSUME_).

The AND test for non-parameter predicates can be disabled with undocumented global trace flag 13551. All the other PSPO tests and thresholds continue to apply when this flag is active.

Compatibility

It is documented that PSPO is only functional at a compatibility level of 160 or above. If set lower, the reason code is CompatLevelBelow160.

This can be relaxed (on SQL Server 2022 only) so that PSPO can occur at any compatibility level with undocumented global trace flag 13550. The flag is not effective at session level, sadly.

Thinking time

The final undocumented threshold I want to mention is the limit of 1000ms on the total compilation time for the statement’s plan. If that is exceeded, you’ll see a skip reason of CompilationTimeThresholdExceeded.

To repeat, these are all undocumented behaviours and thresholds that could change without notice or documentation. I provide them in case they are of interest or help you understand why you’re not getting PSPO when it seems you should.

Final Thoughts

There are plenty of other limitations with this initial (very conservative) implementation, but those have been written about already. Perhaps we will see a more complete solution in SQL Server 2025.

Thanks for reading.