Can a SQL Server Execution Plan Operator Produce More Batches than Rows?

This article was originally published on 𝕏.

I was asked this question recently. The answer is yes.

It sounds counterintuitive, doesn’t it? SQL Server batch mode operators produce batches containing between 64 and 900 rows. How then can a batch mode operator produce more batches than rows?

Demo

Starting with a vanilla copy of the Stack Overflow 2013 database on SQL Server 2022 CU 16 Developer Edition:

EXECUTE dbo.DropIndexes;

GO

ALTER DATABASE StackOverflow2013

SET COMPATIBILITY_LEVEL = 160;

GO

CREATE NONCLUSTERED COLUMNSTORE INDEX n

ON dbo.Users (CreationDate, Id);

CREATE NONCLUSTERED COLUMNSTORE INDEX n

ON dbo.Posts (OwnerUserId, ClosedDate, Id);

GO

SELECT

c = COUNT_BIG(U.Id + P.Id)

FROM dbo.Users AS U

JOIN dbo.Posts AS P

ON P.OwnerUserId = U.Id

AND P.ClosedDate <= U.CreationDate

OPTION

(

MAXDOP 1

);That query happens to produce a small number of rows (20) using an execution plan that features a batch mode hash join. There’s no particular trickery in the query. Points of note:

- I’m using columnstore indexes as a convenient way to get batch execution.

- The

COUNTexpression prevents early aggregation (before the join). - The

MAXDOPhint is there to play around with as you like. The demo doesn’t depend on getting a serial or parallel plan; the behaviour is the same.

Execution plan

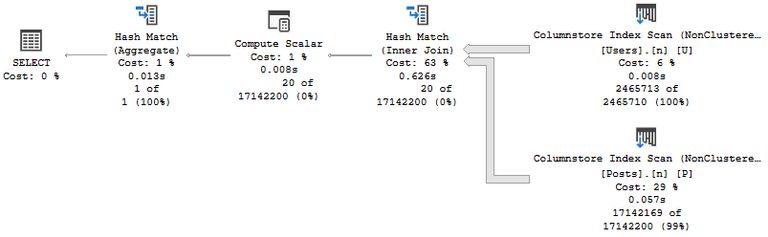

The post-execution (‘actual’) plan is pretty much as you’d expect. All the operators are batch mode. The interesting part is in the number of batches and rows produced by the join:

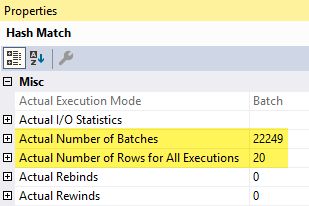

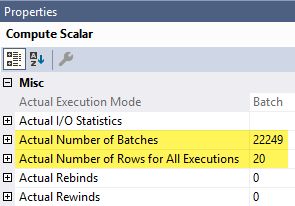

The hash join produced 20 rows in 22,249 batches. How is this possible?

Explanation

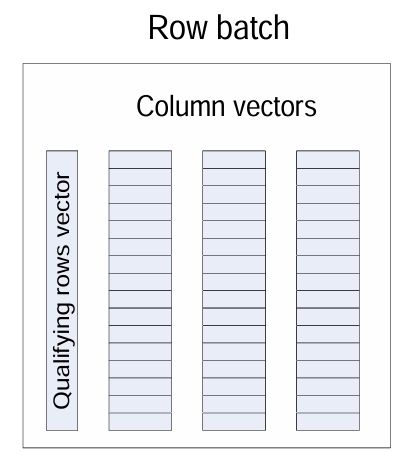

Batches are initially as full as possible when created. They contain a vector for each column or expression result and a qualifying rows vector.

The qualifying rows vector indicates if a row is logically valid or not. Filtering out rows involves changing the status of a bit in this vector. This is much cheaper than constructing a new batch containing just the unfiltered rows.

Filtering

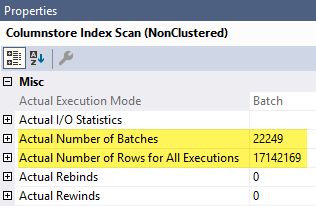

The hash join in the example query has a filtering effect on batches produced by the scan of the Posts table. We can see these batches by looking at the properties of the Posts scan.

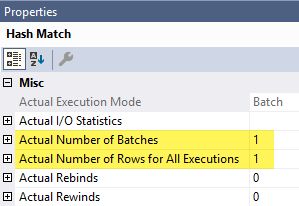

Notice that 22,249 batches are created containing 17,142,169 rows (an average of 770.5 rows per batch).

Any rows that do not join with the Users table are filtered out at the Hash Join by updating the qualifying rows vector. Very few rows end up joining, so most of the rows are logically not present. The original batches still exist; it’s just that many of them no longer have any qualifying rows.

As a result, we see 20 valid rows in 22,249 batches. There are still 17,142,169 rows in the batches overall.

The situation is the same at the Compute Scalar after the join:

The final hash aggregate has an incompatible output, so new batches are created:

Final Thoughts

In general, SQL Server only constructs new batches when it absolutely has to. Batch mode plans are split into regions that share a common batch structure including projected columns and computed expressions. This arrangement makes the code faster and simpler, allowing SIMD instructions for example.

As a side note, the hash join can produce more batches than it receives. This occurs when the operator spills. New batches are needed as part of the spill processing.

Thanks for reading.