A Small Sample of SQL Server Chaos

This article was originally published on 𝕏.

Background

Since SQL Server indexed views don’t allow MIN or MAX aggregates, I recently found myself writing a trigger instead. The trigger’s job was to keep a summary table in sync with a source query (which featured a MAX aggregate).

There’s a cost to running a trigger after every insert, update, or delete (with up to three trigger invocations per merge statement) but fast access to the summary data was worth it in this case. Though a trigger is a bit more expensive than the inline materialised view maintenance automatically added to the source statement’s execution plan by SQL Server, efficient trigger code and good indexing can help with the performance aspect (as always).

Fast code is great of course, but it’s also important the results are correct. I’ve written quite a few indexed view substitute triggers over the years, but not frequently enough to be sure I’ve covered every eventuality after my first effort. Basic tests involving various base table data modifications provide a baseline level of comfort, but consistency problems can still arise with a large number of different concurrent modifications.

Validating trigger correctness under high concurrency typically involves generating a large number of pseudorandom data changes executing from many different connections at the same time using multiple instances of a tool like SQL Query Stress. If the simulated indexed view contents exactly match the base table data after such tests, one can be reasonably confident the code is correct. Cross-table constraints would be an even better guarantee, but Microsoft haven’t delivered that feature yet.

Test Results

Initial testing went well, which was a pleasant surprise. Soon enough though, errors started to appear in the tool’s output. That’s not entirely unexpected since ensuring consistent results under high concurrency tends to expose all sorts of niggly edge cases. It’s still an annoyance because debugging edge cases in trigger code can be tricky and laborious.

What was a surprise though was the nature of the error messages. Here’s an example:

Msg 8705, Level 16, State 1, Line 7

A DML statement encountered a missing entry in index ID 1

of table ID 1009438670, in the database 'Sandpit' due to an

interaction with another transaction.

If you continue to see this error, consider using Repeatable Read

or higher isolation level.

The statement has been terminated.I was fully expecting a unique index key violation error or something of that sort. A message about a missing index entry was quite unexpected.

My first thought was database corruption, so even though DBCC CHECKDB reported no errors, I dropped the database, restarted the instance, and recreated everything from scratch. The same errors kept appearing.

I won’t bore you with every avenue I pursued to track down the source of the problem, not least because this article would become a medium-length book. Let’s look at a simple reproduction instead.

Demo 1: Nonclustered Index

I won’t need a complicated setup or multiple SQL Query Stress instances to show you the problem. Just a simple table and two SSMS query windows will suffice. The following script will produce the scary error in the second session:

IF OBJECT_ID(N'dbo.Chaos', 'U') IS NOT NULL

BEGIN

DROP TABLE dbo.Chaos;

END;

GO

CREATE TABLE dbo.Chaos

(

id integer NOT NULL

INDEX i NONCLUSTERED,

PRIMARY KEY (id)

);

GO

-- Session 1

-- Insert a row, leaving the transaction open

BEGIN TRANSACTION;

INSERT dbo.Chaos (id)

VALUES (1);

-- Session 2 (will block)

DELETE C

FROM dbo.Chaos AS C

TABLESAMPLE SYSTEM (99.999996 PERCENT);

-- Session 1

-- This causes the error in session 2

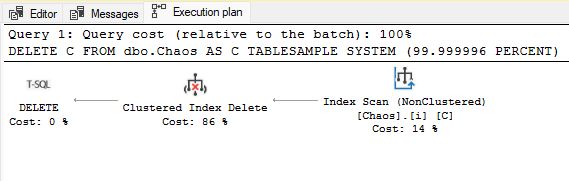

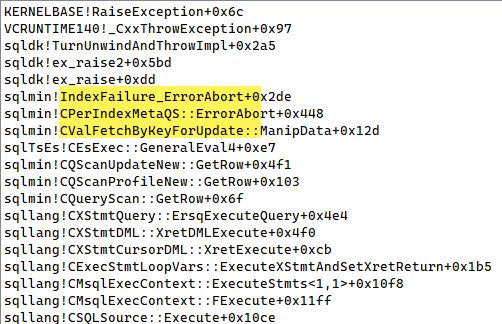

ROLLBACK TRANSACTION;The execution plan for the DELETE statement looks like this:

Note that the second session will block under the default read committed isolation level whether you have read committed snapshot isolation (RCSI) enabled or not. SQL Server doesn’t use versions under RCSI when locating rows to update (but see the Closing Remarks section for an exception).

I’ll show a second demo later without a nonclustered index on the table, but this is a good place to start.

TABLESAMPLE

The obvious curiosity is the TABLESAMPLE clause on the DELETE statement. TABLESAMPLE was introduced in SQL Server 2005. It’s currently only lightly documented on the FROM clause plus JOIN, APPLY, PIVOT (Transact-SQL) page. There’s a little more detail on the dedicated archived documentation page Limiting Result Sets by Using TABLESAMPLE, but it omits some very important details.

I used TABLESAMPLE in my trigger testing because I wanted a quick way to delete a small but variable number of rows in an unpredictable way from multiple concurrent threads. The sampling percentage was 0.5 PERCENT in my testing. I’ve used a number close to 100% in the reproduction to make the error almost certain to occur.

Speaking of the PERCENT value, the current documentation incorrectly states that this is implicitly converted to a float value. It’s not a float, it’s a real. That’s the reason I used the value 99.999996 in the script. Anything even fractionally larger can’t be represented as a real and gets rounded to 100. Specifying 100 PERCENT with TABLESAMPLE results in the clause being ignored completely. Values closer to 100 can be represented as a float.

The issue only reproduces with a TABLESAMPLE percentage less than 100 (and greater than zero). Clearly, the clause is responsible for the error. The question is, why?

Gross weirdness

The TABLESAMPLE clause has some extremely strange locking behaviour. One might even say it has invented its own isolation level. I’m going to call it Chaos, even though that isolation level already exists (but not in SQL Server or the SQL Standard) with different semantics and is almost never used.

Ok, just because someone is bound to ask otherwise: The historical chaos isolation level allows all the behaviours of read uncommitted isolation (notably dirty reads). It also allows dirty writes, but only against other transactions running at the chaos isolation level. A dirty write occurs when a transaction overwrites an uncommitted change made by a different transaction. As a consequence, transactions at the chaos isolation level do not support rollbacks.

All that is for curiosity; it’s not the same behaviour as TABLESAMPLE, which I will describe next.

Locks and versions

When TABLESAMPLE reads from a heap, everything runs normally in accordance with the current isolation level.

When TABLESAMPLE reads from a b-tree index (clustered or nonclustered) it does not issue or respect any PAGE or KEY locks. This includes the case of a nonclustered index on a heap. Table level locks are not affected.

The one exception to that (astonishing) rule for KEY locks is when serializable isolation is requested. In that case, and if a range lock is actually needed, TABLESAMPLE takes a single range lock on the special infinite key value and the range down to (but excluding) the highest key value on the page. The net effect is that the range above the highest index key on the page is locked, but nothing else. This is a nonsense outcome and does not correctly implement serializable semantics. Nevertheless, it’s what SQL Server does.

When the isolation level uses row versions (RCSI or SNAPSHOT), the TABLESAMPLE clause accesses the correct version of each row as expected. Remember though that versions are not used under RCSI when locating rows to modify (except Azure SQL Database, see Closing Remarks). In that event, the TABLESAMPLE behaviour is the same as under locking read committed, with PAGE and KEY locks skipped. There is no indication in execution plans of when an operator might not use versioning under RCSI.

I don’t know what else aside from ‘chaos’ one could call an isolation level that functions correctly on heaps but skips PAGE and KEY locks on index pages. Never mind that it also improperly implements serializable, when that is requested.

Revisiting Demo 1

With that information in hand, run the first part of the script again:

IF OBJECT_ID(N'dbo.Chaos', 'U') IS NOT NULL

BEGIN

DROP TABLE dbo.Chaos;

END;

GO

CREATE TABLE dbo.Chaos

(

id integer NOT NULL

INDEX i NONCLUSTERED,

PRIMARY KEY (id)

);

GO

-- Session 1

-- Insert a row, leaving the transaction open

BEGIN TRANSACTION;

INSERT dbo.Chaos (id)

VALUES (1);In session 2, select from the table using TABLESAMPLE and a REPEATABLE READ isolation level hint (as the error message recommended):

-- Session 2

-- (will not block!)

SELECT

C.id

FROM dbo.Chaos AS C

TABLESAMPLE SYSTEM (99.999996 PERCENT)

WITH (REPEATABLEREAD);This returns the uncommitted row inserted by session 1, despite our instruction to use repeatable read isolation! This happens because index reads with TABLESAMPLE do not issue or respect PAGE or KEY locks.

Running the same statement without the TABLESAMPLE clause will block, waiting to acquire a shared (S) lock on the inserted row, which is protected by an exclusive (X) lock. This is the correct and expected behaviour.

The delete

Let’s also revisit the DELETE plan:

The read by the nonclustered Index Scan operator does not block (as it should) due to the TABLESAMPLE clause. No lock is taken, and it immediately returns the (uncommitted) id column value to the Clustered Index Delete operator. The id is the index row locator that will be used to perform the delete.

The Clustered Index Delete attempts to acquire an update (U) lock on the index id key value (as is normal when locating a row to change). The U lock will be converted to an X lock before any changes are made and logged.

The requested U lock is incompatible with the X lock held by session 1, so session 2 blocks at this point.

The rollback and the error

When the ROLLBACK TRANSACTION command is executed in session 1, the row insertion is rolled back, and the X lock is released. The Clustered Index Delete in session 2 is now able to acquire its U key lock. It then seeks into the clustered index b-tree to locate the row it has been asked to delete.

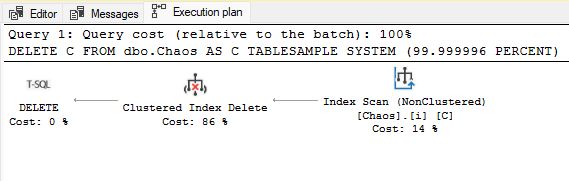

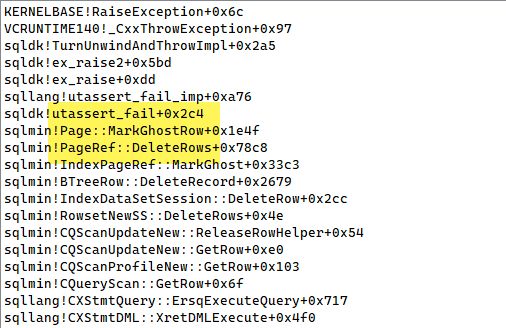

This is the action that fails because the row no longer exists (it is marked as a ghost by the rollback). The call stack at this stage is:

SQL Server interprets this unexpected event as a failure in the index:

Msg 8705, Level 16, State 1, Line 7

A DML statement encountered a missing entry in index ID 1

of table ID 1009438670, in the database 'Sandpit' due to an

interaction with another transaction.

If you continue to see this error, consider using Repeatable Read

or higher isolation level.

The statement has been terminated.One might argue that attempting to delete a non-existent row isn’t an error, but that’s not how SQL Server sees it. The execution plan claimed to have located a row that needed deleting. That row should have been locked (or otherwise protected under SNAPSHOT isolation) to prevent it disappearing before the delete. It simply shouldn’t happen.

It’s quite interesting that SQL Server treats this as a minor error. Only the statement is terminated. Clearly, it’s less serious than a data type conversion error, which terminates the whole batch and any open transaction.

The above error message was added in SQL Server 2019. Running the same reproduction script in SQL Server 2017 produces a more dramatic outcome:

Msg 8646, Level 21, State 1, Line 20

Unable to find index entry in index ID 1, of table 1205579333,

in database 'Sandpit'.

The indicated index is corrupt or there is a problem with the current

update plan.

Run DBCC CHECKDB or DBCC CHECKTABLE.

If the problem persists, contact product support.

Msg 596, Level 21, State 1, Line 19

Cannot continue the execution because the session is in the kill state.

Msg 0, Level 20, State 0, Line 19

A severe error occurred on the current command.

The results, if any, should be discarded.A SQL Server stack dump is generated, and the error log contains the message “CPerIndexMetaQS::ErrorAbort - Index corruption”, which you might recognise from the stack trace. The connection is terminated. Seems like an important error, after all.

Adding a REPEATABLEREAD table hint to the DELETE does not change the outcome of course.

Demo 2: Clustered Index Only

This second example shows a similar problem occurring on a clustered table with no additional nonclustered index:

IF OBJECT_ID(N'dbo.Chaos', 'U') IS NOT NULL

BEGIN

DROP TABLE dbo.Chaos;

END;

GO

CREATE TABLE dbo.Chaos

(

id integer PRIMARY KEY

);

GO

-- Session 1

-- Insert a row, leaving the transaction open

BEGIN TRANSACTION;

INSERT dbo.Chaos (id)

VALUES (1);

-- Session 2

-- (will not block!)

SELECT

C.id

FROM dbo.Chaos AS C

TABLESAMPLE SYSTEM (99.999996 PERCENT)

WITH (SERIALIZABLE);

-- Session 2

-- (will block)

DELETE C

FROM dbo.Chaos AS C

TABLESAMPLE SYSTEM (99.999996 PERCENT)

WITH (SERIALIZABLE);

-- Session 1

-- This causes the error in session 2

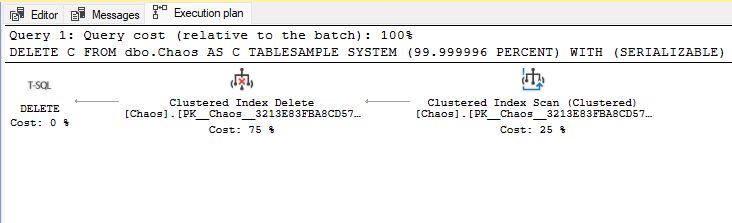

ROLLBACK TRANSACTION;It’s very similar, just with an extra step to show we can read the uncommitted data using TABLESAMPLE even when a SERIALIZABLE hint is used.

Analysis

The execution plan is:

The DELETE blocks for a slightly different reason here. Instead of a nonclustered index returning clustered index keys, the Clustered Index Scan and Clustered Index Delete use rowset sharing. There is no seek at the delete operator to locate the row to delete. We already have the row directly from the prior scan operator (the rowset is ‘shared’). This is an optimisation to save an unnecessary b-tree traversal (and taking a U lock).

The Clustered Index Delete isn’t affected by the TABLESAMPLE, so it doesn’t skip locks. It is blocked trying to acquire the KEY X lock needed to modify the target row. When the ROLLBACK occurs in session 1, the Clustered Index Delete acquires the X lock and attempts to mark the row as a ghost.

Unfortunately, the row is already a ghost due to the rollback. Attempting to mark a ghosted row as a ghost is unexpected, and a retail assertion failure occurs:

Location: Sql\Ntdbms\storeng\drs\include\fixedvarrecord.inl:3779

Expression: FALSE

SPID: 52

Process ID: 29820

Thread ID: 28852

Description: Invalid switch value

Msg 3624, Level 20, State 1, Line 22

A system assertion check has failed.

Check the SQL Server error log for details.

Typically, an assertion failure is caused by a software bug

or data corruption.

To check for database corruption, consider running DBCC CHECKDB.

If you agreed to send dumps to Microsoft during setup,

a mini dump will be sent to Microsoft.

An update might be available from Microsoft in the latest Service Pack

or in a Hotfix from Technical Support.

Msg 596, Level 21, State 1, Line 21

Cannot continue the execution because the session is in the kill state.

Msg 0, Level 20, State 0, Line 21

A severe error occurred on the current command.

The results, if any, should be discarded.No friendly error message this time (even on SQL Server 2022 CU14). A stack dump is created, and the connection is terminated.

The stack trace is:

Closing Remarks

The TABLESAMPLE clause has truly bizarre behaviour that only appears to be safe on heap tables (as long as you don’t touch a nonclustered index) or under full SNAPSHOT isolation, though I have not tested either situation extensively. RCSI seems safe when reading but versions are not used under RCSI when locating rows to modify so the dangers are the same as under non-versioned isolation levels there.

Please vote on my Microsoft feedback:

Retail assertion and errors with TABLESAMPLE on a DML target

Note: The situation is different when Optimized Locking is in use. The TID locks taken are not skipped by TABLESAMPLE. Furthermore, U locks are not taken when searching for rows to modify when lock after qualification (LAQ) is used. In these circumstances, the problems noted in this article do not arise. At the time of writing, Optimized Locking is only available in Azure SQL Database.

I have only scratched the surface in this article. The TABLESAMPLE clause breaks so many fundamental rules and essential internal guarantees that errors are possible almost anywhere in the SQL Server code.

There are good reasons that SQL Server disallows the NOLOCK and READUNCOMMITTED hints on tables that are the target of a modification. If there were a CHAOS hint, it would disallow that as well.

I have presented two similar and easy to demo examples here, but the issues are not limited to a DELETE with a concurrent INSERT. Any modification is at risk, including updates and merges. There may also be cases where a SELECT with TABLESAMPLE will encounter problems in the face of concurrent activity like page splitting.

While testing my indexed view replacement trigger under high concurrency, I encountered many unusual and varied errors, stack dumps, and assertion failures due to TABLESAMPLE across a wide variety of query processor and storage engine components.

TABLESAMPLE really is just horribly broken. If you had never heard of it before today, it might be best to roll back to that save point. You should certainly avoid using it in data modification statements.

Some of you might have wondered early on in this article if the problem was a lack of Halloween Protection. It’s a good thought, but not the case here. In fact, it makes some error scenarios more likely by extending the time between reads and writes. If you’re curious to add an explicit Eager Spool for full phase separation to the plans in this article, you can use undocumented trace flag 8692. It won’t fix the issue of course, but it may expose new and interesting exceptions especially when paired with the weird and ineffective implementation of SERIALIZABLE provided with TABLESAMPLE.

Allocation ordered scans

One final remark that wasn’t important in the context of this article but might well be in other circumstances: Scans performed with TABLESAMPLE (seeks aren’t possible) always use an allocation ordered scan, not an index ordered scan.

If you look at the properties of the data reading operators in the demo plans, you’ll see they have the Ordered: True property. That cannot be guaranteed with an allocation order scan. If a downstream operator depends on ordered input, more errors, stack dumps, and assertion failures may await. Or maybe you’ll get unlucky and silent database corruption will occur.

Thanks for reading.