A Nonclustered Index Update Disaster

This article was originally published on 𝕏.

Introduction

Update execution plans are not something the T-SQL statement writer has much control over. You can affect the data reading side of the plan with query rewrites and hints, but there’s not nearly as much tooling available to affect the writing side of the plan.

Update processing can be extremely complex and reading data-changing execution plans correctly can also be difficult. Many important details are hidden away in obscure and poorly documented properties, or simply not present at all.

In this article, I want to you show a particularly bad update plan example. It has value in and of itself, but it will also give me a chance to describe some less well-known SQL Server details and behaviours.

Demo Setup

To run this demo, I recommend using a test SQL Server instance with 8GB RAM and at least 4GB of log space. Any version from 2008 to 2022 will do, but a small code adjustment is needed before SQL Server 2016, as will be noted at the appropriate stage. The first step is to create a test database:

USE master;

GO

CREATE DATABASE Sandpit;

GO

ALTER DATABASE Sandpit SET

RECOVERY SIMPLE;

GO

ALTER DATABASE Sandpit

MODIFY FILE

(

NAME = N'Sandpit_log',

SIZE = 4GB,

FILEGROWTH = 64MB

);The demo uses a single table with 50,000 rows containing a few simple columns plus a pretty long string. This string represents LOB storage such as might be used for XML, JSON, or general text in a real implementation. For example, the string is about the same length as the maximum size of a Stack Overflow question or answer.

USE Sandpit;

GO

CREATE TABLE dbo.U

(

ID bigint IDENTITY NOT NULL,

SomeKey bigint NOT NULL,

LongString nvarchar(max) NOT NULL,

CONSTRAINT [PK dbo.U ID]

PRIMARY KEY CLUSTERED (ID)

);

INSERT dbo.U

WITH (TABLOCKX)

(

SomeKey,

LongString

)

SELECT TOP (50 * 1000)

SomeKey = 10 * ROW_NUMBER() OVER (ORDER BY @@SPID),

LongString = REPLICATE(CONVERT(nvarchar(max), N'X'), 32770)

FROM sys.all_columns AS AC1

CROSS JOIN sys.all_columns AS AC2

ORDER BY

SomeKey

OPTION (MAXDOP 1);

CHECKPOINT;Populating the demo table should only take a few seconds. Since this is an article about nonclustered index updates, we’ll also need a nonclustered index:

CREATE NONCLUSTERED INDEX

[IX dbo.U SomeKey (LongString)]

ON dbo.U

(SomeKey)

INCLUDE

(LongString)

WITH (MAXDOP = 1);

CHECKPOINT;This non-unique nonclustered index is keyed on the SomeKey column and includes the LongString column at the leaf level. Again, building the index should take only a few seconds.

Test 1: A Simple Update

As a baseline, we’ll update 30,000 rows by decrementing the SomeKey value, recording I/O statistics and the amount of transaction log generated:

BEGIN TRANSACTION;

SET STATISTICS IO ON;

UPDATE dbo.U SET

SomeKey -= 1

WHERE

ID BETWEEN 10000 AND 39999

OPTION

(MAXDOP 1);

SET STATISTICS IO OFF;

SELECT

LogRecords =

FORMAT(T.database_transaction_log_record_count, N'N0'),

LogBytes =

FORMAT(T.database_transaction_log_bytes_used, N'N0'),

LogBytesReserved =

FORMAT(T.database_transaction_log_bytes_reserved, N'N0')

FROM sys.dm_tran_current_transaction AS C

JOIN sys.dm_tran_database_transactions AS T

ON T.transaction_id = C.transaction_id

WHERE

T.database_id = DB_ID();

COMMIT TRANSACTION;

CHECKPOINT;Remove the FORMAT calls if you’re using SQL Server 2008R2 or earlier. They’re only there to make the displayed numbers a bit easier to read.

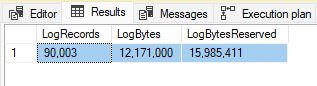

Test 1 results

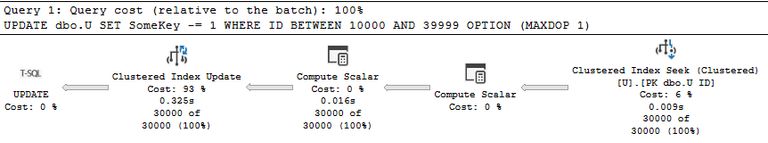

The execution plan is very simple and takes around 325ms to run:

I/O statistics report 178,517 logical reads:

Table 'U'. Scan count 1,

logical reads 178,517, physical reads 0, read-ahead reads 0,

lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

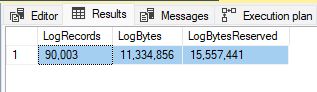

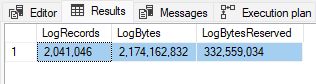

(30000 rows affected)The update generates about 90k transaction log records, just under 11MB in size with an additional log undo reservation of almost 15MB:

Nothing out of the ordinary so far.

Test 2: Adding a Hash Code

To make it faster to find particular records, we now decide to add a hash code for the long string to the nonclustered index key. You might also do this sort of thing as a quick way to detect data changes.

The following code adds a non-persisted computed column for the new hash code to the base table, drops the existing nonclustered index, and replaces it with a new one having the hash code as part of the key. If you’re using SQL Server 2014 or earlier where HASHBYTES is limited to 8,000 bytes of input, use CHECKSUM or BINARY_CHECKSUM instead.

ALTER TABLE dbo.U

ADD HashCode AS

ISNULL

(

CONVERT

(

binary(16),

HASHBYTES

(

'MD5',

LongString

)

),

0x

);

DROP INDEX

[IX dbo.U SomeKey (LongString)]

ON dbo.U;

CREATE NONCLUSTERED INDEX

[IX dbo.U SomeKey, HashCode (LongString)]

ON dbo.U

(SomeKey, HashCode)

INCLUDE

(LongString)

WITH (MAXDOP = 1);

CHECKPOINT;Adding the computed column is an instant metadata-only operation, but building the new nonclustered index with the hash code takes about eight seconds on my laptop. Note the hash code is persisted in the nonclustered index but not the base table.

Test 2 results

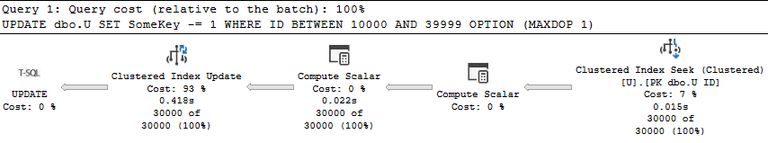

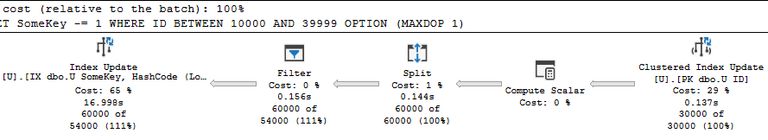

Running the script from test 1 again produces very different results. The execution plan is much more complicated and runs for 17 seconds:

I/O statistics report a slightly higher number of logical reads, but a huge number of LOB reads where there were none before:

Table 'U'. Scan count 1,

logical reads 191,771, physical reads 0, read-ahead reads 31,

lob logical reads 3,570,000, lob physical reads 0, lob read-ahead reads 240,000.

(30000 rows affected)Transaction log activity has also exploded to 2 million log records (up from 90k), over 2GB of log space (up from 11MB), and an additional 317MB of log reservation (up from 15MB).

Leaving aside the performance drop, the logging explosion would obviously be a concern in any environment limited by log throughput or where log records need to be replicated over a network or the internet.

Fixing The Problem

Before I talk about what caused the dramatic change in behaviour, let’s quickly fix the issue by persisting the computed column in the base table:

ALTER TABLE dbo.U

ALTER COLUMN HashCode

ADD PERSISTED;

CHECKPOINT;That takes about five seconds to run because it needs to compute all the hash codes afresh and write them into the base table. Once completed, running the test script again returns performance to close to its original levels. The execution plan also reverts to its simple form:

Table 'U'. Scan count 1,

logical reads 180,459, physical reads 0, read-ahead reads 0,

lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

(30000 rows affected)

Explanation

In test 1, SQL Server chose a narrow (per-row) update plan where the Clustered Index Update operator maintains both the clustered and nonclustered indexes for each row it receives. The change to the clustered index is a simple in-place modification of the existing row because the SomeKey column is not a key column of the clustered index (ID is).

The change to the nonclustered index is more complicated because SomeKey is a key column in that index. Changing a key column means the index row may have to move in the b-tree, so SQL Server implements this as a delete of the existing index row followed by an insert of a new index row.

In principle, deleting the nonclustered index row and inserting a new one ought to be an expensive operation because the index includes the LOB column LongString. So why don’t we see extensive logging and LOB activity here?

A lack of logging

The answer is that the LOB column is stored off-row, in the LOB_DATA allocation unit. Each index row only contains a small in-row blob root structure pointing to the large LOB tree stored elsewhere.

SQL Server can see that the LOB data itself is not being changed by the update, so it can safely copy the small in-row pointer from the old index entry to the new. This optimization means that although the update is physically performed (and logged) as a delete then an insert, the LOB data itself is never rewritten or changed in any way and so doesn’t need to be logged.

Test 2

SQL Server can’t perform the same optimization in the second test due to a limitation. On rowstore tables, a narrow (per-row) update plan cannot be used if a computed column stored in the index requires a scalar computation to be performed. Let me explain that a bit more.

In order to locate the nonclustered index entry to maintain for each updated base table row, the query processor needs the nonclustered index keys. The SomeKey column is available from the base table, but the HashCode computed column is also part of the nonclustered index key and that is not stored in the base table.

So, to find the nonclustered index entry SQL Server would need to evaluate the computed column value. Performing that HASHBYTES (or CHECKSUM) calculation is simply not supported in a narrow (per-row) update plan.

As a result, the optimizer has to fall back to a wide (per-index) update plan. This plan shape is always possible for a rowstore table. Narrow update plans are an optimization available in nearly all situations.

Normally, the optimizer makes a cost-based choice between the narrow and wide alternatives for each index that needs maintenance as part of the overall update statement. Each has benefits in different situations. It’s quite possible to see some nonclustered indexes maintained per-row while others are per-index in the same update plan.

The wide update

In the present case, the wide update plan is the only option available. It is a performance disaster. Here’s the important part of the wide update plan again:

The innocent-looking Compute Scalar operator with zero cost and execution time contains the problematic calculation:

[[Sandpit].[dbo].[U].HashCode] =

Scalar Operator

(

isnull

(

CONVERT

(

binary(16),

hashbytes

(

N'MD5',

[Sandpit].[dbo].[U].[LongString]

),

0

),

0x00000000000000000000000000000000

)

)This calculation is not performed at the indicated position in the execution plan, hence the zero elapsed time. The value it defines is deferred until a later operator requires the result for some purpose. This is generally an optimization to defer computations that are never needed or apply to fewer rows due to the effect of filtering, joining, or aggregation. It’s counterproductive in this case, as we will see.

Split

The Split operator splits the update stream into a delete followed by an insert. Importantly, this means a single update row is turned into two rows, one for a nonclustered index delete and one for an insert. Both rows contain all the information needed to perform the delete or insert.

The Filter operator removes any rows that don’t represent a change to the stored index values (non-updating updates). That doesn’t apply in this case because every row is being modified to change the SomeKey value. Remember we’re dealing with split deletes and inserts now though, so both the delete (old index row) and insert (new index row) pass through the filter.

Index Update

The problem really manifests at the Index Update operator as you can see from the displayed execution time of almost 17 seconds. Despite the name, this operator does not perform any updates. Depending on the value of the action column in the incoming stream, the Index Update operator either deletes an existing nonclustered index row or inserts a new one.

Deleting a nonclustered index row requires the index keys to locate the entry. These are the SomeKey, Hash Code, and ID columns (since the nonclustered index is not unique, the clustered index key is also present). Two of these columns are available from the base table and provided by the Clustered Index Update operator. Note the SomeKey value is different for the delete and insert so the Clustered Index Update emits both SomeKey and SomeKey_OLD in its output list. The Split operator distributes these correctly, so the delete/insert pair correctly implements the original update.

The Index Update operator therefore needs the HashCode value to locate the existing nonclustered index entry to delete. This causes the expression defined by the Compute Scalar to be evaluated for this row. Running HASHBYTES on the long string requires significant computational effort. Once the key value is found, the existing nonclustered index row can be deleted. This is a fully logged operation, including the deleted LOB value.

Worse, the next row received by the Index Update is the corresponding insert to add the updated nonclustered index entry. We’re dealing with a new row now, so the expensive HASHBYTES computation is performed again to locate the index insertion point. Once that’s done, a new nonclustered index entry is constructed, including a new copy of the LOB string. This insert is also fully logged.

Summary

The wide update plan is just a disaster in this example. Remember, test 1 cleverly avoided unnecessary LOB operations in its delete/insert pair by reusing the LOB pointer. The wide update plan computes the expensive hash code twice for each updated row, deletes the old index LOB entry with full logging, then constructs a full LOB copy for the insert and fully logs that as well. No wonder the update took so long to run and generated so much log.

The fix works by persisting the hash code computed column in the base table so the limitation around scalar computations in a narrow update plan no longer applies. The Clustered Index Update operator has the hash code available without performing a computation, so it can directly locate the nonclustered index entry and perform the optimized delete/insert operation (without LOB manipulation) in a narrow update.

The lesson here is not necessarily to always persist computed columns. There are often very good reasons for not doing so. Neither is the message to prefer narrow update plans over wide ones. Update plans are complicated and there are many considerations, some of which I have covered in previous articles (see below).

Thanks for reading.

Related reading

My previous articles: