Don't Mix with Datetime

This article was originally published on 𝕏.

Introduction

Microsoft encourages us not to use the datetime data type:

Avoid using datetime for new work. Instead, use the time, date, datetime2, and datetimeoffset data types. These types align with the SQL Standard, and are more portable. time, datetime2 and datetimeoffset provide more seconds precision. datetimeoffset provides time zone support for globally deployed applications.

Well, ok. Sensible and well-informed people might still choose to use datetime for performance reasons. Common date and time functions have optimised implementations in the SQL Server expression service for the datetime and smalldatetime data types.

The newer data types don’t have this optimized support. They use more general algorithms, often involving a conversion to and from datetimeoffset during intermediate calculations. You can’t usually see these extra steps in execution plans but trust me they are there. If you do heaps of DATEADD or DATEDIFF calculations, you’ll likely be running your own data type performance tests to verify that.

On the other hand, you might choose from among the newer data types for equally good reasons. Perhaps you value smaller storage size, or greater range and precision over raw speed.

Regardless, the point of this article is you should not mix datetime with the newer data and time types. You’ll avoid some quite counterintuitive behaviour and even a bug or two. Let me explain.

Changes in SQL Server 2016

The documented list of Breaking Changes includes:

Under database compatibility level 130, implicit conversions from datetime to datetime2 data types show improved accuracy by accounting for the fractional milliseconds, resulting in different converted values. Use explicit casting to datetime2 datatype whenever a mixed comparison scenario between datetime and datetime2 datatypes exists. For more information, see this Microsoft Support Article.

Unfortunately, the issues aren’t limited to datetime2.

Tick Tock

The datetime documentation says accuracy is:

Rounded to increments of .000, .003, or .007 seconds

The Microsoft Support Article describes the changes under compatibility level 130 when converting from time or datetime2 to datetime as:

Improved precision when you convert to date/time types with higher precision. Be aware that datetime values are stored as ticks that represent 1/300th of a second. The newer time and datetime2 types store a discrete number of digits, where the number of digits matches the precision.

That sounds great. Who doesn’t like improved precision?

The sneaky detail is that datetime values are represented by an integer number of days after 1900-01-01 and the number of ticks after midnight, where a tick is defined as 1/300th of a second.

Before compatibility level 130, the time component was always rounded to .000, .003, or .007 seconds. In compatibility level 130 or above, the exact value is used. One tick is 0.00333… seconds. Two ticks are 0.00666… seconds. Three ticks equal 0.01 seconds.

Notice the decimals in the 3 and 6 cases are infinitely repeating in the final digit because 1/300 and 2/300 are not finitely representable in decimal.

Many datetime values don’t exist

Any datetime value with 3 or 7 displayed in the third decimal place cannot be represented exactly as a datetime2, no matter what precision you choose. The same applies to time and datetimeoffset.

Let me show you an example to illustrate the point:

DECLARE @DT datetime = {TS '1900-01-01 00:00:00.003'};

DECLARE @DT2 datetime2(7) = CONVERT(datetime2(7), @DT);

SELECT TOP (1)

[datetime] = @DT,

[datetime2] = @DT2,

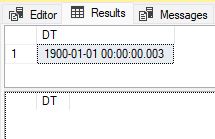

[Match] = IIF(@DT = @DT2, 'Matched', 'Not Matched');![Results under CL120 [top row]and CL130 [bottom row] Results under CL120 (top row) and CL130 (bottom row)](/img/EGVxABnq1m-411.jpeg)

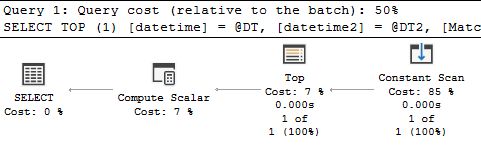

The top row of results was obtained under compatibility level 120. The bottom row ran at compatibility level 130. The TOP (1) is only there so the statement produces an execution plan. Both plans look the same and have the same query plan hash:

However, in the CL120 plan, the Compute Scalar contains:

CASE WHEN CONVERT_IMPLICIT(datetime2(7),[@DT],0)=[@DT2]

THEN 'Matched'

ELSE 'Not Matched' END<In the CL130 plan, it contains simply:

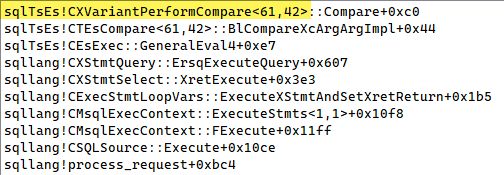

CASE WHEN [@DT]=[@DT2] THEN 'Matched' ELSE 'Not Matched' ENDThere is no implicit conversion. SQL Server performs the comparison directly. This is not a bug. For those of you who enjoy a debugger call stack, the expression evaluation is:

Yes, the data type precedence rules say the datetime value should be converted to datetime2 before comparison, but SQL Server doesn’t exactly do that here.

The subtlety

Don’t be misled by the values shown in the statement output. The CL130 behaviour is really subtle and can’t be explained just by noting that 1900-01-01 00:00:00.003 and 1900-01-01 00:00:00.0033333 do not match.

When SQL Server compares the two values, it compares 1900-01-01 00:00:00.003 as a datetime and 1900-01-01 00:00:00.0033333 as a datetime2.

According to the new “improved accuracy” rules, the exact datetime value is one tick after midnight on January 1, 1900. This is not the same as 0.0033333 seconds after midnight. It’s not 0.003 seconds after midnight either. That’s just normal datetime rounding.

No, one tick after midnight is 0.003… seconds where the ‘3’ is recurring. That is very slightly larger than 0.0033333.

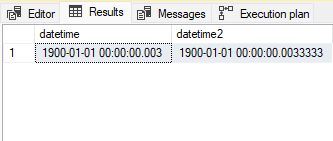

Don’t believe me? Run the following under CL130 or higher:

DECLARE @DT datetime = {TS '1900-01-01 00:00:00.003'};

DECLARE @DT2 datetime2(7) = CONVERT(datetime2(7), @DT);

SELECT TOP (1)

[datetime] = @DT,

[datetime2] = @DT2

WHERE

@DT > @DT2; -- Notice greater than!

Yes, 0.003 is larger than 0.0033333! That should shock you a bit might and make you wonder how much of your code base is broken. Remember, there’s no implicit conversion so you can’t go looking for examples with CONVERT_IMPLICIT in the plan XML.

Nevertheless, this is an accurate result under CL130+ because one datetime tick (1/300th of a second) is indeed longer than 0.0033333 datetime2 seconds because of the endless stream of trailing 3s.

The maximum precision of datetime2 is seven, so we can’t fix this by increasing that. There is simply no datetime2 value that exactly matches a datetime value with a 3 or 7 in the third decimal place. It would require infinite precision.

For completeness, note that 0.0033334 seconds is longer than one tick.

The explicit conversion fix

We can ‘correct’ the example by using an explicit conversion to datetime2:

DECLARE @DT datetime = {TS '1900-01-01 00:00:00.003'};

DECLARE @DT2 datetime2(7) = CONVERT(datetime2(7), @DT);

SELECT TOP (1)

[datetime] = @DT,

[datetime2] = @DT2,

[Match] =

IIF

(

-- Explicit conversion of @DT

CONVERT(datetime2(7), @DT) = @DT2,

'Matched',

'Not Matched'

);Results now show a match under both CL120 and CL130:

![Results with an explicit conversion in CL120 [top row] and CL130 [bottom row] Results with an explicit conversion in CL120 (top row) and CL130 (bottom row)](/img/5SDAnaRdxa-392.jpeg)

As before, CL120 results are in the top row and CL130 results are in the bottom row.

Again, don’t be misled by the displayed values for the CL130 case. The fix works because the accurate datetime value of one tick after midnight is explicitly converted to the closest datetime2(7) representation, 0.0033333 seconds. The same approximation is performed when assigning the DT2 variable, so the values match.

Changing from datetime2(7) to datetime2(3) would not fix the underlying issue here, though the displayed values would be the same (ending in 0.003). The problem is not the displayed values, it’s deep in the comparison internals.

Even Microsoft Gets This Wrong

The new behaviour may be more accurate, but it is certainly confusing and counterintuitive. If you think it’s tough to write correct T-SQL when combining a datetime data type with one of the newer ones in an expression, spare a thought for the SQL Server developers.

The query processor contains a great deal of code for deriving data types from complex expressions, comparing mismatched data types, manufacturing an index seek with mismatched predicates and much more besides. Now make all that work reliably for every combination of types, noting that the CL130 improvements were not limited to dates and times.

Let me show you a bug caused by this change that reproduces up to SQL Server 2022 CU14 when the database compatibility level is 130 or higher:

DECLARE @DT datetime = {TS '1900-01-01 00:00:00.003'};

DECLARE @DT2 datetime2(7) = CONVERT(datetime2(7), @DT);

CREATE TABLE #T

(

DT datetime NOT NULL INDEX i

);

INSERT #T VALUES (@DT);

SELECT DT

FROM #T WITH (FORCESEEK)

WHERE CONVERT(datetime, DT) = @DT2;

SELECT DT

FROM #T WITH (FORCESCAN)

WHERE CONVERT(datetime, DT) = @DT2;Notice the redundant CONVERT to datetime on the DT column. This activates an index-matching helper in the optimizer similar to the well-known practice of converting a column to the date type to match with a seek regardless of the time component. The bug doesn’t reproduce without the redundant conversion. You can use datetimeoffset instead of datetime2 if you like.

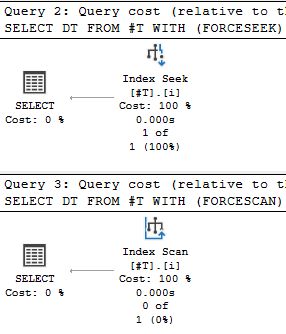

Anyway, the example performs the same comparison twice: once as an index seek (activating the helper) and once as an index scan (no seek helper):

The results are:

The seek plan produces a row but the scan plan does not!

The correct result is not to return the row because one tick after midnight is not the same as 0.0033333 seconds after midnight as we have seen. Using an index or not should never change the query result, so this is a product defect.

The seek plan contains [#T].DT = Scalar Operator([@DT2]).

The scan plan uses CONVERT(datetime,[#T].[DT],0)=[@DT2].

Both involve a mismatched-type comparison between datetime and datetime2. SQL Server just happens to compute the range values correctly in one case and not in the other. As I said, this stuff is subtle and confusing.

Final thoughts

A bug for the same underlying behaviour has been reported on the Feedback site by @MartinSmith_. Give it an upvote if you are able.

Meanwhile, don’t mix datetime with the newer date and time data types, or carefully use exactly the right explicit conversion if you do.

This can be difficult to spot because you might not always make an explicit data type declaration. For example, GETDATE() returns datetime, as does DATEADD when used with a string literal in the datetime range. It really can pay to be obsessive about matching expression data types.

Thanks for reading.

Related article