SQL Server Parallel Index Builds

This article was originally published on 𝕏.

SQL Server doesn’t support parallel modifications to a b-tree index.

That might sound surprising. After all, you can certainly write to the same b-tree index from multiple sessions concurrently. For example, two sessions can happily write alternating odd and even numbers to the same integer b-tree index. So long as both sessions execute on different schedulers and take row locks, there will be no blocking and you’ll get true concurrency.

No, what I mean is: A single session can’t write to a b-tree index using more than one thread. No parallel plan modifications of a b-tree index, in other words. It’s a bit like the lack of parallel backward ordered scans. There’s no reason it couldn’t be implemented, but it hasn’t been so far.

You may have thought SQL Server would use a regular parallel scan to read the index source data, optionally sort it into index key order, then add those rows to the index in parallel. This would indeed work, even without sorting, but SQL Server just can’t do it.

In case you’re wondering, sorting into destination key order is an optimization. The resulting index would still be correct without it, but you’d be inserting rows essentially at random into a b-tree, with all the random I/O and page splitting that would entail.

Ok, you say, but what about parallel index builds? They’ve been around for a long time in premium editions and certainly seem to modify a single b-tree in parallel. Yes, they do seem to, but SQL Server cheats.

The General Idea

To build a b-tree index in parallel, SQL Server splits the work up into disjoint sets according to the index keys. Each thread builds its own b-tree just as if it were a serial index building plan. Once all threads have built their individual indexes, a final step concatenates the partial indexes into the final single b-tree result. There is a little complexity if the interim indexes have different heights, but it’s nothing too tricky to handle.

Implications

This strategy only works if each thread can be given a range of values that is guaranteed not to be seen by any other thread. For that, SQL Server needs statistics. If a suitable statistics object doesn’t already exist on the leading key column of the new index, SQL Server builds temporary statistics using the default sampling rate. If it builds a statistics object for this purpose, it is thrown away as soon as it has been used to generate the disjoint ranges.

The range boundaries determine leading key range values for the index build. SQL Server statistics only provide histograms for the leading key, so that’s the only basis on which ranges can be generated. Your index might have a number of keys, but only the first one is used in determining how parallelism might be employed.

The query processor attempts to generate key value ranges with a roughly equal number of rows so work will be evenly distributed among parallel worker threads. One consequence of this is that a leading index key column with few distinct values may not be able to use the full degree of parallelism (DOP) available to the executing statement.

For example, in a session with an effective available DOP of 16 but only 8 distinct values for the leading key of the index, only a maximum of 8 cores (schedulers) can be employed.

Further, if the statistics show parallelism is unlikely to be effective, SQL Server will build a single-thread index insert plan instead. The reading and sorting part of the plan may still use parallelism, but the b-tree modification itself will be serial. This can happen where there is excessive row count skew among the ranges, for example.

The optimizer will also choose a serial index insert plan (with a possibly parallel scan and sort) if its total cost is less than the parallel index plan.

Producing Ranges

It’s one thing to determine the boundary values for the ranges, but we really need to produce rows in those ranges on different threads. How can that be done in practice?

Most often, we’ll be building a nonclustered index on a heap or clustered table with a different key order, so we can’t just perform a per-thread seek to obtain the disjoint ranges. There’s simply no index to seek into (yet).

The First Idea

In the original SQL Server 2000 implementation, SQL Server would fully scan the base table (heap or clustered index) per thread and apply the range filter appropriate to that thread at the storage engine layer.

This is a level deeper than anything you’ll see directly in even a modern execution plan. You will have seen ‘residual predicates’ pushed down into a scan or seek operator, but that’s still filtering at the query processor level. A storage engine filter is applied earlier, inside the access method performing the seek or scan. This is the most efficient way to apply a range filter, but it’s still a full scan of the entire base table per thread in principle. To repeat, you can’t see these filters directly in an execution plan.

The implementation still exists in modern SQL Server (up to 2022 inclusive). If you want to experience it with an offline index build, enable undocumented trace flag 7349 at session level or above.

Example 1

Using the Stack Overflow 2013 database, which has 52,928,720 rows in the Votes table:

EXECUTE dbo.DropIndexes;

GO

DBCC TRACEON (7349);

GO

CREATE NONCLUSTERED INDEX i

ON dbo.Votes (VoteTypeId)

WITH (MAXDOP = 0);

GO

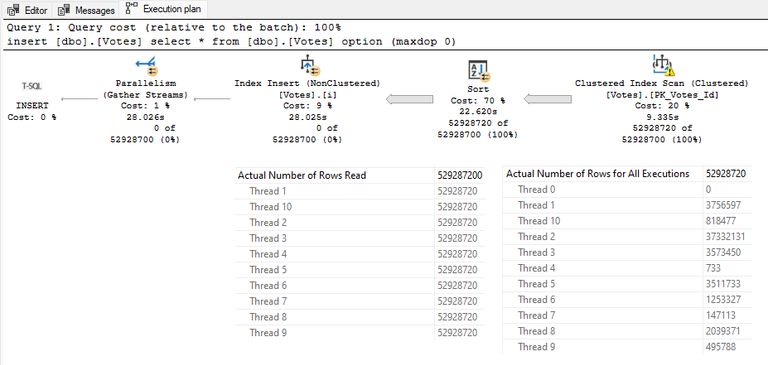

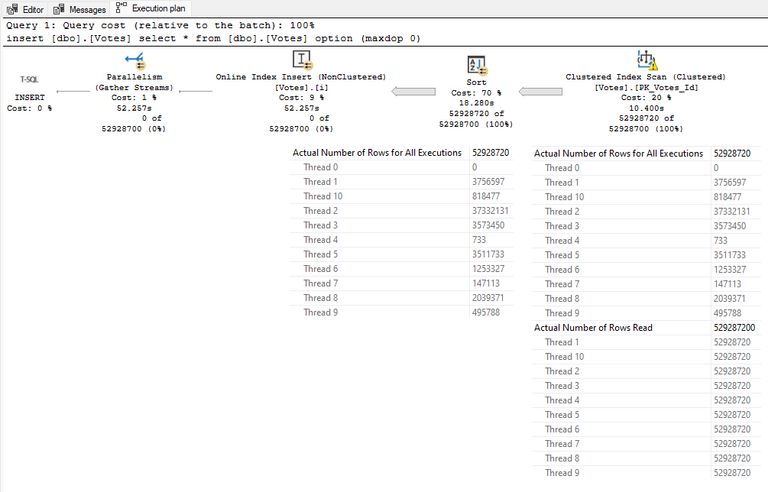

DBCC TRACEOFF (7349);The post-execution (‘actual’) index building plan is:

The row counts shown are for the Clustered Index Scan operator:

- The Actual Number of Rows Read counter reflects the number of rows touched by the storage engine, showing a full scan of the almost 53 million rows by each thread. In total, close to 530 million rows were processed at DOP 10.

- The Actual Number of Rows for All Executions counter shows the number of rows that passed the storage engine range filter for each thread. The ranges aren’t very well distributed in this case, mostly due to the underlying skew in row counts per VoteTypeId value.

The VoteTypeId column only has 14 distinct values in this edition of the Stack Overflow database. My laptop has 16 cores available, but SQL Server reduced the number of ranges to 10 in this case and set DOP for the statement to 10 to match.

The warning triangle on the scan is due to missing statistics on the VoteTypeId column. SQL Server displays this warning when it builds temporary statistics for range generation.

Note the Sort operator builds index rows directly. The output of the sort is a reference to an index row, not individual column values. This is an optimization that I mention in passing, it’s not important in the current context.

Example 2

Let’s see what happens if we build a nonclustered index using a column with a very skewed data distribution. The public Stack Overflow database scrubs voting data so the UserId column is almost always NULL.

EXECUTE dbo.DropIndexes;

GO

DBCC TRACEON (7349);

GO

CREATE NONCLUSTERED INDEX i

ON dbo.Votes (UserId)

WITH (MAXDOP = 0);

GO

DBCC TRACEOFF (7349);The execution plan with Clustered Index Scan row counts shows all 16 cores utilised:

However, the plan now includes a serial index insert. The scan is a regular cooperative parallel scan. Rows are sorted and merged at the Gather Streams exchange to present a single thread of sorted rows to the serial Index Insert operator.

The epic skew was enough to convince the optimizer that parallelism would not be effective. Almost all the index creation work would end up on a single thread due to the domination of nulls in the leading index key.

The execution time is much longer, not because the Gather Streams is slow, but because the single-threaded Index Insert simply can’t keep up with the rate of work provided by the parallel section of the plan.

Example 3

As a final illustration of the SQL Server 2000 strategy, let’s look at an index build on a slightly smaller table with a leading index key containing a good number of distinct values, since posts are well distributed among users creating them:

EXECUTE dbo.DropIndexes;

GO

DBCC TRACEON (7349);

GO

CREATE NONCLUSTERED INDEX i

ON dbo.Posts (OwnerUserId)

WITH (MAXDOP = 0);

GO

DBCC TRACEOFF (7349);The execution plan is:

The parallel Index Insert is back. A full scan of the 17 million row table is performed by each of 16 threads. This is not a regular cooperative parallel scan; it is 16 full scans totalling over 274 million rows. Each thread applies its range filter at the storage engine layer to produce the row counts shown in the Actual Number of Rows for All Executions counter.

There are 1,435,072 distinct values of OwnerUserId in the Posts table. This allows SQL Server to easily generate 16 ranges to distribute the work evenly over 16 threads. Each thread ends up sorting just over 1 million rows and building its disjoint section of the final 17 million row index.

SQL Server 2005 Improvement

The SQL Server 2000 strategy delivered a way to build a b-tree index using parallelism, which wasn’t possible before. It is still used for parallel online and resumable index builds, even in SQL Server 2022.

The main drawback is the full scan of the base table per thread, albeit with an efficient range filtering arrangement. This gets worse as the size of the table and DOP increases.

SQL Server 2005 improved on this arrangement for offline, unfiltered, and unpartitioned index builds by making use of something called Multi Sort. Though not exposed in showplan xml at the time, it is an optional property of the Sort operator.

The property is named Partition ID and is visible in showplan from SQL Server 2008 onward. When a Sort operator has the Partition ID property, it is a Multi Sort. The partition ID is the leading key column in parallel index plans. In other uses, it refers to a partition id as used in table and indexed view partitioning.

Multi Sort

A multi sort, as the name suggests, has multiple sort tables instead of just one. Primarily designed to support table partitioning, the multi sort found a creative usage in parallel index building plans.

If you think about it, building disjoint sections of an index in parallel is a lot like inserting rows into a partitioned table or index: We need to figure out which partition (= range) a row falls into before inserting it.

Instead of passing key value ranges to the storage engine and performing a full scan on each thread, SQL Server 2005 builds a temporary partitioning function based on the results of the histogram-based statistical analysis described previously. The temporary function isn’t persisted and doesn’t appear in any metadata. The multi sort uses the temporary partitioning function to route incoming rows to the correct sort table.

The primary advantage is that we can now use a regular cooperative parallel scan where each thread is given (pages of) rows from the base table on demand. Instead of DOP full scans, we can now have a single scan cooperatively performed by DOP threads. This is a big performance win.

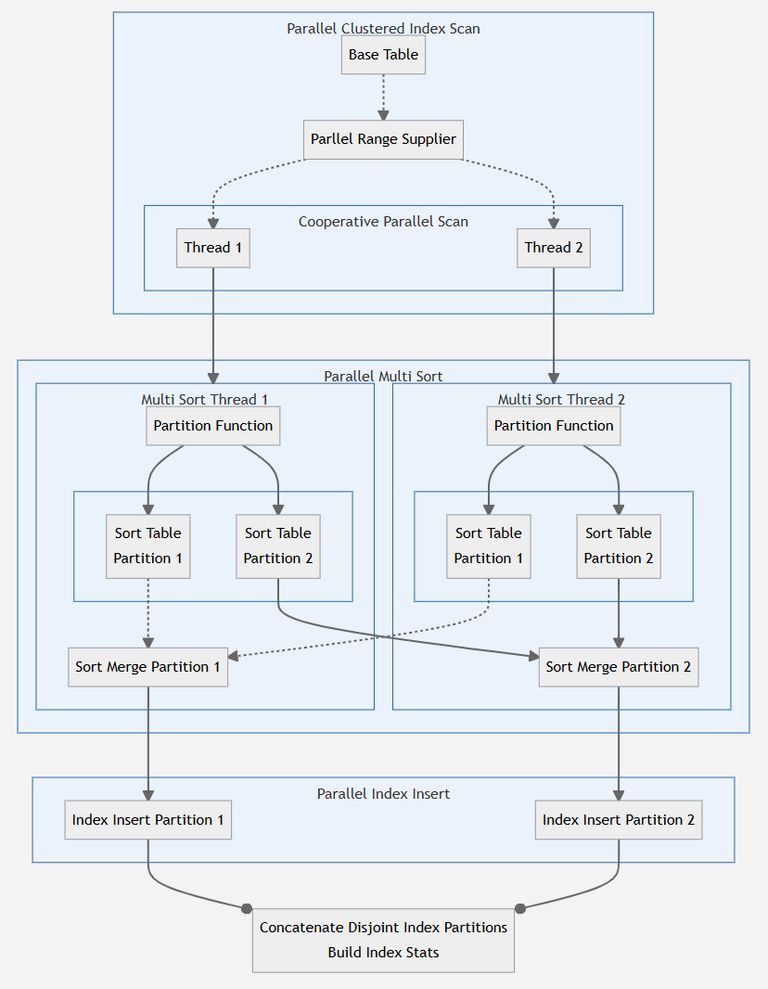

How It Works

Each thread taking part in the cooperative parallel scan receives some fraction of rows from the base table. The problem is the current thread will only end up building one part of the final index. It should therefore only deal with rows that fall within its assigned range.

The fundamental issue just seems to have moved from the scan to the sort, doesn’t it?

Well, no. The clever solution is the parallel multi sort. It’s a bit tricky to understand at first, so I’ll describe it in stages using an example of building a parallel index at DOP 16:

- A temporary partitioning function is built, describing 16 partitions.

- There are 16 serial instances of the multi sort operator in the parallel plan, one per thread, as normal.

- Each serial multi sort contains 16 sort tables. This is the key difference.

- There are 256 sort tables in total, 16 sets of 16, targeting partitions 1-16. We’re still sorting the same amount of data in total, so memory usage is much the same, just distributed differently.

- During the input phase of the sort, each thread receives rows as part of the cooperative parallel scan, essentially at random. It routes rows based on the temporary partitioning function to one of its private sort tables, numbered 1 to 16.

- Once the input phase of the sort is complete, all sort tables become public (accessible by any thread).

- When the sort operator transitions to its output phase, each thread merges rows from the 16 sort tables corresponding to its assigned partition. This will be one sort table from each of the 16 partitions.

The last part is the clever bit. Imagine you are thread #4, building the fourth part of the final index. All the data for your assigned range is spread across 16 muti sorts, but it’s guaranteed to be in sort table #4 of each.

All you need to do is read sorted data from each of the 16 sort tables associated with your partition number, preserving the desired sorted order. Merging multiple sorts is something SQL Server already does when it merges rows from spilled sort partitions, so we can just reuse the same external merge sort functionality for this.

The end result is that each thread receives sorted rows for just its part of the index building exercise from DOP sort tables. Once the 16 individual b-trees are built, they can be stitched together as before. Finally, statistics are built on the final index, and the process is complete.

Here is a visual representation of the process (at DOP 2 for space reasons):

Example

Let’s build the index in example 1 again, but without the trace flag.

EXECUTE dbo.DropIndexes;

GO

CREATE NONCLUSTERED INDEX i

ON dbo.Votes (VoteTypeId)

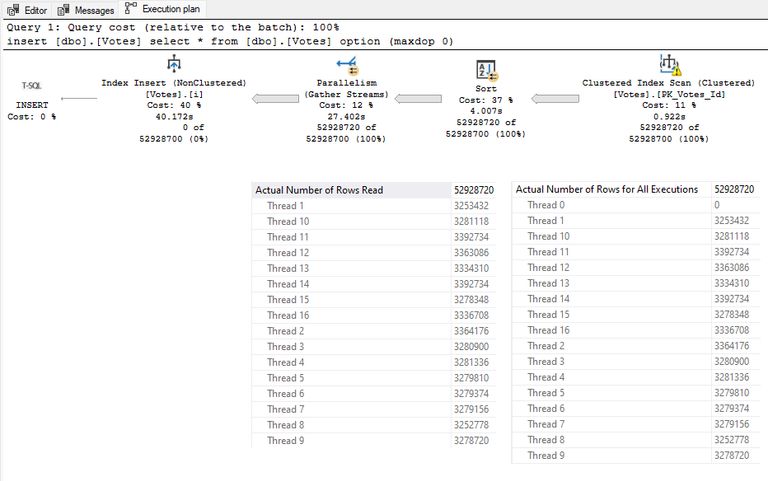

WITH (MAXDOP = 0);The execution plan is now:

The per-thread row counts at the scan now show a cooperative parallel scan. Each of 10 threads receives roughly 5.3 million rows from the approximately 53 million row table. This single parallel scan is much faster than scanning the full table 10 times and applying a storage engine filter.

The Sort operator is a Multi Sort, since it has a Partition ID attribute. The attribute indicates the leading key column used in the temporary partitioning function (VoteTypeId in this case).

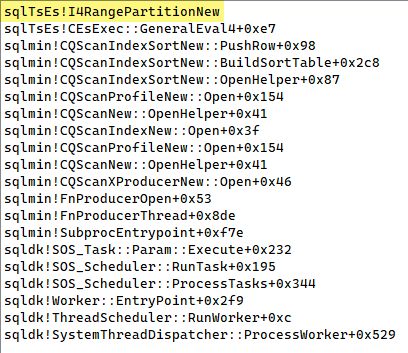

For those of you who enjoy seeing a SQL Server call stack, here’s one of the parallel threads calling the RangePartitionNew intrinsic to determine the partitioning function range for a row during sorting. The result of this call decides which sort table the row will be added to:

Key points:

- The Sort output shows the same imperfect distribution of rows as in the earlier example. Splitting rows using a partitioning function has the same effects as using storage engine filters because they use the same boundary points derived from the statistics histogram.

- DOP is reduced from 16 to 10 as before because only 10 partitions (ranges) are created using SQL Server’s algorithm.

- The parallel index creation process completes in around 14.4 seconds, compared with 28 seconds when using the SQL Server 2000 strategy. A very useful improvement.

The plan is an unusual one in that the distribution of rows among threads changes without a Repartition Streams exchange. The operation of the multi sort explains how and why this rearrangement occurs.

Online Index Builds

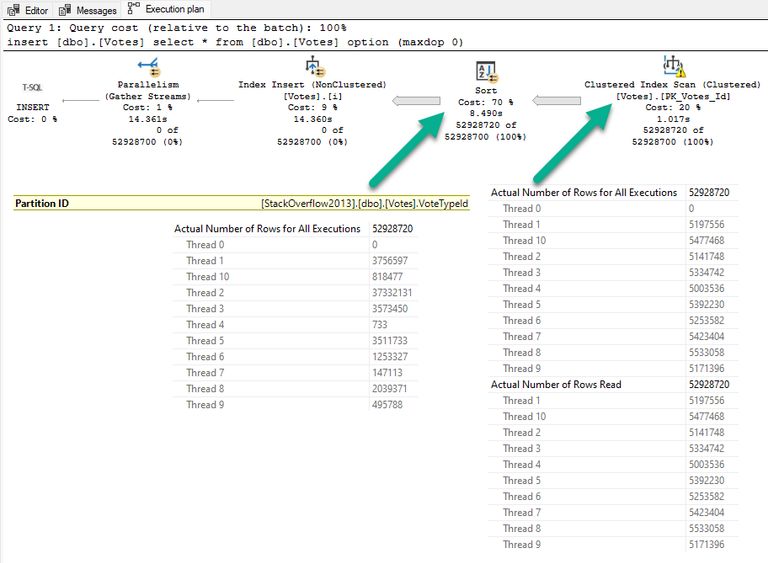

I’ve mentioned this already, but for the sake of completeness, here’s the same example without the trace flag but with an ONLINE index build specified:

EXECUTE dbo.DropIndexes;

GO

CREATE NONCLUSTERED INDEX i

ON dbo.Votes (VoteTypeId)

WITH (MAXDOP = 0, ONLINE = ON);The execution plan is:

This is much, much slower at over 52 seconds. Notice the 10 full scans and storage engine filtering. The sort does not have the Partition ID property. It is a regular parallel sort, not a multi sort.

Summary and Final Thoughts

SQL Server is able to build a b-tree index with parallelism, even though the core engine doesn’t directly support parallel write operations on b-trees. It does this by splitting the source data into disjoint ranges, building separate b-tree indexes on each thread, and stitching the component parts together at the end.

The original SQL Server 2000 strategy scanned the base table fully on each thread, applying a range filter in the storage engine to produce non-overlapping ranges per thread.

The new offline strategy uses a cooperative parallel scan, distributing rows using a temporary partition function at a Sort operator running in Multi Sort mode. The overall approach is very similar to building an aligned nonclustered index on a partitioned table (except the resulting index is not partitioned, of course).

The number of cores used and the distribution of rows among threads depends on the statistics histogram built only on the leading key of the index. SQL Server attempts to combine row counts across distinct values to achieve a good distribution of work, but the algorithm employed cannot always produce great results.

SQL Server can never use more cores than there are distinct values for the index leading key. It may use fewer cores than there are distinct values depending on how it chooses to combine adjacent key ranges from the statistics histogram.

It can never distribute rows with the same leading index key value across multiple threads. If one leading column key value dominates the row count, performance may be poor as that thread takes a long time to finish its assigned work.

SQL Server may choose a serial index insert if the skew is considered excessive, to the point that parallelism would only add overhead. A serial index building plan might still use parallelism in the scanning and sorting read-side portion of the execution plan. The serial index build will usually be a bottleneck in the plan, especially if your system configuration allows minimal logging.

The multi sort strategy described in this article applies only to unfiltered, offline, non-partitioned index builds. Online and resumable index builds still use the SQL Server 2000 strategy. Filtered indexes use range partitioning in a Repartition Streams exchange with a parallel scan and ordinary parallel sort.

Ideally, SQL Server would be enhanced to allow unrestricted parallel modifications to b-tree indexes in a single execution plan as, for example, Oracle has. This is the only way to address the skew issue. I understand this would be a very significant, and risky, body of work to complete so don’t hold your breath.

Thanks for reading.