The Lock Escalation Threshold—Part 1

Introduction

This article isn’t about the act of lock escalation itself, which is already well documented and generally well understood. Some myths (like row locks escalating to page locks) persist, but challenging those yet again probably wouldn’t change much. Instead, the question I’ll address here is exactly how and when lock escalation is triggered. Much of the documentation is incorrect or at least imprecise about this and I’ve been unable to find a correct description in other writings.

There are good reasons you haven’t seen a simple demo of lock escalation taking place at 5000 locks. I’ve seen suggestions such as lock escalation isn’t deterministic, or some types of locks don’t count toward the 5000 lock threshold. Neither of those assertions is true, but the details are interesting, as I’ll explain.

Testing environment

Let’s look at some examples using a fresh database. I happen to be using SQL Server 2019 CU16 but the details I’ll describe haven’t materially changed since partition level lock escalation was added to SQL Server 2008.

USE master;

-- Create a new test database

-- COLLATE clause only to remind people it exists

CREATE DATABASE Escalation

COLLATE Latin1_General_100_CI_AS;

-- Disable row-versioning isolation levels

-- to avoid the 14-byte row overhead

-- Not required, but it makes the later tests more precise

ALTER DATABASE Escalation SET

ALLOW_SNAPSHOT_ISOLATION OFF;

ALTER DATABASE Escalation SET

READ_COMMITTED_SNAPSHOT OFF;I’m creating a stored procedure to make it easier to run tests with different row counts while showing consistent diagnostic output. The procedure creates a test table, populates it with a configurable number of rows, runs a test statement inside a transaction, and displays information about locks and escalations.

USE Escalation;

GO

CREATE OR ALTER PROCEDURE dbo.RunTest

@Rows bigint,

@Clustered bit = 'false',

@HidePhysicalStats bit = 'false',

@HideLockDetail bit = 'false',

@HideLockSummary bit = 'false'

AS

SET NOCOUNT, XACT_ABORT ON;

-- Prevent plan caching for this procedure

-- See https://sql.kiwi/2022/03/simple-param-trivial-plans-1

GOTO Start

OPEN SYMMETRIC KEY Banana

DECRYPTION BY CERTIFICATE Banana;

Start:

-- Recreate the test table

DROP TABLE IF EXISTS dbo.LockTest;

CREATE TABLE dbo.LockTest

(

pk integer IDENTITY NOT NULL,

i bigint NOT NULL,

lob nvarchar(max) NOT NULL

);

-- Add data

INSERT dbo.LockTest

WITH (TABLOCKX)

(i, lob)

SELECT

i = ROW_NUMBER() OVER (ORDER BY @@SPID),

lob = CONVERT(nvarchar(max), N'big string')

FROM sys.all_columns AS AC1

CROSS JOIN sys.all_columns AS AC2

ORDER BY i

OFFSET 0 ROWS

FETCH NEXT @Rows ROWS ONLY;

-- Convert from heap to clustered if required

IF @Clustered = 'true'

BEGIN

ALTER TABLE dbo.LockTest

ADD CONSTRAINT [PK dbo.LockTest pk]

PRIMARY KEY CLUSTERED (pk)

WITH (ONLINE = OFF, MAXDOP = 1, FILLFACTOR = 100);

END;

-- Show table physical statistics

IF @HidePhysicalStats = 'false'

BEGIN

SELECT

IPS.index_type_desc,

IPS.alloc_unit_type_desc,

IPS.index_depth,

IPS.index_level,

IPS.page_count

FROM sys.dm_db_index_physical_stats

(

DB_ID(), OBJECT_ID(N'dbo.LockTest', 'U'), NULL, NULL, NULL

) AS IPS;

END;

-- Start a transaction

BEGIN TRANSACTION;

-- Test statement(s)

DECLARE @i bigint;

SELECT @i = LT.i

FROM dbo.LockTest AS LT

WITH (REPEATABLEREAD);

-- End test statement(s)

-- Show locks held by the current transaction

IF @HideLockDetail = 'false'

BEGIN

SELECT

DTL.resource_type,

DTL.resource_description,

DTL.resource_associated_entity_id,

DTL.request_mode,

DTL.request_status

FROM sys.dm_tran_locks AS DTL

WHERE

DTL.request_owner_type = N'TRANSACTION'

AND DTL.request_session_id = @@SPID

AND DTL.request_request_id = CURRENT_REQUEST_ID()

AND DTL.request_owner_id = CURRENT_TRANSACTION_ID()

ORDER BY

CASE DTL.resource_type

WHEN N'OBJECT' THEN 1

WHEN N'PAGE' THEN 2

WHEN N'KEY' THEN 3

WHEN N'RID' THEN 3

WHEN N'APPLICATION' THEN 999

END,

DTL.resource_description;

END;

-- Transaction lock summary

IF @HideLockSummary = 'false'

BEGIN

SELECT

TotalLockCount = COUNT_BIG(*),

HoBtLockCount =

SUM

(

IIF

(

DTL.resource_associated_entity_id = P.hobt_id,

1,

0

)

),

PageLocks =

SUM

(

IIF

(

DTL.resource_type = N'PAGE',

1,

0

)

),

RowLocks =

SUM

(

IIF

(

DTL.resource_type IN (N'RID', N'KEY'),

1,

0

)

),

TableLockType =

MAX

(

IIF

(

DTL.resource_type = N'OBJECT',

DTL.request_mode,

N''

)

)

FROM sys.partitions AS P

JOIN sys.dm_tran_locks AS DTL

ON DTL.resource_associated_entity_id IN

(P.[hobt_id], P.[object_id])

WHERE

P.[object_id] = OBJECT_ID(N'dbo.LockTest', 'U')

AND DTL.request_owner_type = N'TRANSACTION'

AND DTL.request_owner_id = CURRENT_TRANSACTION_ID()

AND DTL.request_session_id = @@SPID

AND DTL.request_request_id = CURRENT_REQUEST_ID();

END;

-- Index operational statistics

SELECT

IOS.row_lock_count,

IOS.page_lock_count,

IOS.index_lock_promotion_attempt_count,

IOS.index_lock_promotion_count

FROM sys.dm_db_index_operational_stats

(

DB_ID(), OBJECT_ID(N'dbo.LockTest', 'U'), NULL, NULL

) AS IOS;

ROLLBACK TRANSACTION;

DROP TABLE dbo.LockTest;

GOTest 1 with 6213 rows

My first test adds 6213 rows to a heap table and selects all rows using REPEATABLEREAD isolation to ensure shared locks are held to the end of the transaction.

EXECUTE dbo.RunTest @Rows = 6213;The outcome is essentially the same when using a clustered table. If you prefer that configuration, run the procedure with the optional @Clustered parameter set to true.

For a heap, example results are:

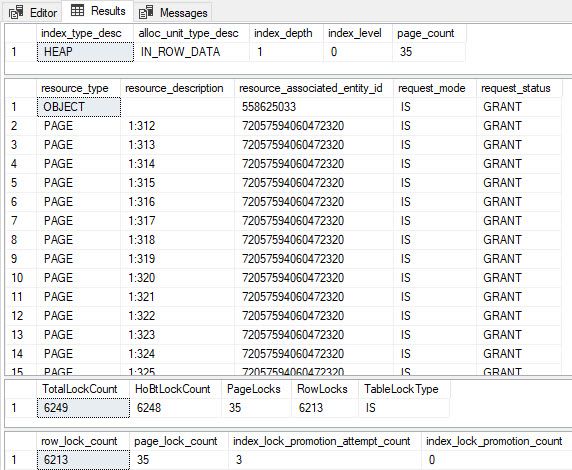

The first result set shows our test table is a heap with 35 allocated pages. The second set shows the locks held in detail. There are a large number of shared RID locks further down the list.

The third table shows a summary of the locks. There are a total of 6249 locks held: 6213 row locks, 35 page locks, and one table (OBJECT) lock.

The displayed HoBt (heap or b-tree) lock count (6248) includes all locks except the table lock. A HoBt (pronounced “hobbit”) is a partition. A table may have many partitions so a table-level lock is not associated with any particular partition.

The data comes from sys.dm_tran_locks, where the resource associated with a lock is a HoBt for KEY, RID, and PAGE locks. The associated resource for an OBJECT lock is an object id.

Lock escalation can be configured to escalate to the partition level instead of to the table. My test table isn’t partitioned, except in the technical sense, as all tables are partitioned with at least one partition. I won’t talk specifically about tables with multiple partitions for space reasons, but the details aren’t materially different.

The final result in the screenshot shows output from sys.dm_db_index_operational_stats. As expected, for a full scan of the 6213-row test table under repeatable read isolation, 6213 row locks were taken. Each shared (S) RID lock is covered by an intent-share (IS) lock on its immediate parent page (only) and an IS lock at the OBJECT level.

The last result set confirms no lock escalation occurred, but does indicate three lock promotion attempts were made.

This appears to contradict the documentation in several respects. First, no lock escalation occurred at 5000 held locks. Second, additional attempts are supposed to happen every subsequent 1250 locks. With 6249 locks held, we didn’t quite reach 5000 + 1250 = 6250 for the first ‘escalation retry’. I’ll explain these observations shortly.

Test 2 with 6214 rows

Let’s run a second test with one more row in the test table:

EXECUTE dbo.RunTest @Rows = 6214;The output is:

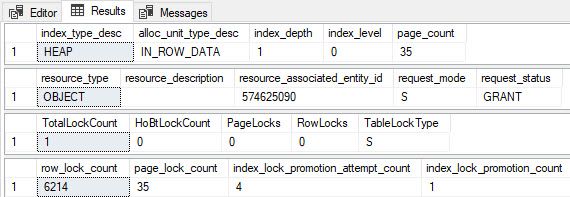

The output shows lock escalation turning the IS lock at the OBJECT level into an S lock and releasing all the RID and PAGE locks below it.

There were four lock escalation attempts, and one successful lock promotion according to the dynamic management views (DMVs).

There’s no difference between a ‘lock escalation’ and a ‘lock promotion’. The documentation and DMVs use different terminology to mean the same thing in different places.

Explanation

Lock escalation is described in several places in the documentation. The one coming closest to being completely accurate is in the (very long) Transaction locking and row versioning guide under the heading Escalation threshold for a Transact-SQL statement, where it says:

When the Database Engine checks for possible escalations at every 1,250 newly acquired locks, a lock escalation will occur if and only if a Transact-SQL statement has acquired at least 5,000 locks on a single reference of a table. Lock escalation is triggered when a Transact-SQL statement acquires at least 5,000 locks on a single reference of a table.

Careful reading is necessary to draw the correct inferences from those statements. It also helps if you already know what’s happening and why before reading. I’ll unpack the necessary details next.

Initial check for lock escalation

The engine does indeed check for possible lock escalation at (almost) every 1250 locks acquired by the transaction. Checking after every lock would create unacceptably high overhead since locks can be acquired and released with great frequency.

The SQL Server lock manager keeps track of the number of locks held by the current transaction. When a lock is acquired, the count is incremented. When a lock is released, the counter is decremented. Converting an existing lock, for example from U to X has no net effect on the counter. It’s the cumulative number of locks held by the transaction that’s important.

This counter isn’t limited to table/page/row locks on user objects: it includes all locks acquired via the lock manager count, including intent and application locks. System locks also count, but these are rarely held for long and aren’t associated with the user transaction.

When the number of locks held divides evenly by 1250, a check for lock escalation is started. Well, almost. A quirk of the implementation means no check is performed when the count of held locks is exactly 1250.

The sqlmin!lck_lockInternal locking code below checks if the number of locks is greater than 1250 (0x4E2 hexadecimal):

mov r8d,dword ptr [rcx+64h] -- load lock count

cmp r8d,4E2h -- compare with 1,250

ja sqlmin!lck_lockInternal+0x1070 -- jump if aboveThe branch is only taken if the lock count is greater than 1250, not if it’s equal. The first number of held locks that will take the branch is therefore 1251, not 1250. This seems like an oversight.

Integer division

When the branch is taken, SQL Server next divides the held lock count by 1250 using integer division, then multiplies the result by 1250. This implements the idea of checking once every 1250 newly-held locks.

For example, 1251 divided by 1250 gives 1 (using integer division), then 1250 after the multiplication. This result doesn’t match the original value of 1251, so we know the original didn’t evenly divide by 1250. When 2500 locks are held, the integer division results in 2, giving the original 2500 when multiplied by 1250.

When the number of held locks is evenly divisible by 1250, the engine continues to check for lock escalation by calling into sqlmin!XactLockInfo::EscalateLocks. Otherwise, no escalation occurs.

This means escalation checks occur in practice when the number of held locks is 2500, 3750, 5000, 6250, and so on. Notice the lack of a check at 1250 due to the code quirk I mentioned. Also, the checks don’t start at 5000 held locks.

As a side note, the compiled code doesn’t actually divide by 1250 since integer division is typically a much slower CPU operation than integer multiplication. Instead, it multiplies by 1/1250 using a technique known as Division by Invariant Multiplication:

mov eax,0D1B71759h -- 0.8192 in hexadecimal

mul eax,r8d -- multiply #locks

shr edx,0Ah -- shift right 10 (divide by 1024)

-- so we have multipled by 0.0008 = 1/1,250

imul eax,edx,4E2h -- multiply by 1,250

cmp r8d,eax -- did we get the original number back?Access methods

To understand the next part of the process accurately, I’ll first need to give an introduction to access methods.

When the SQL Server query processor needs to read or change data, it does so via one or more storage engine access methods. Whenever you see a data-accessing operator in an execution plan—like an update, insert, delete, seek, or scan—there’s at least one connection to the storage engine via access methods.

Each data-accessing plan operator has its own access methods. A plan accessing the same underlying object (heap or index) multiple times has different access methods for each operator. A common example of this would be a self-join using the same index both times.

Some individual plan operators can have multiple access methods. For example, an Update operator may have one for inserts and one for deletes if it is preceded by a Split operator. A Merge operator may have up to three access methods for inserts, deletes, and updates.

I like to think of access methods as an API used by the query processor to read or change data managed by the storage engine. These connections aren’t visible in execution plans.

The main access method elements relevant to this article are the heap and index dataset sessions sqlmin!HeapDataSetSession and sqlmin!IndexDataSetSession respectively. These are created when a data-accessing operator opens.

Each access method dataset session is associated with a particular HoBt and maintains a count of locks held. This is separate from the total lock count maintained by the lock manager per transaction.

Access method checks

In sqlmin!XactLockInfo::EscalateLocks SQL Server sequentially checks all active access methods associated with the current statement to see if any qualify for lock escalation.

As I mentioned, an access method typically becomes active when its parent query processor operator is opened during statement execution. If you need a refresher on how execution plans operate in detail see my article, Iterators, Query Plans, and Why They Run Backwards.

My tests have only a single HeapDataSetSession controlling the heap table scan so only one access method is checked in the present case.

Each access method check begins with a call to its ‘escalate locks’ method. In my heap example, this is a call to HeapDataSetSession::EscalateLocks. For an index, the call would be to IndexDataSetSession::EscalateLocks.

An important lock count disparity

The first escalation check in my example occurs when the lock manager counts 2500 locks associated with the current transaction. However, the call to HeapDataSetSession::EscalateLocks finds only 2498 locks associated with its HoBt at that time.

There are two reasons for this difference:

First, the HeapDataSetSession doesn’t count the intent-shared table lock because it isn’t a HoBt-level lock. Remember, a table can have several partitions. This accounts for one ‘missing’ lock.

Second, the HeapDataSetSession updates its count after the lock is acquired, while the lock escalation check happens in the lock manager during lock acquisition. The current lock is therefore included in the lock manager’s count but hasn’t yet been recorded by the access method. This accounts for the second lock ‘missing’ from the HoBt count.

These differences again feel unintentional to me.

Lock escalation attempts

The code in HeapDataSetSession::EscalateLocks next calls sqlmin!IsEscalationPossible to check if the current access method qualifies for lock escalation.

If documented trace flag 1211 is enabled, this method returns immediately, and no escalation takes place.

Otherwise, the current access method increments its lock escalation attempts counter. This happens regardless of the number of locks it currently holds. This means a ‘lock escalation attempt’ is really only a check, not an attempt per se. We’re still checking to see if we should try to escalate locks; we haven’t decided to do it yet.

Bear this in mind when interpretingindex_lock_promotion_attempt_count in sys.dm_db_index_operational_stats. The documentation describes this as the “Cumulative number of times the Database Engine tried to escalate locks”, but this really isn’t what it measures. Tools and scripts relying on this DMV may also report misleading information for this reason.

Whenever the lock manager’s total transaction locks counter divides evenly by 1250 (except at exactly 1250 locks as previously noted), each active access method associated with the current statement will have its ‘lock promotion attempt’ counter incremented. There’s no guarantee any access method will qualify for a lock promotion attempt at the time this check is made.

In my example, the lock manager’s count is currently 2500, so there’s no prospect of any lock escalation due to the lock threshold of 5000 being met. Nevertheless, the ‘lock promotion attempt’ counter for the heap table is incremented.

This explains why test 1 saw three ‘lock promotion attempts’ and test 2 saw four ‘attempts’. Checks were triggered at 2500, 3750, and 5000 held locks in both cases. Test 2 triggered an extra check when it reached 6250 held locks (6214 row locks, 35 page locks, and a table lock).

Lock escalation

After incrementing the ‘promotion attempts’ counter, the code in sqlmin!IsEscalationPossible next checks the number of locks held by the access method against the threshold for escalation. This threshold is exactly 5000 locks. The precise test is:

cmp esi,1388h -- compare locks held with 5000 (hex 1388)

jb sqlmin!IsEscalationPossible+0x222 -- jump if belowLock escalation is attempted if the number of HoBt locks held by the current access method is greater than or equal to 5000.

In test 1, this check failed at 2500, 3750, and 5000 held locks (according to the lock manager) because the HoBt only had 2498, 3748, and 4998 locks at those times. Remember, the HoBt-level counter doesn’t include the table lock or the current lock being acquired.

In test 2, the first three checks failed in exactly the same way. The last check at 6250 locks succeeded because the HoBt lock count was 6248, meeting the 5000-lock threshold.

In case you’re wondering why test 1 didn’t trigger lock escalation at 5002 held locks (with 5000 recorded against the HoBt at that time), it’s because 5002 doesn’t divide evenly by 1250. The next check after 5000 held locks doesn’t occur until 6250 total locks, and test 1 never quite hit that mark.

Final check

When the access method has 5000 or more locks, SQL Server checks trace flag 1224 to see if lock escalation due to the lock threshold has been disabled.

If not, the physical process of lock escalation begins in earnest with a call to sqlmin!HeapDataSetSession::ForceLockEscalation. This converts the IS table lock to S and releases the lower-level HoBt locks in a bulk operation.

End of Part 1

Summarizing the main points from this article:

- The total number of locks held is incremented when a lock of any type is acquired by the current transaction. The count is decremented when a lock is released.

- Lock escalation checking is triggered at 2500 held locks per transaction and every 1250 additional. No checks happen between these times.

- A separate count per HoBt is maintained for each active access method associated with each data-accessing plan operator.

- These HoBt counts don’t include the table-level intent lock or the current lock being acquired.

- A lock escalation ‘attempt’ is counted for all active access methods in the current statement whenever the total held lock count divides exactly by 1250, starting at 2500.

- The ‘attempt’ counter is misleadingly named. Escalation is not guaranteed to be attempted at the time it’s incremented. It indicates only that the HoBt access method counter is being checked.

- Escalation is attempted for any active HoBt access method in the current statement with at least 5000 held locks. Remember, this count doesn’t include the table lock or current lock.

- Simple tests will typically escalate at 6250 total held locks (where the single HoBt lock counter is 6248) despite the threshold being 5000. This is because the prior check at 5000 locks finds only 4998 locks on the HoBt.

The second and third parts of this series will continue my coverage of lock escalation thresholds with the impact of parallelism, a discussion of lock lifetimes, and some interesting edge case examples.