How Parallel Plans Start Up—Part 1

Setup

To provide a concrete basis for the analysis, we will follow how a particular parallel query starts execution. I used the public Stack Overflow 2013 database (download details). The desired plan shape can also be obtained against the smaller Stack Overflow 2010 data set if that is more convenient. It can be downloaded at the same link.

I added one nonclustered index:

CREATE NONCLUSTERED INDEX PP

ON dbo.Posts

(

PostTypeId ASC,

CreationDate ASC

);My test environment is SQL Server 2019 CU9 on a laptop with 8 cores and 16GB of memory allocated to the instance. Compatibility level 150 is used exclusively. I mention those details to help you reproduce the target plan if you wish. The fundamentals of row mode parallel execution haven’t changed since SQL Server 2005, so the following discussion is broadly applicable.

The test query returns the total number of questions and answers, grouped by month and year.:

WITH

MonthlyPosts AS

(

SELECT

P.PostTypeId,

CA.TheYear,

CA.TheMonth,

Latest = MAX(P.CreationDate)

FROM dbo.Posts AS P

CROSS APPLY

(

VALUES

(

YEAR(P.CreationDate),

MONTH(P.CreationDate)

)

) AS CA (TheYear, TheMonth)

GROUP BY

P.PostTypeId,

CA.TheYear,

CA.TheMonth

)

SELECT

rn = ROW_NUMBER() OVER (

ORDER BY Q.TheYear, Q.TheMonth),

Q.TheYear,

Q.TheMonth,

LatestQuestion = Q.Latest,

LatestAnswer = A.Latest

FROM MonthlyPosts AS Q

JOIN MonthlyPosts AS A

ON A.TheYear = Q.TheYear

AND A.TheMonth = Q.TheMonth

WHERE

Q.PostTypeId = 1

AND A.PostTypeId = 2

ORDER BY

Q.TheYear,

Q.TheMonth

OPTION

(

USE HINT ('DISALLOW_BATCH_MODE'),

USE HINT ('FORCE_DEFAULT_CARDINALITY_ESTIMATION'),

ORDER GROUP,

MAXDOP 2

);I have used hints to get a particular shape row mode plan. Execution is limited to DOP 2 to make some of the details shown later more concise.

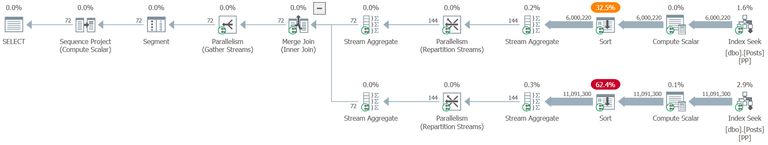

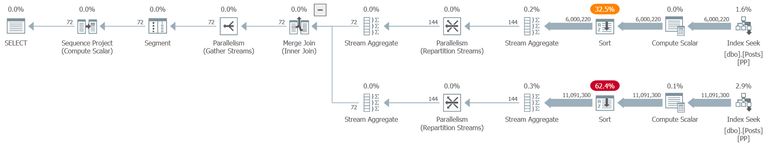

The estimated execution plan is:

Background

The query optimizer produces a single compiled plan for a batch. Each statement in the batch is marked for serial or parallel execution, depending on eligibility and estimated costs.

A parallel plan contains exchanges (parallelism operators). Exchanges may appear in distribute streams, repartition streams, or gather streams form. Each of these exchange types uses the same underlying components, just wired up differently, with a different number of inputs and outputs. For more background on row mode parallel execution see Parallel Execution Plans—Branches and Threads.

DOP downgrade

The degree of parallelism (DOP) for a parallel plan can be downgraded at runtime if necessary. A parallel query might start out requesting DOP 8, but be progressively downgraded to DOP 4, DOP 2, and finally DOP 1 due to a lack of system resources at that moment. If you would like to see that in action, see this short video by Erik Darling.

Running a parallel plan on a single thread can also happen when a cached parallel plan is reused by a session that is limited to DOP 1 by an environmental setting (e.g. affinity mask or resource governor). See Myth: SQL Server Caches a Serial Plan with every Parallel Plan for details.

Whatever the cause, DOP downgrade of a cached parallel plan does not result in a new serial plan being compiled. SQL Server reuses the existing parallel plan by disabling the exchanges. The result is a ‘parallel’ plan that executes on a single thread. The exchanges still appear in the plan, but they are bypassed at runtime.

SQL Server cannot promote a serial plan to parallel execution by adding exchanges at runtime. That would require a fresh compilation.

Parallel Plan Initialization

A parallel plan has all the exchanges needed to utilize extra worker threads, but there is additional setup work needed at runtime before parallel execution can begin. One obvious example is that additional worker threads must be allocated to specific tasks within the plan, but there is a lot more to it than that.

I am going to start at the point where a parallel plan has been retrieved from the plan cache. At this point, only the original thread processing the current request exists. This thread is sometimes called the “coordinator thread” in parallel plans, but I prefer the terms “parent task” or “parent worker”. There is otherwise nothing special about this thread; it is the same thread the connection uses to process client requests and run serial plans to completion.

To emphasize the point that only a single thread exists right now, I want you to visualize the plan at this point in time like this:

I will be using screenshots from Plan Explorer almost exclusively in this post, but for this first view only, I will also show the SSMS version:

In either representation, the key difference is the lack of parallelism icons on each operator, even though the exchanges are still present. Only the parent task exists right now, running on the original connection thread. No additional worker threads have been reserved, created, or assigned tasks yet. Keep the above plan representation front of mind as we go along.

Creating the executable plan

The plan at this point is essentially just a template that can be used as the basis for any future execution. To get it ready for a specific run, SQL Server needs to fill in runtime values like the current user, transaction context, parameter values, ids for any objects created at runtime (e.g. temporary tables and variables), and so on.

For a parallel plan, SQL Server needs to do quite a bit of additional preparatory work to get the internal machinery to the point where execution can start. The parent task’s worker thread is responsible for performing almost all this work (and certainly all the work we will cover in part 1).

The process of transforming the plan template for a specific run is known as creating the executable plan. It is sometimes difficult to keep the terminology straight, because terms are often overloaded and misapplied (even by Microsoft), but I will do my best to be as consistent as possible.

Execution contexts

You can think of an execution context as a plan template populated with all the specific runtime information needed by a particular thread. The executable plan for a serial statement consists of a single execution context, where a single thread runs the whole plan.

A parallel executable plan contains a collection of execution contexts: One for the parent task, and one per thread in each parallel branch. Each additional parallel worker thread runs its part of the overall plan within its own execution context. For example, a parallel plan with three branches running at DOP 8 has (1 + (3 * 8)) = 25 execution contexts. Serial execution contexts are cached for reuse, but additional parallel execution contexts are not.

The parent task always exists before any additional parallel tasks, so it is assigned execution context zero. The execution contexts used by parallel workers will be created later, after the parent context is fully initialized. The additional contexts are cloned from the parent context, then customized for their specific task (this is covered in part 2).

There are a number of activities involved in starting up execution context zero. It is impractical to attempt to list them all, but it will be useful to cover some of the main ones applicable to our test query. There will still be too many for a single list, so I will break them up into (somewhat arbitrary) sections:

1. Parent context initialization

When we submit the statement for execution, the parent task’s context (execution context zero) is initialized with:

- A reference to the base transaction (explicit, implicit, or auto-commit). Parallel workers will run sub-transactions, but they are all scoped within the base transaction.

- A list of statement parameters and their current values.

- A primary memory object (PMO) used to manage memory grants and allocations.

- A linked map of the operators (query nodes) in the executable plan.

- A factory for any required large object (blob) handles.

- Lock classes to keep track of multiple locks held for a period during execution. Not all plans require lock classes as fully streaming operators typically lock and unlock individual rows in sequence.

- The estimated memory grant for the query.

- Row mode memory grant feedback structures for each operator (SQL Server 2019).

Many of these things will be used or referenced by parallel tasks later on, so they need to exist at the parent scope first.

2. Parent context metadata

The next main tasks performed are:

- Checking the estimated query cost is within the limit set by the query governor cost limit configuration options.

- Updating index usage records – exposed through

sys.dm_db_index_usage_stats. - Creating cached expression values (runtime constants).

- Creating a list of profiling operators used to collect runtime metrics like row counts and timings, if this has been requested for the current execution. The profilers themselves are not created yet, just the list.

- Taking a snapshot of waits for the session waits feature exposed via

sys.dm_exec_session_wait_stats.

3. DOP and memory grant

The parent task context now:

- Calculates runtime degree of parallelism (DOP). This is affected by the number of free workers (see “DOP downgrade” earlier), where they can be placed among nodes, and a number of trace flags.

- Reserves the required number of threads. This step is pure accounting—the threads themselves may not exist at this point. SQL Server keeps track of the maximum number of threads it is allowed to have. Reserving threads subtracts from that number. When the threads are finished with, the maximum number is increased again.

- Sets memory grant timeout.

- Calculates the memory grant, including memory required for exchange buffers.

- Acquires the memory grant via the appropriate resource semaphore.

- Creates a manager object to handle parallel subprocesses. The parent task is the top-level process; additional tasks are also known as subprocesses.

While threads are ‘reserved’ at this point, SQL Server may still encounter THREADPOOL waits later on when it tries to use one of the ‘reserved’ threads. The reservation guarantees SQL Server will remain around its configured maximum number of threads at all times, but the physical thread may not be immediately available from the thread pool. When that happens, a new thread will need to be started by the operating system, which can take a little while. For more on that, see Unusual THREADPOOL Waits by Josh Darnell.

4. Query scan setup

Row mode plans execute in iterative fashion, starting at the root. The plan we have at the moment is not yet capable of this mode of execution. It is still largely a template, even if it does contain a fair amount of execution-specific information already.

SQL Server needs to convert the current structure to a tree of iterators, each with methods like Open, GetRow, and Close. The iterator methods are connected to their children via function pointers. For example, calling GetRow on the root recursively calls GetRow on child operators until a leaf level is reached and a row starts to ‘bubble up’ the tree. For a refresher on the details see Iterators, Query Plans, and Why They Run Backwards.

End of Part 1

We have made good progress setting up the execution context for the parent task. In part 2, we will follow along as SQL Server constructs the query scan tree necessary for iterative execution.