A bug with Halloween Protection and the OUTPUT Clause

Background

The OUTPUT clause can be used to return results from an INSERT, UPDATE, DELETE, or MERGE statement. The data can be returned to the client, inserted to a table, or both.

There are two ways to add OUTPUT data to a table:

- Using

OUTPUT INTO - With an outer

INSERTstatement.

For example:

-- Test table

DECLARE @Target table

(

id integer IDENTITY (1, 1) NOT NULL,

c1 integer NULL

);

-- Holds rows from the OUTPUT clause

DECLARE @Output table

(

id integer NOT NULL,

c1 integer NULL

);--

-- Using OUTPUT INTO

--

-- Insert to the target table

INSERT @Target

(c1)

-- Insert to the output table

OUTPUT

Inserted.id,

Inserted.c1

INTO @Output

(id, c1)

VALUES (1);--

-- Using outer INSERT

--

-- Insert to the output table

INSERT @Output

(id, c1)

SELECT

SQ1.id, SQ1.c1

FROM

(

-- Insert to the target table

INSERT @Target

(c1)

OUTPUT

-- Returns data to the outer INSERT

Inserted.id,

Inserted.c1

VALUES (1)

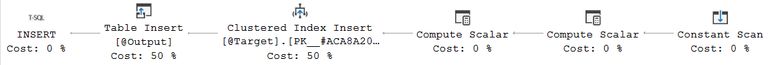

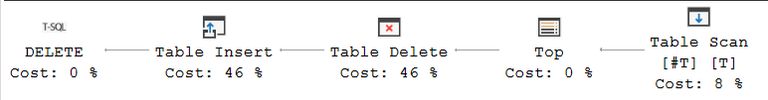

) AS SQ1;The execution plan is the same for both forms:

Notice there are two Insert operators. The new row is first added to the @Target table by the Clustered Index Insert, then added to the @Output table by the Table Insert operator.

Output to the same table

The OUTPUT table can be the same as the target table.

For example, this is valid code:

DECLARE @T table (c1 integer NULL);

-- Insert to the target table

INSERT @T (c1)

-- Also output to the same table

OUTPUT Inserted.c1

INTO @T (c1)

VALUES

(1),

(2),

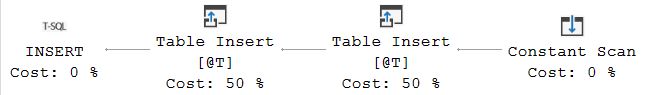

(3);Each source row will be inserted twice, using separate Insert operators:

The final contents of table @T are:

| c1 |

|---|

| 1 |

| 1 |

| 2 |

| 2 |

| 3 |

| 3 |

Delete and Insert on the Same Table

The OUTPUT INTO operation is always an insert, but the originating change can be an insert, update, delete, or merge.

Performing a delete and insert on the same table can even be useful. For example, consider that columns with the IDENTITY property cannot be updated.

To change an identity value (perhaps as part of ETL), we need to delete and re-insert the row. This can be done quite neatly using OUTPUT INTO:

DECLARE @T table

(

id integer IDENTITY NOT NULL PRIMARY KEY,

col1 integer NULL UNIQUE

);

-- Sample data

INSERT @T

(col1)

VALUES

(1234),

(2345),

(3456);

SELECT * FROM @T;| id | col1 |

|---|---|

| 1 | 1234 |

| 2 | 2345 |

| 3 | 3456 |

-- Delete and re-insert a row in a single statement

DELETE @T

OUTPUT Deleted.col1

INTO @T

WHERE

col1 = 2345;

SELECT * FROM @T;| id | col1 |

|---|---|

| 1 | 1234 |

| 4 | 2345 |

| 3 | 3456 |

Notice the id has ‘changed’ from 2 to 4. This technique is especially convenient when the source rows have many columns, making a separate DELETE and INSERT somewhat tedious to implement.

Halloween Protection

The OUTPUT INTO (or outer INSERT) is a data-changing operation that may require Halloween Protection to ensure correct processing and results.

As a brief refresher, the SQL standard specifies that the result of a data-changing operation must be the same as if it had been executed in three separate (non-overlapping) phases:

- Read: Find the records to be changed and the new values.

- Write: Apply changes to the affected records.

- Verify: check database consistency constraints.

One way to achieve this complete phase separation is to introduce an Eager Table Spool between the reading and writing sides of the execution plan. All data needed to make the changes is acquired and saved in the spool before modifications are started (so writes cannot affect the reads).

This is not always the most efficient approach, so SQL Server explores other plan options that deliver enough Halloween protection (see my article series for details). In short, the optimizer calculates the necessary level of Halloween Protection for each data-changing operator, and chooses the cheapest plan option that meets the need.

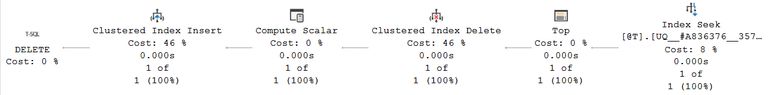

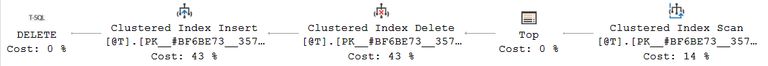

For example, in the immediately prior execution plan, the Top operator is present to provide Halloween Protection via a cardinality guarantee. Reading a guaranteed maximum of one row can make a spool or other blocking operator logically unnecessary.

Simplified example

Let’s look at a same-table delete & insert example with only a single column (and no identity):

DECLARE @T table (col1 integer NOT NULL PRIMARY KEY);

INSERT @T

(col1)

VALUES

(1),

(2),

(3);

DELETE @T

OUTPUT

Deleted.col1

INTO @T;

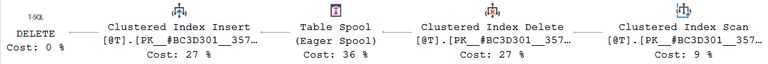

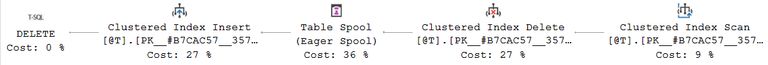

The optimizer recognizes that Halloween Protection is not required for the Clustered Index Delete operator because there isn’t a self-join of the target table. So nothing is needed between the Scan and Delete operators.

However, the Clustered Index Insert does require protection because the target table is the same as the source of the rows.

In this instance, the optimizer chose an Eager Table Spool to ensure that all reads from table @T complete before the inserts start (full phase separation).

A bug appears!

The following code correctly deletes and re-inserts a single row:

DECLARE @T table (col1 integer NOT NULL PRIMARY KEY);

INSERT @T (col1) VALUES (1);

DELETE @T

OUTPUT

Deleted.col1

INTO @T;

But if we add a TOP (1) to the delete statement…

DECLARE @T table

(

col1 integer NOT NULL

PRIMARY KEY CLUSTERED

);

INSERT @T (col1) VALUES (1);

DELETE TOP (1) @T

OUTPUT

Deleted.col1

INTO @T;…the optimizer determines that the Top operator will provide sufficient Halloween Protection for the insert (no spool needed):

Deleting and inserting one row using that code throws an exception:

Msg 2627, Level 14, State 1, Line 168

Violation of PRIMARY KEY constraint 'PK__#A33C781__357D0D3E3DC9DF29'.

Cannot insert duplicate key in object 'dbo.@T'.

The duplicate key value is (1).

The statement has been terminated.What went wrong?

Explanation

Looking at the execution plan, it is hard to see how deleting a row (at the Clustered Index Delete) then inserting it again (at the Clustered Index Insert) could possibly result in a duplicate key in the index. Remember there is only one row, one column, and one index.

Logically, the only way this error can occur is if the Delete operator does not delete the row.

That might sound a bit crazy, but it is the way the plan works:

- When the row arrives at the Delete, an exclusive lock is taken, but the base row is not deleted yet.

- Control passes to the parent Insert operator.

- The Insert throws the duplicate key error because the delete hasn’t happened yet.

If the Insert did not throw the error, execution would have continued as follows:

- Control would return from the Insert to the Delete operator.

- The Delete logs the delete, physically deletes the base row (marks it a ghost), and releases the exclusive lock

- The Delete asks for the next row to process from its child operator (the Top)…and so on.

This is the way the Delete works by design. Insert and Update operators perform their data-changing operations immediately. Delete waits to maintain the base row until the operator is just about to move on to the next row.

Buggy bug

To be clear, this deleting arrangement is necessary, and works perfectly well in normal circumstances. The issue is the optimizer doesn’t account for the edge case of inserting the same row in a unique clustered index after deleting it.

Halloween protection is needed between the Delete and Insert in this special case to avoid the incorrect key violation error. This could be provided by an Eager Table Spool or other blocking operator like a Sort; anything that ensures the delayed delete(s) are physically performed before the insert(s) would do.

The bug is not limited to TOP(1) deletes. That was just a convenient way to demonstrate the issue. Any plan that deletes and re-inserts to the same unique clustered index using the OUTPUT clause is potentially vulnerable. This includes MERGE statements with a DELETE action.

Whether the bug manifests in practice depends on the placement and degree of Halloween protection in the wider plan. The optimizer is not aware of the present issue, so any protection present will be for other reasons.

This bug reproduces on all versions of SQL Server from 2008 R2 SP3-GDR (build 6560) to SQL Server 2019 CU5 inclusive. It is also present on Azure Managed Instance and Azure SQL Database.

It may also exist on earlier SQL Server versions, but I no longer have those installed.

More details

I used the phrases “base row” and “unique clustered index” deliberately above. The erroneous duplicate key error does not occur with a unique nonclustered index:

DECLARE @T table

(

col1 integer NOT NULL

-- Nonclustered now

PRIMARY KEY NONCLUSTERED

);

INSERT @T (col1) VALUES (1);

DELETE TOP (1) @T

OUTPUT

Deleted.col1

INTO @T;

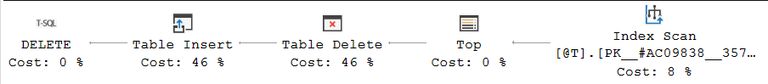

(note the Delete and Insert operators in this plan are narrow updates – they maintain the base table and one or more secondary indexes.)

Execution completes successfully. It is not because the optimizer chooses to read from the nonclustered index, rather:

- The Delete operator still does not delete the base row at first.

- It does delete the corresponding entry in the nonclustered index.

- The Insert then succeeds because the unique nonclustered index does not contain the inserted key (we just deleted it).

- When control returns to the Delete from the Insert, the base row is deleted.

To summarize, a narrow (per-row) Delete operator does maintain nonclustered (secondary) indexes immediately, but waits to delete the base row from the heap or clustered index until control returns from its parent operator(s).

Wide (per-index) plans may include a spool for the usual reasons, which provides full phase separation as a side-effect. For more details on this and narrow & wide plans in general, see my article Optimizing T-SQL queries that change data.

More examples

A couple more code examples are provided below if you want to satisfy yourself that reading from the nonclustered is not a factor, or that only a unique clustered index causes the problem.

These examples use a temporary table rather than a table variable because table variables (sadly) do not support index hints:

-- No error reading from the base table

DROP TABLE IF EXISTS #T;

CREATE TABLE #T

(

col1 integer NOT NULL

-- Nonclustered

PRIMARY KEY NONCLUSTERED

);

INSERT #T (col1) VALUES (1);

DELETE TOP (1) T

OUTPUT

Deleted.col1

INTO #T

FROM #T AS T WITH (INDEX(0));

-- No error with a non-unique clustered index

-- and unique nonclustered index

DROP TABLE IF EXISTS #T;

CREATE TABLE #T

(

col1 integer NOT NULL,

-- Nonunique clustered

INDEX c CLUSTERED (col1),

-- Unique nonclustered

UNIQUE NONCLUSTERED (col1)

);

INSERT #T (col1) VALUES (1);

DELETE TOP (1) T

OUTPUT

Deleted.col1

INTO #T

FROM #T AS T WITH (INDEX(0));

Final Thoughts

Summarizing the conditions needed to encounter the bug:

- Delete and insert on the same unique clustered index using the

OUTPUTclause. - Either a

DELETEor aDELETEaction in aMERGEstatement. - The optimizer must fail to place sufficient Halloween Protection between the Clustered Index Delete and Clustered Index Insert operators.

This bug has been reported to Microsoft. I don’t know if it will be considered important enough to fix.

If it is, they might choose to disable OUTPUT INTO (and outer INSERT) where the target of the output insert is the same as the delete and a unique clustered index is present. Or they might require full phase separation for the Insert. It’ll be interesting to see which way this goes.

The incorrect key violation must be rare in the wild if it hasn’t been reported since at least SQL Server 2008 R2, so I don’t feel bad writing about it before a fix is ready.

In any case, the point in writing this post was not to highlight the bug per se. It was a good chance to write about some of the less well-known details of delete execution plans. I hope you found it interesting.