Minimal Logging with INSERT…SELECT and Fast Load Context

Introduction

This post describes the detailed preconditions for minimal logging when using INSERT...SELECT with b-tree indexes. For the considerations that apply when the target table is a heap, see part one of this series. For empty clustered tables, see part two.

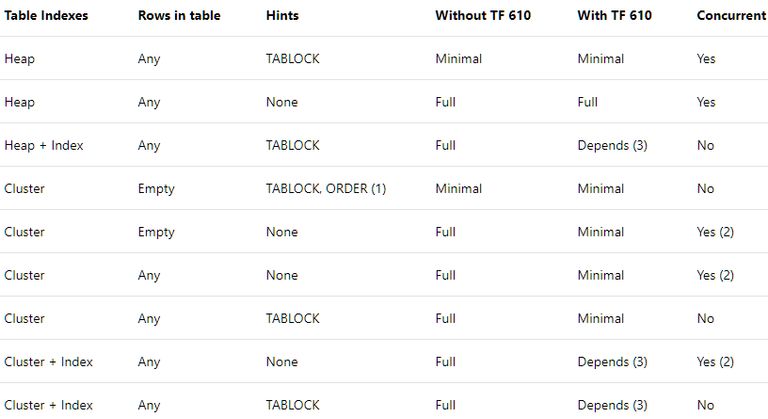

Summary Tables

The well-known Data Loading Performance Guide summarizes the conditions for minimal logging as follows:

The notes are:

(1) If you are using the

INSERT…SELECTmethod, the ORDER hint does not have to be specified, but the rows must be in the same order as the clustered index. If usingBULK INSERTthe order hint must be used.

(2) Concurrent loads only possible under certain conditions. See “Bulk Loading with the Indexes in Place”. Also, only rows written to newly allocated pages are minimally logged.

(3) Depending on the plan chosen by the optimizer, the nonclustered index on the table may either be fully- or minimally logged.

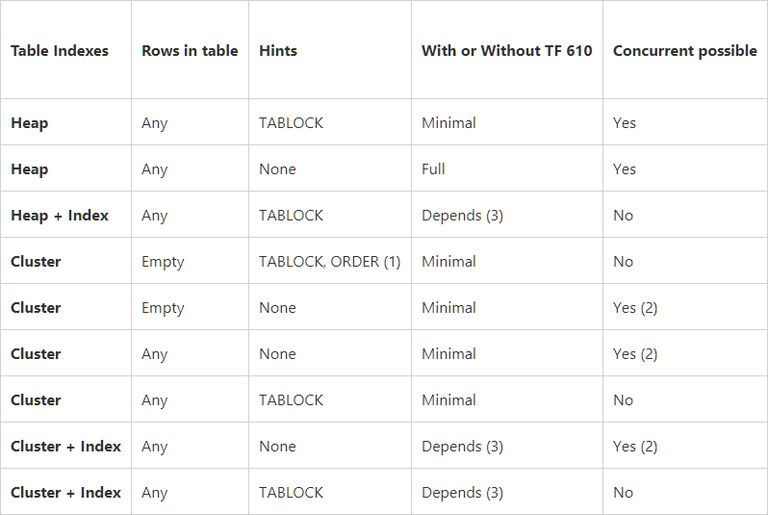

The preceding table applies to SQL Server versions from 2008 to 2014. The Tiger Team update for SQL Server 2016 onwards is:

The basic message for clustered tables is that minimal logging is available if:

- The target table is empty and the right hints are used; or

- Trace flag 610 is enabled, or SQL Server 2016+ is used.

Fast Load Context

As a quick reminder, RowsetBulk (covered in parts 1 and 2) enables minimally-logged bulk load for:

- Empty and non-empty heap tables with:

- Table locking; and

- No secondary indexes.

- Empty clustered tables, with:

- Table locking; and

- No secondary indexes; and

DMLRequestSort=trueon the Clustered Index Insert operator.

The FastLoadContext code path supports minimally-logged and concurrent bulk load for:

- Empty and non-empty clustered b-tree indexes.

- Empty and non-empty nonclustered b-tree indexes maintained by a dedicated Index Insert plan operator.

DMLRequestSort=trueon the corresponding plan operator.

You may have noticed the overlap between RowsetBulk and FastLoadContext for empty clustered tables with no secondary indexes. A TABLOCK hint is not required for fast load context, but it is not required to be absent either (though it does limit concurrency, and has other effects discussed later). A suitable insert with a TABLOCK hint may therefore still qualify for minimal logging and bulk load via FastLoadContext if it fails the detailed RowsetBulk tests.

Activation and monitoring

Fast load context is enabled using trace flag 610 from SQL Server 2008 to SQL Server 2014. From SQL Server 2016 onward, it is on by default, but can be disabled using documented trace flag 692.

The Debug channel Extended Event fastloadcontext_enabled can be used to monitor fast load context use per index partition (rowset). This event does not fire for RowsetBulk loads.

Mixed logging modes

A single INSERT...SELECT statement using fast load context may fully log some rows while minimally logging others.

Rows are inserted one at a time by the Index Insert operator and fully logged in the following cases:

- All rows added to the first index page, if the index was empty at the start of the operation.

- Rows added to existing index pages.

- Rows moved between pages by a page split.

Otherwise, rows from the ordered insert stream are directly added to a brand new page using an optimized, and minimally-logged code path. Once as many rows as possible are written to the new page, it is directly linked into the existing target index structure.

The newly added page will not necessarily be full (though obviously that is the best case) because SQL Server has to be careful not to add rows that logically belong on an existing index page. This is primarily an issue when adding new rows within the current index key range, rather than at the extreme start or end of the index.

It is still possible to add new pages within the existing index key range, but the new rows must sort higher than the highest key on the preceding existing index page and sort lower than the lowest key on the following existing index page. For the best chance of achieving minimal logging in these circumstances, ensure the inserted rows do not overlap with existing rows as far as possible.

DMLRequestSort Conditions

There are two main code paths that can set DMLRequestSort to true (required to enable fast load context) when inserting into a b-tree index. Either path returning true is sufficient.

1. FOptimizeInsert

The sqllang!CUpdUtil::FOptimizeInsert code requires:

- More than 250 rows estimated to be inserted; and

- More than 2 pages estimated insert data size; and

- The target index must have fewer than 3 leaf pages.

These conditions are the same as RowsetBulk on an empty clustered index, with an additional requirement for no more than two index leaf-level pages. Note carefully that this refers to the size of the existing index before the insert, not the estimated size of the data to be added.

The script below is a modification of the demo used in earlier parts in this series. It shows minimal logging when fewer than three index pages are populated before the test INSERT...SELECT runs. The test table schema is such that 130 rows can fit on a single 8KB page when row versioning is off for the database. The multiplier in the first TOP clause can be changed to determine the number of existing index pages before the test INSERT...SELECT is executed:

IF OBJECT_ID(N'dbo.Test', N'U') IS NOT NULL

BEGIN

DROP TABLE dbo.Test;

END;

GO

CREATE TABLE dbo.Test

(

id integer NOT NULL IDENTITY

CONSTRAINT [PK dbo.Test (id)]

PRIMARY KEY,

c1 integer NOT NULL,

padding char(45) NOT NULL

DEFAULT ''

);

GO

-- 130 rows per page for this table

-- structure with row versioning off

INSERT dbo.Test

(c1)

SELECT TOP (3 * 130) -- Change the 3 here

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV;

GO

-- Show physical index statistics

-- to confirm the number of pages

SELECT

DDIPS.index_type_desc,

DDIPS.alloc_unit_type_desc,

DDIPS.page_count,

DDIPS.record_count,

DDIPS.avg_record_size_in_bytes

FROM sys.dm_db_index_physical_stats

(

DB_ID(),

OBJECT_ID(N'dbo.Test', N'U'),

1, -- Index ID

NULL, -- Partition ID

'DETAILED'

) AS DDIPS

WHERE

DDIPS.index_level = 0; -- leaf level only

GO

-- Clear the plan cache

DBCC FREEPROCCACHE;

GO

-- Clear the log

CHECKPOINT;

GO

-- Main test

INSERT dbo.Test

(c1)

SELECT TOP (269)

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV;

GO

-- Show log entries

SELECT

FD.Operation,

FD.Context,

FD.[Log Record Length],

FD.[Log Reserve],

FD.AllocUnitName,

FD.[Transaction Name],

FD.[Lock Information],

FD.[Description]

FROM sys.fn_dblog(NULL, NULL) AS FD;

GO

-- Count the number of fully-logged rows

SELECT

[Fully Logged Rows] = COUNT_BIG(*)

FROM sys.fn_dblog(NULL, NULL) AS FD

WHERE

FD.Operation = N'LOP_INSERT_ROWS'

AND FD.Context = N'LCX_CLUSTERED'

AND FD.AllocUnitName = N'dbo.Test.PK dbo.Test (id)';

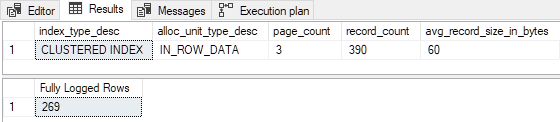

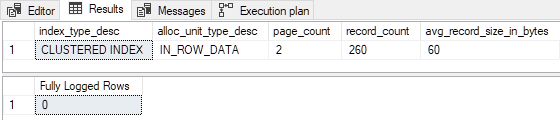

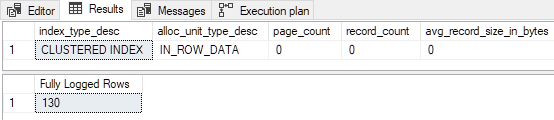

GOWhen the clustered index is preloaded with 3 pages, the test insert is fully logged (transaction log detail records omitted for brevity):

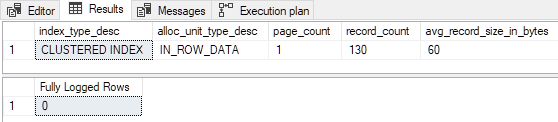

When the table is preloaded with only 1 or 2 pages, the test insert is minimally logged:

When the table is not preloaded with any pages, the test is equivalent to running the empty clustered table demo from part two, but without the TABLOCK hint:

The first 130 rows are fully logged. This is because the index was empty before we started, and 130 rows fit on the first page. Remember, the first page is always fully logged when fast load context is used and the index was empty beforehand. The remaining 139 rows are inserted with minimal logging.

If a TABLOCK hint is added to the insert, all pages are minimally logged (including the first one) since the empty clustered index load now qualifies for the RowsetBulk mechanism (at the cost of taking an Sch-M lock).

2. FDemandRowsSortedForPerformance

If the FOptimizeInsert tests fail, DMLRequestSort may still be set to true by a second set of tests in the sqllang!CUpdUtil::FDemandRowsSortedForPerformance code. These conditions are a little more complex, so it will be useful to define some parameters:

P– number of existing leaf-level pages in the target index.I– estimated number of rows to insert.R=P/I(target pages per inserted row).T– number of target partitions (1 for unpartitioned).

The logic to determine the value of DMLRequestSort is then:

- If

P <= 16return false, otherwise:- If

R < 8:- If

P > 524return true, otherwise false.

- If

- If

R >= 8:- If

T > 1andI > 250return true, otherwise false.

- If

- If

The above tests are evaluated by the query processor during plan compilation. There is a final condition evaluated by storage engine code (IndexDataSetSession::WakeUpInternal) at execution time:

DMLRequestSortis currently true; andI >= 100.

We will break all this logic down into manageable pieces next.

More than 16 existing target pages

The first test P <= 16 means that indexes with fewer than 17 existing leaf pages will not qualify for fast load context via this code path. To be absolutely clear on this point, P is the number of leaf-level pages in the target index before the INSERT...SELECT is executed.

To demonstrate this part of the logic, we will preload the test clustered table with 16 pages of data. This has two important effects (remember both code paths must return false to end up with a false value for DMLRequestSort):

- It ensures that the previous

FOptimizeInserttest fails, because the third condition is not met (P < 3). - The

P <= 16condition inFDemandRowsSortedForPerformancewill also not be met.

We therefore expect fast load context not to be enabled. The modified demo script is:

IF OBJECT_ID(N'dbo.Test', N'U') IS NOT NULL

BEGIN

DROP TABLE dbo.Test;

END;

GO

CREATE TABLE dbo.Test

(

id integer NOT NULL IDENTITY

CONSTRAINT [PK dbo.Test (id)]

PRIMARY KEY,

c1 integer NOT NULL,

padding char(45) NOT NULL

DEFAULT ''

);

GO

-- 130 rows per page for this table

-- structure with row versioning off

INSERT dbo.Test

(c1)

SELECT TOP (16 * 130) -- 16 pages

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV;

GO

-- Show physical index statistics

-- to confirm the number of pages

SELECT

DDIPS.index_type_desc,

DDIPS.alloc_unit_type_desc,

DDIPS.page_count,

DDIPS.record_count,

DDIPS.avg_record_size_in_bytes

FROM sys.dm_db_index_physical_stats

(

DB_ID(),

OBJECT_ID(N'dbo.Test', N'U'),

1, -- Index ID

NULL, -- Partition ID

'DETAILED'

) AS DDIPS

WHERE

DDIPS.index_level = 0; -- leaf level only

GO

-- Clear the plan cache

DBCC FREEPROCCACHE;

GO

-- Clear the log

CHECKPOINT;

GO

-- Main test

INSERT dbo.Test

(c1)

SELECT TOP (269)

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV1

CROSS JOIN master.dbo.spt_values AS SV2;

GO

-- Show log entries

SELECT

FD.Operation,

FD.Context,

FD.[Log Record Length],

FD.[Log Reserve],

FD.AllocUnitName,

FD.[Transaction Name],

FD.[Lock Information],

FD.[Description]

FROM sys.fn_dblog(NULL, NULL) AS FD;

GO

-- Count the number of fully-logged rows

SELECT

[Fully Logged Rows] = COUNT_BIG(*)

FROM sys.fn_dblog(NULL, NULL) AS FD

WHERE

FD.Operation = N'LOP_INSERT_ROWS'

AND FD.Context = N'LCX_CLUSTERED'

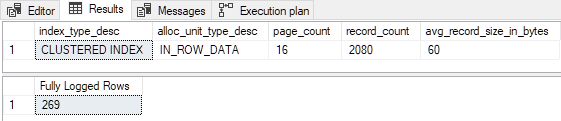

AND FD.AllocUnitName = N'dbo.Test.PK dbo.Test (id)';All 269 rows are fully logged as predicted:

Note that no matter how high we set the number of new rows to insert, the script above will never produce minimal logging because of the P <= 16 test (and P < 3 test in FOptimizeInsert).

If you choose to run the demo yourself with a larger number of rows, comment out the section that shows individual transaction log records, otherwise you will be waiting a very long time, and SSMS may crash. (To be fair, it might do that anyway, but why add to the risk.)

Pages per inserted row ratio

If there are 17 or more leaf pages in the existing index, the previous P <= 16 test will not fail. The next section of logic deals with the ratio of existing pages to newly inserted rows. This must also pass to achieve minimal logging. As a reminder, the relevant conditions are:

- Ratio

R=P/I. - If

R < 8:- If

P > 524return true, otherwise false.

- If

We must also remember the final storage engine test for at least 100 rows:

I >= 100.

Reorganizing those conditions a bit, all of the following must be true:

P > 524(existing index pages)I >= 100(estimated inserted rows)P / I < 8(ratioR)

There are multiple ways to meet those three conditions simultaneously. Let’s choose the minimal possible values for P (525) and I (100) giving an R value of (525 / 100) = 5.25. This satisfies the (R < 8 test), so we expect this combination to result in minimal logging:

IF OBJECT_ID(N'dbo.Test', N'U') IS NOT NULL

BEGIN

DROP TABLE dbo.Test;

END;

GO

CREATE TABLE dbo.Test

(

id integer NOT NULL IDENTITY

CONSTRAINT [PK dbo.Test (id)]

PRIMARY KEY,

c1 integer NOT NULL,

padding char(45) NOT NULL

DEFAULT ''

);

GO

-- 130 rows per page for this table

-- structure with row versioning off

INSERT dbo.Test

(c1)

SELECT TOP (525 * 130) -- 525 pages

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV1

CROSS JOIN master.dbo.spt_values AS SV2;

GO

-- Show physical index statistics

-- to confirm the number of pages

SELECT

DDIPS.index_type_desc,

DDIPS.alloc_unit_type_desc,

DDIPS.page_count,

DDIPS.record_count,

DDIPS.avg_record_size_in_bytes

FROM sys.dm_db_index_physical_stats

(

DB_ID(),

OBJECT_ID(N'dbo.Test', N'U'),

1, -- Index ID

NULL, -- Partition ID

'DETAILED'

) AS DDIPS

WHERE

DDIPS.index_level = 0; -- leaf level only

GO

-- Clear the plan cache

DBCC FREEPROCCACHE;

GO

-- Clear the log

CHECKPOINT;

GO

-- Main test

INSERT dbo.Test

(c1)

SELECT TOP (100)

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV1

CROSS JOIN master.dbo.spt_values AS SV2;

GO

-- Show log entries

SELECT

FD.Operation,

FD.Context,

FD.[Log Record Length],

FD.[Log Reserve],

FD.AllocUnitName,

FD.[Transaction Name],

FD.[Lock Information],

FD.[Description]

FROM sys.fn_dblog(NULL, NULL) AS FD;

GO

-- Count the number of fully-logged rows

SELECT

[Fully Logged Rows] = COUNT_BIG(*)

FROM sys.fn_dblog(NULL, NULL) AS FD

WHERE

FD.Operation = N'LOP_INSERT_ROWS'

AND FD.Context = N'LCX_CLUSTERED'

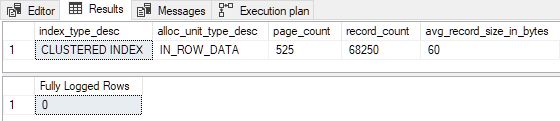

AND FD.AllocUnitName = N'dbo.Test.PK dbo.Test (id)';The 100-row INSERT...SELECT is indeed minimally logged:

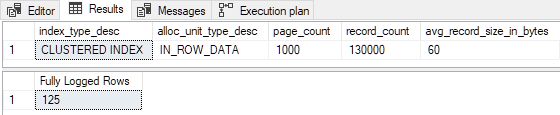

Reducing the estimated inserted rows to 99 (breaking I >= 100), and/or reducing the number of existing index pages to 524 (breaking P > 524) results in full logging. We could also make changes such that R is no longer less than 8 to produce full logging. For example, setting P = 1000 and I = 125 gives R = 8, with the following results:

The 125 inserted rows were fully logged as expected. (This is not due to first page full logging, since the index was not empty beforehand.)

Page ratio for partitioned indexes

If all the preceding tests fail, the one remaining test requires R >= 8 and can only be satisfied when the number of partitions (T) is greater than 1 and there are more than 250 estimated inserted rows (I). Recall:

- If

R >= 8:- If

T > 1andI > 250return true, otherwise false.

- If

One subtlety: For partitioned indexes, the rule that says all first-page rows are fully logged (for an initially empty index) applies per partition. For an object with 15,000 partitions, that means 15,000 fully logged ‘first’ pages.

Summary

The formulas and order of evaluation described in the body are based on code inspection using a debugger. They were presented in a form that closely represents the timing and order used in the real code.

It is possible to reorder and simplify those conditions a bit, to produce a more concise summary of the practical requirements for minimal logging when inserting into a b-tree using INSERT...SELECT. The refined expressions below use the following three parameters:

P= number of existing index leaf-level pages.I= estimated number of rows to insert.S= estimated insert data size in 8KB pages.

Rowset bulk load

- Uses

sqlmin!RowsetBulk. - Requires an empty clustered index target with

TABLOCK(or equivalent). - Requires

DMLRequestSort = trueon the Clustered Index Insert operator. DMLRequestSortis settrueifI > 250andS > 2.- All inserted rows are minimally logged.

- An

Sch-Mlock prevents concurrent table access.

Fast load context

- Uses

sqlmin!FastLoadContext. - Enables minimally-logged inserts to b-tree indexes:

- Clustered or nonclustered.

- With or without a table lock.

- Target index empty or not.

- Requires

DMLRequestSort = trueon the Insert plan operator. - Only rows written to brand new pages are bulk loaded and minimally logged.

- The first page of a previously empty index partition is always fully logged.

- Absolute minimum of

I >= 100. - Requires trace flag 610 before SQL Server 2016.

- Available by default from SQL Server 2016 (trace flag 692 disables).

DMLRequestSort is set true for:

- Any index (partitioned or not):

- If

I > 250andP < 3andS > 2 - or

I >= 100andP > 524andP < I * 8

- If

For partitioned indexes only (with > 1 partition), DMLRequestSort is also set true if:

I > 250andP > 16andP >= I * 8

Final Thoughts

There are a few interesting cases arising from those conditions:

- All inserts to a non-partitioned index with between 3 and 524 (inclusive) existing leaf pages will be fully logged regardless of the number and total size of the rows added. This will most noticeably affect large inserts to small (but not empty) tables.

- All inserts to a partitioned index with between 3 and 16 existing pages will be fully logged.

- Large inserts to large non-partitioned indexes may not be minimally logged due to the inequality

P < I * 8. WhenPis large, a correspondingly large estimated number of inserted rows (I) is required. For example, an index with 8 million pages cannot support minimal logging when inserting 1 million rows or fewer.

General remarks

Watch out for low cardinality estimates at the Index Insert operator, as these will affect the I and S parameters. If a threshold is not reached due to a cardinality estimation error, the insert will be fully logged.

Remember that DMLRequestSort is cached with the plan—it is not evaluated on each execution of a reused plan. This can introduce a form of the well-known Parameter Sensitivity Problem (also known as “parameter sniffing”).

The value of P (index leaf pages) is not refreshed at the start of every statement. The current implementation caches the value for the whole batch. This can have unexpected side-effects. For example, a TRUNCATE TABLE in the same batch as an INSERT...SELECT will not reset P to zero for the calculations described in this article—they will continue to use the pre-truncate value, and a recompilation will not help. A workaround is to submit large changes in separate batches.

Nonclustered indexes

The same considerations and calculations applied to clustered indexes in the demos apply to nonclustered b-tree indexes as well, as long as the index is maintained by a dedicated plan operator (a wide, or per-index plan). Nonclustered indexes maintained by a base table operator (e.g. Clustered Index Insert) are not eligible for fast load context.

Note that the formula parameters need to be evaluated afresh for each nonclustered index operator—calculated row size, number of existing index pages, and cardinality estimate.

Trace flags

It is possible to force FDemandRowsSortedForPerformance to return true by setting undocumented and unsupported trace flag 2332, as I wrote in Optimizing T-SQL queries that change data. When TF 2332 is active, the number of estimated rows to insert still needs to be at least 100. TF 2332 affects the minimal logging decision for fast load context only (it is effective for partitioned heaps as far as DMLRequestSort is concerned, but has no effect on the heap itself, since fast load context only applies to indexes).

A wide/per-index plan shape for nonclustered index maintenance can be forced for rowstore tables using trace flag 8790 (not officially documented, but mentioned in a Knowledge Base article as well as in my article as linked for TF 2332 just above).

Thanks for reading.