Locking and Performance

Introduction

The type and number of locks acquired and released during query execution can have a surprising effect on performance (when using a locking isolation level like the default read committed) even where no waiting or blocking occurs. There is no information in execution plans to indicate the amount of locking activity during execution, which makes it harder to spot when excessive locking is causing a performance problem.

To explore some less well-known locking behaviours in SQL Server, I will reuse the queries and sample data from my post on calculating medians. In that post, I mentioned that the OFFSET grouped median solution needed an explicit PAGLOCK locking hint to avoid losing badly to the nested cursor solution, so let us start by having a look at the reasons for that in detail.

OFFSET Grouped Median

The grouped median test reused the sample data from Aaron Bertrand’s earlier article. The script below recreates this million-row setup, consisting of 10,000 records for each of a hundred imaginary sales people:

CREATE TABLE dbo.Sales

(

SalesPerson integer NOT NULL,

Amount integer NOT NULL

);

WITH X AS

(

SELECT TOP (100)

V.number

FROM master.dbo.spt_values AS V

GROUP BY

V.number

)

INSERT dbo.Sales WITH (TABLOCKX)

(

SalesPerson,

Amount

)

SELECT

X.number,

ABS(CHECKSUM(NEWID())) % 99

FROM X

CROSS JOIN X AS X2

CROSS JOIN X AS X3;

CREATE CLUSTERED INDEX cx

ON dbo.Sales

(SalesPerson, Amount);The SQL Server 2012 (and later) OFFSET solution created by Peter Larsson is as follows (without any locking hints):

DECLARE @s datetime2 = SYSUTCDATETIME();

DECLARE @Result AS table

(

SalesPerson integer PRIMARY KEY,

Median float NOT NULL

);

INSERT @Result

(SalesPerson, Median)

SELECT

d.SalesPerson,

w.Median

FROM

(

SELECT SalesPerson, COUNT(*) AS y

FROM dbo.Sales

GROUP BY SalesPerson

) AS d

CROSS APPLY

(

SELECT AVG(0E + Amount)

FROM

(

SELECT z.Amount

FROM dbo.Sales AS z

WHERE z.SalesPerson = d.SalesPerson

ORDER BY z.Amount

OFFSET (d.y - 1) / 2 ROWS

FETCH NEXT 2 - d.y % 2 ROWS ONLY

) AS f

) AS w (Median);

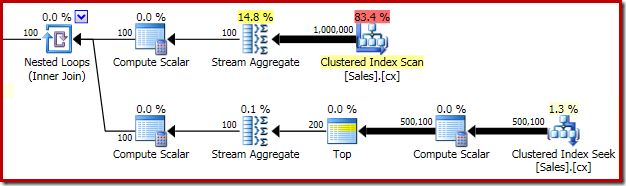

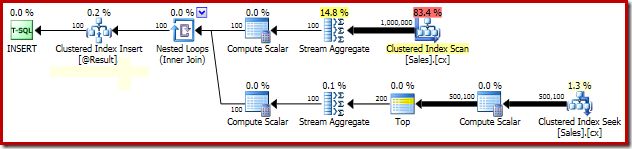

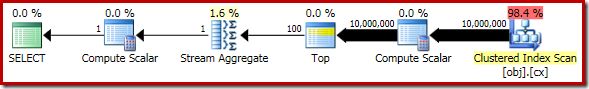

SELECT Peso = DATEDIFF(MILLISECOND, @s, SYSUTCDATETIME());The important parts of the post-execution plan are shown below:

With all required data in memory, this query executes in 580 ms on average on my laptop (running SQL Server 2014 Service Pack 1). The performance of this query can be improved to 320 ms simply by adding a page granularity locking hint to the Sales table in the apply subquery:

DECLARE @s datetime2 = SYSUTCDATETIME();

DECLARE @Result AS table

(

SalesPerson integer PRIMARY KEY,

Median float NOT NULL

);

INSERT @Result

(SalesPerson, Median)

SELECT

d.SalesPerson,

w.Median

FROM

(

SELECT SalesPerson, COUNT(*) AS y

FROM dbo.Sales

GROUP BY SalesPerson

) AS d

CROSS APPLY

(

SELECT AVG(0E + Amount)

FROM

(

SELECT z.Amount

FROM dbo.Sales AS z WITH (PAGLOCK) -- NEW!

WHERE z.SalesPerson = d.SalesPerson

ORDER BY z.Amount

OFFSET (d.y - 1) / 2 ROWS

FETCH NEXT 2 - d.y % 2 ROWS ONLY

) AS f

) AS w (Median);

SELECT Peso = DATEDIFF(MILLISECOND, @s, SYSUTCDATETIME());The execution plan is unchanged (well, aside from the locking hint text in showplan XML of course):

Grouped median locking

The explanation for the dramatic improvement in performance due to the PAGLOCK hint is quite simple, at least initially.

If we manually monitor locking activity while this query executes, we see that without the page locking granularity hint, SQL Server acquires and releases over half a million row level locks while seeking the clustered index. There is no blocking to blame; simply acquiring and releasing this many locks adds a substantial overhead to this query’s execution. Requesting page level locks reduces the locking activity greatly, resulting in much improved performance.

This particular plan’s locking performance issue is confined to the clustered index seek in the plan above. The full scan of the clustered index (used to compute the number of rows present for each sales person) uses page level locks automatically. This is an interesting point. The detailed locking behaviour of the SQL Server engine is not documented in Books Online to any great extent, but various members of the SQL Server team have made a few general remarks over the years, including the fact the unrestricted scans tend to start off taking page locks, whereas smaller operations tend to start off with row locks.

The query optimizer does make some information available to the storage engine, including cardinality estimates, internal hints for isolation level and locking granularity, which internal optimizations may be safely applied and so on. Again, these details are not documented in Books Online. In the end, the storage engine uses a variety of information to decide which locks are required at run time, and at which granularity they should be taken.

As a side note, and remembering that we are talking about a query executing under the default locking read committed transaction isolation level, note that the row locks taken without the granularity hint will not escalate to a table lock in this case. This is because the normal behaviour under read committed is to release the previous lock just before acquiring the next lock, meaning that only a single shared row lock (with its associated higher-level intent-shared locks) will be held at any particular moment. Since the number of concurrently-held row locks never reaches the threshold, no lock escalation is attempted.

OFFSET Single Median

The performance test for a single median calculation uses a different set of sample data, again reproduced from Aaron’s earlier article. The script below creates a table with ten million rows of pseudo-random data:

CREATE TABLE dbo.obj

(

id integer NOT NULL IDENTITY(1,1),

val integer NOT NULL

);

INSERT dbo.obj WITH (TABLOCKX)

(val)

SELECT TOP (10000000)

AO.[object_id]

FROM sys.all_columns AS AC

CROSS JOIN sys.all_objects AS AO

CROSS JOIN sys.all_objects AS AO2

WHERE AO.[object_id] > 0

ORDER BY

AC.[object_id];

CREATE UNIQUE CLUSTERED INDEX cx

ON dbo.obj(val, id);The OFFSET solution is:

DECLARE @Start datetime2 = SYSUTCDATETIME();

DECLARE @Count bigint = 10000000

--(

-- SELECT COUNT_BIG(*)

-- FROM dbo.obj AS O

--);

SELECT

Median = AVG(1.0 * SQ1.val)

FROM

(

SELECT O.val

FROM dbo.obj AS O

ORDER BY O.val

OFFSET (@Count - 1) / 2 ROWS

FETCH NEXT 1 + (1 - @Count % 2) ROWS ONLY

) AS SQ1;

SELECT Peso = DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());The post-execution plan is:

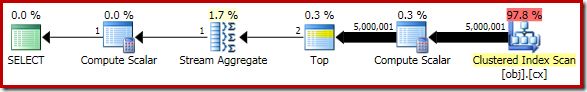

This query executes in 910 ms on average on my test machine. Performance is unchanged if a PAGLOCK hint is added, but the reason for that is not what you might be thinking.

Single median locking

You might be expecting the storage engine to choose page-level shared locks due to the clustered index scan, explaining why a PAGLOCK hint has no effect. In fact, monitoring the locks taken while this query executes reveals that no shared locks (S) are taken at all, at any granularity. The only locks taken are intent-shared (IS) at the object and page level.

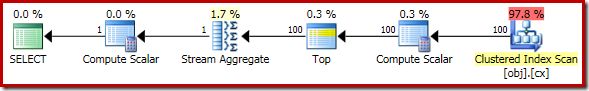

The explanation for this behaviour comes in two parts. The first thing to notice is that the Clustered Index Scan is below a Top operator in the execution plan. This has an important effect on cardinality estimates, as shown in the pre-execution (estimated) plan:

The OFFSET and FETCH clauses in the query reference an expression and a variable, so the query optimizer guesses at the number of rows that will be needed at runtime. The standard guess for Top is one hundred rows. This is a terrible guess of course, but it is enough to convince the storage engine to lock at row granularity instead of at the page level.

If we disable the “row goal” effect of the Top operator using documented trace flag 4138, the estimated number of rows at the scan changes to ten million (still wrong, but in the other direction). This is enough to change the storage engine’s locking granularity decision, so that page-level shared locks (note, not intent-shared locks) are taken:

DECLARE @Start datetime2 = SYSUTCDATETIME();

DECLARE @Count bigint = 10000000

--(

-- SELECT COUNT_BIG(*)

-- FROM dbo.obj AS O

--);

SELECT

Median = AVG(1.0 * SQ1.val)

FROM

(

SELECT O.val

FROM dbo.obj AS O

ORDER BY O.val

OFFSET (@Count - 1) / 2 ROWS

FETCH NEXT 1 + (1 - @Count % 2) ROWS ONLY

) AS SQ1

OPTION (QUERYTRACEON 4138); -- NEW!

SELECT Peso = DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());The estimated execution plan produced under trace flag 4138 is:

Missing locks

Returning to the main example, the hundred-row estimate due to the guessed row goal means that the storage engine elects to lock at the row level. However, we only observe intent-shared (IS) locks at the table and page level. These higher-level locks would be quite normal if we did see row-level shared (S) locks, so where did they go?

The answer is that the storage engine contains another optimization which can skip the row-level shared locks in certain circumstances. When this optimization is applied, the higher-level intent-shared locks are still acquired.

Summary

To summarize, for the single-median query:

- The use of a variable and expression in the

OFFSETclause means the optimizer guesses cardinality. - The low estimate means the storage engine decides on a row-level locking strategy.

- An internal optimization means the row-level S locks are skipped at runtime, leaving only the IS locks at the page and object level.

The single median query would have had the same row-locking performance problem as the grouped median (due to the query optimizer’s inaccurate estimate) but it was saved by a separate storage engine optimization that resulted in only intent-shared page and table locks being taken at runtime.

Grouped Median Revisited

You may be wondering why the Clustered Index Seek in the grouped median test did not take advantage of the same storage engine optimization to skip row-level shared locks. Why were so many shared row locks used, making the PAGLOCK hint necessary? The short answer is that this optimization is not available for INSERT...SELECT queries. If we run the SELECT on its own (i.e. without writing the results to a table), and without a PAGLOCK hint, the row lock skipping optimization is applied:

DECLARE @s datetime2 = SYSUTCDATETIME();

--DECLARE @Result AS table

--(

-- SalesPerson integer PRIMARY KEY,

-- Median float NOT NULL

--);

--INSERT @Result

-- (SalesPerson, Median)

SELECT

d.SalesPerson,

w.Median

FROM

(

SELECT SalesPerson, COUNT(*) AS y

FROM dbo.Sales

GROUP BY SalesPerson

) AS d

CROSS APPLY

(

SELECT AVG(0E + Amount)

FROM

(

SELECT z.Amount

FROM dbo.Sales AS z

WHERE z.SalesPerson = d.SalesPerson

ORDER BY z.Amount

OFFSET (d.y - 1) / 2 ROWS

FETCH NEXT 2 - d.y % 2 ROWS ONLY

) AS f

) AS w (Median);

SELECT Peso = DATEDIFF(MILLISECOND, @s, SYSUTCDATETIME());Only table- and page-level intent-shared (IS) locks are utilised, and performance increases to the same level as when we use the PAGLOCK hint. You will not find this behaviour in the documentation and it could change at any time. Still, it is good to be aware of. Also, in case you were wondering, trace flag 4138 has no effect on the storage engine’s locking granularity choice in this case because the estimated number of rows at the seek is too low (per apply iteration) even with the row goal disabled.

Before drawing conclusions about the performance of a query, be sure to check the number and type of locks it is taking during execution. Though SQL Server usually chooses the ‘right’ granularity, there are times when it can get things wrong, sometimes with dramatic effects on performance.

Thanks for reading.