The Read Committed Isolation Level

Logical Guarantees

Read committed is the second weakest of the four isolation levels defined by the SQL standard. Nevertheless, it is the default isolation level for many database engines, including on-premises SQL Server.

The SQL standard requires that a transaction running under read committed isolation reads only committed data. It expresses this requirement by forbidding the concurrency phenomenon known as a dirty read. A dirty read occurs where a transaction reads data that has been written by another transaction, before that second transaction completes. Another way of expressing this is to say that a dirty read occurs when a transaction reads uncommitted data.

The standard also mentions that a transaction running at read committed isolation might encounter the concurrency phenomena known as non-repeatable reads and phantoms. Though many books explain these phenomena in terms of a transaction being able to see changed or new data items if data is subsequently re-read, this explanation can reinforce the misconception that concurrency phenomena can only occur inside an explicit transaction that contains multiple statements. This is not so. A single statement without an explicit transaction is just as vulnerable to the non-repeatable read and phantom phenomena, as we we will see shortly.

That is pretty much all the standard has to say on the subject of read committed isolation. At first sight, reading only committed data seems like a pretty good guarantee of sensible behaviour, but as always the devil is in the detail. As soon as you start to look for potential loopholes in this definition, it becomes only too easy to find instances where a read committed transaction might not produce the results we expect. Again, we will discuss these in more detail in a moment or two.

Differing physical implementations

There are at least two things that mean the observed behaviour of the read committed isolation level might be quite different on different database engines. First, the SQL standard requirement to read only committed data does not necessarily mean that the committed data read by a transaction will be the most-recently committed data.

A database engine is allowed to read a committed version of a row from any point in the past and still comply with the SQL standard definition. Several popular database products implement read committed isolation this way. Query results obtained under this implementation of read committed isolation might be arbitrarily outdated when compared with the current committed state of the database. We will cover this topic as it applies to SQL Server in the next post in the series.

The second thing I want to draw your attention to is that the SQL standard definition does not preclude a particular implementation from providing additional concurrency-effect protections beyond preventing dirty reads. The standard only specifies that dirty reads are not allowed—it does not require that other concurrency phenomena must be allowed or observed at any given isolation level.

To be clear about this second point, a standards-compliant database engine could implement all isolation levels using serializable semantics if it so chose. Some major commercial database engines also provide an implementation of read committed that goes well beyond simply preventing dirty reads (though none go as far as providing complete Isolation in the ACID sense).

In addition to that, for several popular products read committed isolation is the lowest isolation level available—their implementations of read uncommitted isolation are exactly the same as read committed. This is allowed by the standard, but these sorts of differences do add complexity to the already difficult task of migrating code from one platform to another. When talking about the behaviours of an isolation level, it is usually important to specify the particular platform as well.

As far as I know, SQL Server is unique among the major commercial database engines in providing two implementations of the read committed isolation level, each with very different physical behaviours. This article covers the first of these, locking read committed.

Locking Read Committed

If the database option READ_COMMITTED_SNAPSHOT is off, SQL Server uses a locking implementation of the read committed isolation level where shared locks are taken to prevent a concurrent transaction from concurrently modifying data. Such modifications would require an exclusive lock, which is not compatible with a shared lock.

The key difference between SQL Server locking read committed and locking repeatable read (which also takes shared locks when reading data) is that read committed releases the shared lock as soon as possible. Repeatable read holds these shared locks to the end of the enclosing transaction.

When locking read committed acquires locks at row granularity, the shared lock taken on a row is generally released when a shared lock is taken on the next row. At page granularity, the shared page lock is released when the first row on the next page is read. Unless a lock-granularity hint is supplied with the query, the database engine decides what level of lock granularity to start with. Note that granularity hints are only treated as suggestions by the engine—a less granular lock than requested might still be taken initially. Locks might also be escalated during execution from row or page level to partition or table level depending on system configuration.

The important point here is that shared locks are typically held for only a very short time while the statement is executing. To address one common misconception explicitly, locking read committed does not usually hold shared locks to the end of the statement.

Locking behaviours

The short-term shared locks used by the SQL Server locking read committed implementation provide very few of the guarantees commonly expected of a database transaction by T-SQL programmers. In particular, a statement running under locking read committed isolation:

- Can encounter the same row multiple times.

- Can miss some rows completely.

- Does not provide a point-in-time view of the data.

That list might seem more like a description of the weird behaviours you might associate more with the use of NOLOCK hints, but all these things really can, and do happen when using locking read committed isolation.

Example

Consider the task of counting the rows in a table using the obvious single-statement query. Under locking read committed isolation with row-locking granularity, our query will take a shared lock on the first row, read data, release the shared lock, move on to the next row, and so on until it reaches the end of the structure it is reading. For the sake of this example, assume our query is reading an index b-tree in ascending key order (though it could just as well use a descending order, or any other strategy).

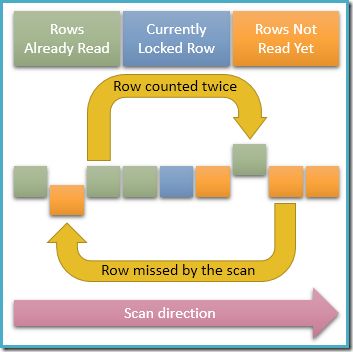

Since only a single row is share-locked at any given moment in time, it is clearly possible for concurrent transactions to modify the unlocked rows in the index our query is traversing. If these concurrent modifications change index key values, they will cause rows to move around within the index structure. With that possibility in mind, the diagram below illustrates two problematic scenarios that can occur:

The uppermost arrow shows a row we have already counted having its index key concurrently modified so that the row moves ahead of the current scan position in the index, meaning the row will be counted twice. The second arrow shows a row our scan has not encountered yet moving behind the scan position, meaning the row will not be counted at all.

Not a point-in-time view

The previous section showed how locking read committed can miss data completely or count the same item multiple times (more than twice, if we are unlucky). The third bullet point in the list of unexpected behaviours stated that locking read committed does not provide a point-in-time view of the data either.

The reasoning behind that statement should now be easy to see. Our counting query, for example, could easily read data that was inserted by concurrent transactions after our query started executing. Equally, data that our query sees might be modified by concurrent activity after our query starts and before it completes. Finally, data we have read and counted might be deleted by a concurrent transaction before our query completes.

Clearly, the data seen by a statement or transaction running under locking read committed isolation corresponds to no single committed state of the database at any particular point in time. The data we encounter might well be from a variety of different points in time, with the only common factor being that each item represented the latest committed value of that data at the time it was read (though it might well have changed or disappeared since).

How serious are these problems?

This all might seem like a pretty woolly state of affairs if you are used to thinking of your single-statement queries and explicit transactions as logically executing instantaneously, or as running against a single committed point-in-time state of the database when using the default SQL Server isolation level. It certainly does not fit well with the concept of isolation in the ACID sense.

Given the apparent weakness of the guarantees provided by locking read committed isolation, you might start to wonder how any of your production T-SQL code has ever worked properly! Of course, we can accept that using an isolation level below serializable means we give up full ACID transaction isolation in return for other potential benefits, but just how serious can we expect these issues to be in practice?

Missing and double-counted rows

These first two issues essentially rely on concurrent activity changing keys in an index structure that we are currently scanning. Note that scanning here includes the partial range scan portion of an index seek as well as the familiar unrestricted index or table scan.

If we are (range) scanning an index structure whose keys are not typically modified by any concurrent activity, these first two issues should not be much of a practical problem. It is difficult to be certain about this though because query plans can change to use a different access method and the new searched index might incorporate volatile keys.

We also have to bear in mind that many production queries only really need an approximate or best-effort answer to some types of question anyway. The fact that some rows are missing or double-counted might not matter much in the broader scheme of things. On a system with many concurrent changes, it might even be difficult to be sure that the result was inaccurate, given that the data changes so frequently. In that sort of situation, a roughly-correct answer might be good enough for the purposes of the data consumer.

No point-in-time view

The third issue (the question of a so-called ‘consistent’ point-in-time view of the data) also comes down to the same sort of considerations. For reporting purposes, where inconsistencies tend to result in awkward questions from the data consumers, a snapshot view is frequently preferable. In other cases, the sort of inconsistencies arising from the lack of a point-in-time view of the data may well be tolerable.

Problematic scenarios

There are also plenty of cases where the listed concerns will be important. For example, if you write code that enforces business rules in T-SQL, you need to be careful to select an isolation level (or take other suitable action) to guarantee correctness. Many business rules can be enforced using foreign keys or constraints, where the intricacies of isolation level selection are handled automatically for you by the database engine. As a general rule of thumb, using the built-in set of declarative integrity features is preferable to building your own rules in T-SQL.

There is another broad class of query that does not quite enforce a business rule per se, but which nevertheless might have unfortunate consequences when run at the default locking read committed isolation level. These scenarios are not always as obvious as the often-quoted examples of transferring money between bank accounts, or ensuring that the balance over a number of linked accounts never drops below zero. For example, consider the following query that identifies overdue invoices as an input to some process that sends out sternly-worded reminder letters:

INSERT dbo.OverdueInvoices

SELECT I.InvoiceNumber

FROM dbo.Invoices AS INV

WHERE INV.TotalDue >

(

SELECT SUM(P.Amount)

FROM dbo.Payments AS P

WHERE P.InvoiceNumber = I.InvoiceNumber

);Clearly we would not want to send a letter to someone who had fully paid their invoice in instalments simply because concurrent database activity at the time our query ran meant we calculated an incorrect sum of payments received. Real queries on real production systems are frequently much more complex than the simple example above, of course.

Final Thoughts

To finish up for today, take a look at the following query and see if you can spot how many opportunities there are for something unintended to occur, if several such queries are run concurrently at the locking read committed isolation level (perhaps while other unrelated transactions are also modifying the Cases table):

-- Allocate the oldest unallocated case ID to

-- the current case worker, while ensuring

-- the worker never has more than three

-- active cases at once.

UPDATE dbo.Cases

SET WorkerID = @WorkerID

WHERE

CaseID =

(

-- Find the oldest unallocated case ID

SELECT TOP (1)

C2.CaseID

FROM dbo.Cases AS C2

WHERE

C2.WorkerID IS NULL

ORDER BY

C2.DateCreated DESC

)

AND

(

SELECT COUNT_BIG(*)

FROM dbo.Cases AS C3

WHERE C3.WorkerID = @WorkerID

) < 3;Once you start looking for all the little ways a query can go wrong at this isolation level, it can be hard to stop. Bear in mind the caveats noted previously around the real need for completely isolated and point-in-time accurate results. It is perfectly fine to have queries that return good enough results, so long as you are aware of the trade-offs you are making by using read committed.

Next time

The next part in this series covers the second physical implementation of read committed isolation available in SQL Server, read committed snapshot isolation (RCSI).