Why Doesn’t Partition Elimination Work?

Given a partitioned table and a simple SELECT query that compares the partitioning column to a single literal value, why does SQL Server read all the partitions when it seems obvious that only one partition needs to be examined?

Example

The following script creates a table, partitioned on the char(3) column named Div, and populates it with 100,000 rows of data:

USE Sandpit;

GO

CREATE PARTITION FUNCTION PF (char(3))

AS RANGE RIGHT FOR VALUES

(

'1', '2', '3', '4', '5', '6', '7', '8', '9',

'A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I',

'J', 'K', 'L', 'M', 'N', 'O', 'P', 'Q', 'R',

'S', 'T', 'U', 'V', 'W', 'X', 'Y', 'Z'

);

GO

CREATE PARTITION SCHEME PS

AS PARTITION PF

ALL TO ([PRIMARY]);

GO

CREATE TABLE dbo.Test

(

Div char(3) NOT NULL,

[Data] char(50) NOT NULL,

)

ON PS(Div);

GO

INSERT dbo.Test WITH (TABLOCKX)

(Div, [Data])

SELECT TOP (100000)

CONVERT(char(3), CONVERT(char(36), NEWID())),

CONVERT(char(50), REPLICATE('X', 50))

FROM sys.columns AS C

CROSS JOIN sys.columns AS C2

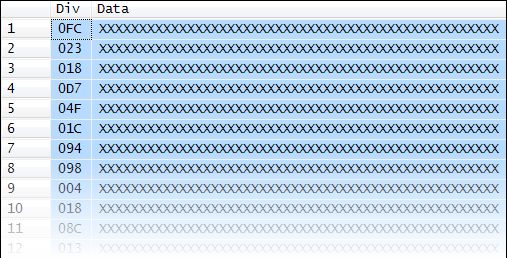

CROSS JOIN sys.columns AS C3;The created data looks like this:

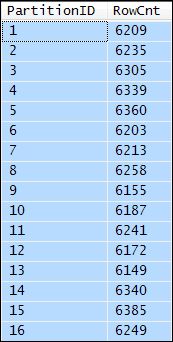

The Div column is pseudo-random so your results will be slightly different, but this is the distribution across partitions I saw:

SELECT

PartitionID = F.PartitionID,

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WITH (TABLOCK)

CROSS APPLY

(

VALUES

($PARTITION.PF(T.Div))

) AS F(PartitionID)

GROUP BY

F.PartitionID

ORDER BY

F.PartitionID;

The partition function defines a total of 36 partitions, but only 16 are used in the example.

The Test Query

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

T.Div = 'ABF';Since Div is the partitioning column, the expectation is that only one partition will be scanned to count the rows that match the WHERE clause predicate.

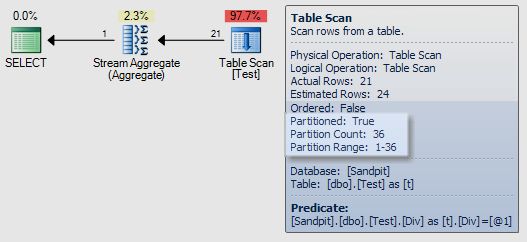

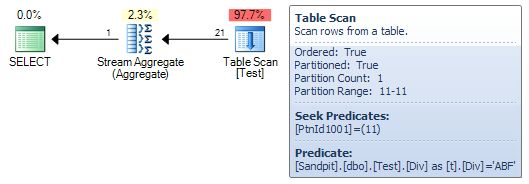

However, the execution plan shows that query execution scans all 36 partitions (a full table scan):

Why did SQL Server not just look at the one partition it knows the value ‘ABF’ must lie in?

Here’s Why:

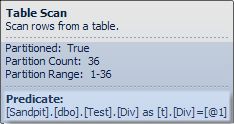

The answer lies in the predicate:

The value ‘ABF’ is nowhere to be seen; it has been replaced by [@1]. This means the query has been considered for simple parameterization by SQL Server to promote query plan reuse.

The presence of the [@1] does not mean that simple parameterization was successful (considered safe for all possible values by the optimizer). We have to check the plan cache to see if an associated prepared plan exists and is reused for different ‘parameter’ values.

It turns out that this time the simple parameterization attempt is considered safe, so a prepared plan is created and is reused for different values. The full parameterized text is:

(@1 varchar(8000))

SELECT COUNT_BIG(*) [RowCnt]

FROM [dbo].[Test] [T]

WHERE [T].[Div]=@1Static Partition Elimination

The reason SQL Server cannot apply static partition elimination (determining the partition number at compile time) is that the plan now contains a parameter. Subsequent executions that reuse the plan might specify a different value for the parameter, so static elimination would not be safe.

It is not possible to disable simple parameterization, but we can prevent it applying to this query in a number of ways. One way is to incorporate an inequality predicate of the form expression <> constant:

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

T.Div = 'ABF'

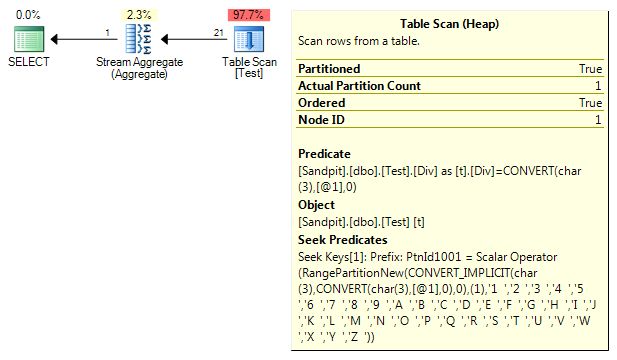

AND 1 <> 2;The redundant 1 <> 2 predicate is completely removed by the optimizer, and the query still qualifies for TRIVIAL optimization as it did previously:

There are a number of interesting things to notice here:

- The query is no longer parameterized (the literal value ‘ABF’ appears).

- Only one partition is touched (partition 11).

- This unindexed heap table has a seek predicate.

- The ‘ordered’ property has changed from

FalsetoTrue.

The problem with this plan is that it cannot be reused for different predicate values—we could end up with a separate cached plan for each possible literal value.

Adding OPTION (RECOMPILE) also defeats simple parameterization and therefore achieves static elimination. In an ad-hoc SQL batch, OPTION (RECOMPILE) also means the query plan will not be cached (stored procedures are not so lucky). The downside is a fresh trip through the optimizer on each call.

This query does qualify for a trivial plan, so optimization time is minimal, but more complex queries with the same desire for partition elimination might well require full optimization on each execution.

Dynamic Partition Elimination

Ideally, we would like a query plan that is reusable but still only touches one partition for any given string literal value.

What we need is dynamic partition elimination. In case the concept is new to you, the optimizer can produce plans that determine the correct partition at execution time (rather than at compile-time, as for static elimination).

To achieve this, the optimizer builds a plan that uses an internal function called RangePartitionNew(). One might think the original simple-parameterized plan ought to have included this feature; the reason it didn’t is quite interesting.

When SQL Server parameterizes a query using simple parameterization, it has to decide on a data type for the parameter. For string literals, it always chooses varchar(8000) for non-Unicode strings and nvarchar(4000) for Unicode strings. If it chose a more specific type like varchar(3), we could end up with the same plan cache bloating and non-reuse problem as for the non-parameterized case.

Remember, there are potentially 8000 different lengths for varchar. Also, prior to SQL Server 2005 SP2, the parameter-declaration part of the query text was not included in the hash value used to decide in which cache bucket to store the plan. This could result in hundreds of same-text plans (with different parameters) occupying the same hash bucket (this does not work well).

Anyway, faced with the parameterized predicate Div = [@1], where Div is char(3) and [@1] is varchar(8000), dynamic partition elimination does not occur because the value of [@1] might be truncated. One way to address this is to explicitly convert the to-be-parameterized string literal to the same type as the target column (char(3) in this case):

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

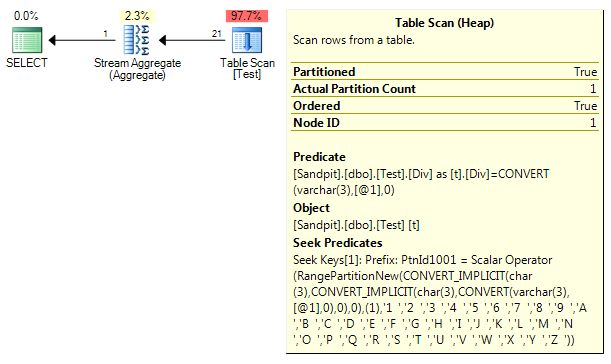

T.Div = CONVERT(char(3), 'CDC');Now we have a parameterized query plan that uses dynamic partition elimination:

This plan has all the interesting features of the static elimination plan, but the heap seek no longer selects a hard-coded partition ID. The single partition searched is the one returned at runtime by the call to RangePartitionNew().

The explicit convert prevents data truncation when the parameterized value is compared with the partition boundary values (defined as char(3) in the partition function). The extra CONVERT_IMPLICIT to char(3) is required for internal reasons, and only appears with non-Unicode partition function types.

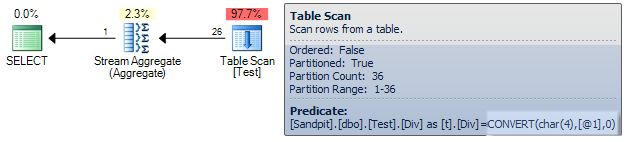

If we explicitly convert to char(4) instead of char(3), the possibility of truncation arises again and we are back to scanning all 36 partitions:

Using varchar(3) instead of char(3) also results in dynamic elimination, which may be surprising:

Implicit Conversions and Precedence

An alternative explanation I have seen for the original behaviour is that the string literal ‘ABF’ is implicitly typed as a varchar(3) by SQL Server and data type precedence means the char(3) Div column has to be implicitly converted to varchar(3) to perform the comparison. This is incorrect, but it is interesting to look at why:

Literal Types

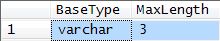

We can see the implicit type of the string literal ‘ABF’ using a query:

SELECT

BaseType = SQL_VARIANT_PROPERTY(V.v, 'BaseType'),

[MaxLength] = SQL_VARIANT_PROPERTY(V.v, 'MaxLength')

FROM

(

VALUES

(

CONVERT(sql_variant, 'ABF')

)

) AS V (v);The output is:

The implied type of ‘ABF’ does seem to be varchar(3), so that much checks out.

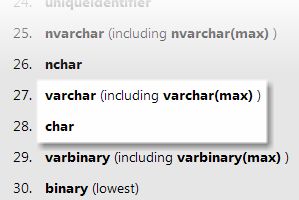

Type Precedence

The data type precedence table in the product documentation shows that varchar does indeed have a higher precedence than char:

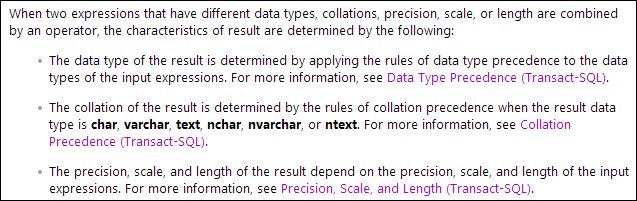

Also, from the documentation entry Data types (Transact-SQL):

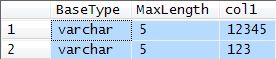

The example below uses a UNION ALL of char(5) and varchar(3) columns:

DECLARE @T1 AS table (col1 char(5) NOT NULL);

DECLARE @T2 AS table (col1 varchar(3) NOT NULL);

INSERT @T1 VALUES ('12345');

INSERT @T2 VALUES ('123');

SELECT

BaseType =

SQL_VARIANT_PROPERTY(CONVERT(sql_variant, T.col1), 'BaseType'),

[MaxLength] =

SQL_VARIANT_PROPERTY(CONVERT(sql_variant, T.col1), 'MaxLength'),

T.col1

FROM

(

SELECT col1 FROM @T1

UNION ALL

SELECT col1 FROM @T2

) AS T;The output is:

The result column col1 has to have a well-defined type. As expected, the result is varchar(5) since varchar has a higher precedence than char, and 5 is the maximum length of the result. All good so far.

Strings Are Special

We get used to seeing (and preferably avoiding) implicit conversions in query plans where two mismatched types are compared:

DECLARE @T1 AS table (col1 integer NULL);

DECLARE @v tinyint;

SELECT T.col1

FROM @T1 AS T

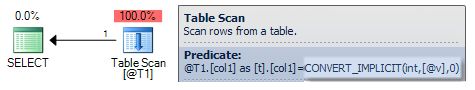

WHERE T.col1 = @v;The execution plan is:

This shows the tinyint variable being implicitly converted to the higher-precedence integer data type, as expected.

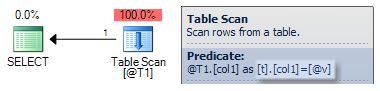

Now let’s do the same thing with varchar and char (perhaps expecting char to be converted to the higher-precedence varchar):

DECLARE @T1 AS table (col1 varchar(5) NULL);

DECLARE @v char(3);

SELECT T.col1

FROM @T1 AS T

WHERE T.col1 = @v;The execution plan is:

Note the lack of an implicit conversion in the predicate.

Important

SQL Server can compare char with varchar without performing a conversion. They are fundamentally the same type, it’s just that one is fixed-length and the other is variable-length.

The SQL Standard requires padding for the character strings used in comparisons so that their lengths match before comparing, thereby removing the only distinction between char and varchar.

The same optimization can apply to a comparison between nchar and nvarchar (though not to Unicode versus non-Unicode comparisons, since they are genuinely different types).

The present case involves a predicate WHERE Div = ‘ABF’. This is a comparison that can use this optimization, so there is no implicit conversion. This is also the reason the explicit conversion to varchar(3) works in the main example—the precedence rules do not need to be invoked, and the data column is not converted from char(3) to varchar(3).

Why Use a Partitioned Heap?

In case you were wondering, the only reason I chose a partitioned heap for this post is just because I could (it’s more interesting in many ways). All the demonstrations work just as well for a partitioned clustered table. A complete script using a clustered partitioned table is below:

USE Sandpit;

GO

CREATE PARTITION FUNCTION PF (char(3))

AS RANGE RIGHT FOR VALUES

(

'1', '2', '3', '4', '5', '6', '7', '8', '9',

'A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I',

'J', 'K', 'L', 'M', 'N', 'O', 'P', 'Q', 'R',

'S', 'T', 'U', 'V', 'W', 'X', 'Y', 'Z'

);

GO

CREATE PARTITION SCHEME PS

AS PARTITION PF

ALL TO ([PRIMARY]);

GO

CREATE TABLE dbo.Test

(

ID integer IDENTITY NOT NULL,

Div char(3) NOT NULL,

[Data] char(50) NOT NULL,

CONSTRAINT PK__dbo_Test_Div_ID

PRIMARY KEY CLUSTERED (Div, ID)

ON PS(Div)

);

GO

INSERT dbo.Test

WITH (TABLOCKX)

(Div, [Data])

SELECT TOP (100000)

CONVERT(char(3), CONVERT(char(36), NEWID())),

CONVERT(char(50), REPLICATE('X', 50))

FROM sys.columns AS C1

CROSS JOIN sys.columns AS C2

CROSS JOIN sys.columns AS C3;

GO

-- Show the distribution of data

SELECT

PartitionID = F.PartitionID,

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WITH (TABLOCK)

CROSS APPLY

(

VALUES

(

$PARTITION.PF(T.Div)

)

) AS F (PartitionID)

GROUP BY

F.PartitionID

ORDER BY

F.PartitionID;

GO

-- Seek on all 36 partitions

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

T.Div = 'ABF';

GO

-- Static elimination = 1 hard-coded partition ID

-- Cached plan unlikely to be reused

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

T.Div = 'ABF'

AND 1 <> 2;

GO

-- Static elimination

-- Compilation overhead

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

T.Div = 'ABF'

OPTION (RECOMPILE);

GO

-- Dynamic elimination with convert to char(3)

-- Reusable plan

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

T.Div = CONVERT(char(3), 'ABF');

GO

-- Dynamic elimination with convert to varchar(3)

-- Data type precedence not applied:

-- varchar(3) convert does not cause the char(3)

-- column Div to be converted (would prevent a seek)

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

T.Div = CONVERT(varchar(3), 'ABF');

GO

-- char(4) means truncation could occur when

-- comparing with the char(3) partition function

-- No partition elimination, seek on 36 partitions

SELECT

RowCnt = COUNT_BIG(*)

FROM dbo.Test AS T

WHERE

T.Div = CONVERT(char(4), 'ABF');

GO

-- Clean up

DROP TABLE dbo.Test;

DROP PARTITION SCHEME PS;

DROP PARTITION FUNCTION PF;Final Thoughts

This post applies to SQL Server 2008 and later, including SQL Server 2017. Partitioned table query execution was very different in 2005. As far as I can tell, SQL Server 2005 did not attempt simple parameterization on a partitioned table.

I was in two minds whether the SQL Server 2008+ behaviour was a bug, an oversight, or an undesirable consequence of fixing something else, so I opened a Connect item (archive link) for it. The report was closed as Won’t Fix:

The reasons for closing this bug is because the scenario reported in the bug are not common enough and due to the risk of implementing a fix it unfortunately does not meet the bar for the current version of the product.

Sean Broadley raised an important practical point in the original comments on this post: The issue highlighted here only occurs when a literal value is used. By correctly parameterizing your queries (including application code and dynamic SQL) you will avoid simple parameterization, achieve dynamic partition elimination, and cache a more reusable query plan.

Acknowledgement

Thanks to Jonathan Kehayias (blog | X) who first altered me to the MSDN forum question (archive link) that prompted this post.