Undocumented Query Plans: Equality Comparisons

Finding Changed Rows

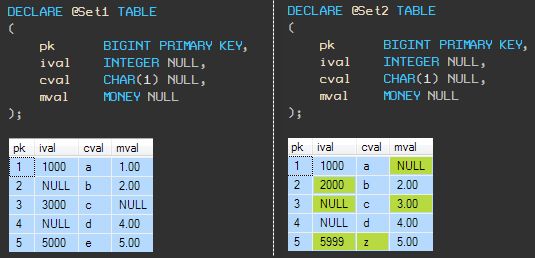

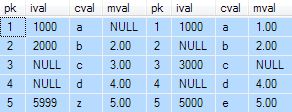

The diagram below shows two data sets, with differences highlighted:

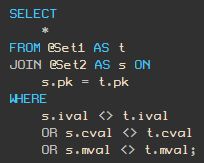

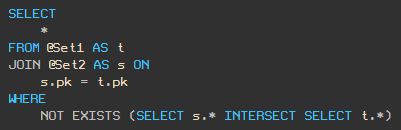

To find changed rows using T-SQL, we might write a query like this:

The logic is clear: Join rows from the two sets together on the primary key column, and return rows where a change has occurred in one or more data columns.

Unfortunately, this query only finds one of the expected four rows:

The problem is that our query does not correctly handle nulls.

The ‘not equal to’ operators <> and != do not evaluate to TRUE if one or both of the expressions concerned is NULL.

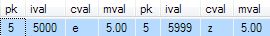

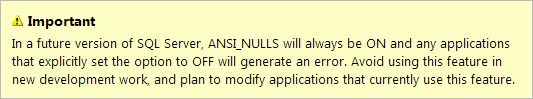

In this example, that statement is always true because we are comparing column references. In other circumstances, the behaviour might depend on the ANSI_NULLS setting. I am not going to go into that here, partly because new code should be written to assume SET ANSI_NULLS ON:

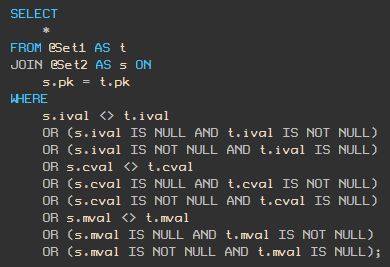

One obvious way to handle the NULLs in our example is to write all the conditions out in full:

That query produces the correct results (only the row with PK = 4 is identical in both sets):

Even with just three columns to check, the query is already getting quite long. It is also quite easy to miss one of the combinations or to misplace a parenthesis.

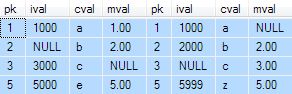

In an attempt to reduce the size of the query, we might be tempted to use ISNULL or COALESCE:

The idea there is to replace any NULLs in the data with a particular non-null value that allows the comparison to work as we expect.

This produces the correct result (with the test data) but there are a number of reasons to dislike this approach.

For one thing, we have to be careful to choose a special replacement value that can never appear in the real data, now or at any time in the future. Taking this approach is no different, in principle, from choosing not to store NULLs in the first place, and using the chosen special value instead.

Leaving the ‘to null or not to null’ debate to one side, another issue is that COALESCE returns a value typed according to the input expression that has the highest data type precedence.

Many times, this will not matter, but it is possible to introduce subtle bugs this way. Using ISNULL instead would avoid this issue by ensuring that the data type of the first expression is used, but the problem of choosing appropriate special values remains.

The final objection I have to this method is a bit more subjective: although the query looks simpler than before, COALESCE is just shorthand for a CASE expression.

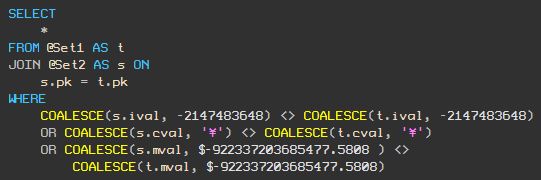

Let’s compare the execution plans for the two queries:

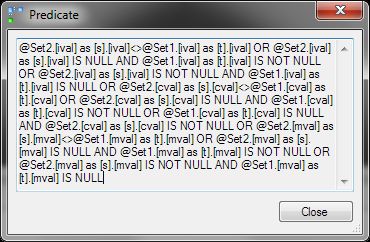

That’s the plan for the query with all the conditions written out in full. The Filter operator contains this mess:

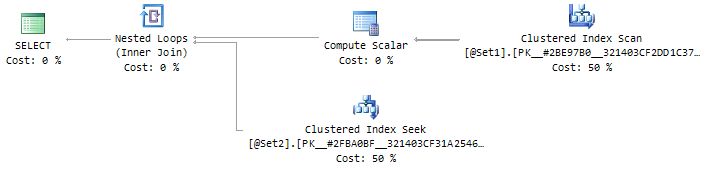

The COALESCE form execution plan looks like this:

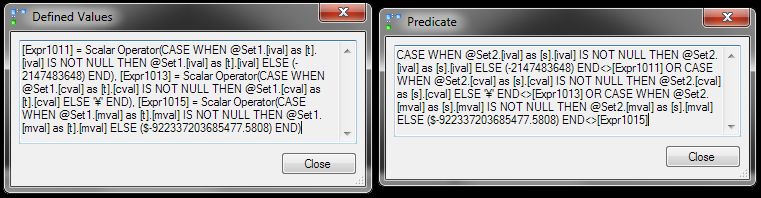

Now, the complexity is split between two operators. The Compute Scalar contains the definitions shown below on the left, and the Clustered Index Seek contains the residual predicate shown on the right:

Using ISNULL instead makes some difference—the graphical plan is visually identical to that obtained by using COALESCE, but the defined values and residual predicate are somewhat more concise:

An Alternative Syntax

We are looking for rows that join based on the value of the PK column, but which contain a difference in at least one column.

Another way to state that is to say:

For each row that joins, the intersection of the two rows should be empty.

If the two rows are identical, the intersection of the two rows will be a single row. Conversely if the two rows are different in any way, the intersection will be empty.

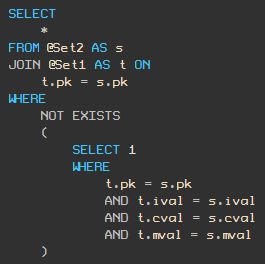

Writing that logic in T-SQL results in this query form:

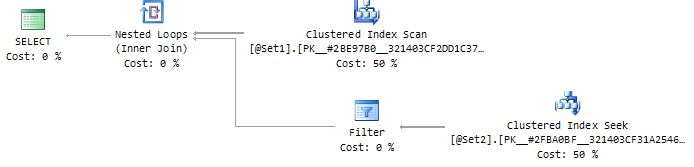

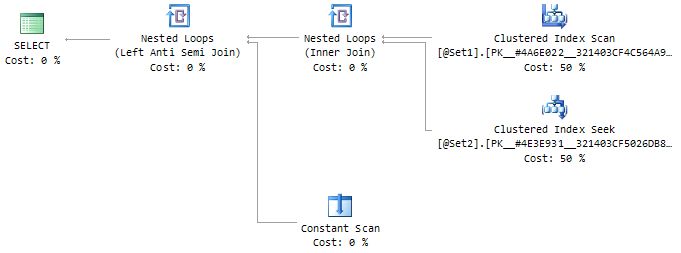

The query accounts for NULLs correctly, and produces the correct result. The query plan looks like this:

There are no surprises in the Clustered Index Scan, Clustered Index Seek, or Inner Join. In particular, none of these operators define any expressions or apply any residual predicates.

The seek is the one we would expect: It seeks to the row with the matching PK column value.

Looking at the Constant Scan reveals nothing, literally. This operator produces a single in-memory row with no columns. There really is nothing to see there, so we will move on to the last remaining operator: the Left Anti Semi Join.

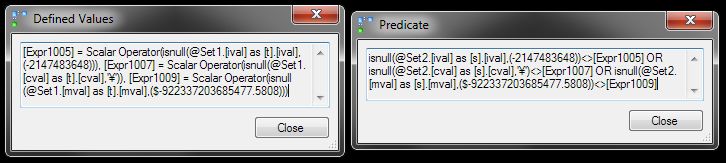

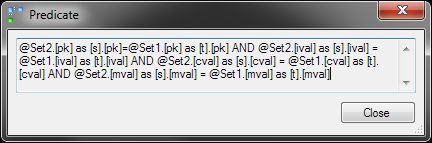

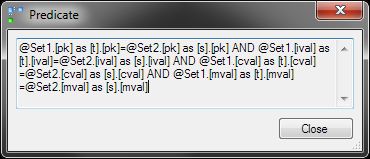

If you were expecting to see complex logic similar to the CASE expressions seen earlier, prepare for a disappointment. The anti semi join contains the following:

Aside from a (redundant) check that the two PK columns match, this predicate just checks that all three data column values match, using an equality (=) comparison. (As a side note, we can avoid the redundant check on PK values by specifying just the data columns in the INTERSECT sub-query, rather than using the star * syntax).

To see how this works, consider that a left anti semi join passes rows through where no row is found on the inner input.

In this case, a row (with no columns) is always provided by the Constant Scan, but the predicate shown above is applied to it before the anti semi join decides whether to pass the source row on or not.

If all the conditions evaluate to TRUE, the no-column row from the Constant Scan is retained. The anti semi join finds that row on its inner input, and the source row does not pass on to the query output.

The net effect is that if the two rows match in all columns, no row appears on the output. In all other cases (where at least one difference exists) the current row is returned by the execution plan.

This is the correct semantic, so the query returns the correct result.

NULL handling trickery

At this point, you might be wondering how this query plan manages to handle NULLs correctly.

Consider the rows in the source tables with PK = 4. Both rows are identical, but only if the NULLs present in the ival column compare equal.

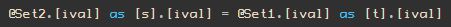

The relevant part of the predicate shown above is:

In the case where both columns contain NULL, we would expect this equality comparison to return UNKNOWN, not the TRUE needed to ensure that the anti semi join does not pass the source row to the output.

In other words, unless this equality comparison is doing something unusual, we would expect the query to incorrectly return a row for PK = 4 because the NULLs in the ival column should not compare equal.

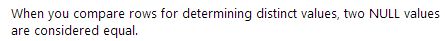

The reason it works lies in the way INTERSECT handles NULLs. According to the documentation:

That explains why the INTERSECT query produces correct results, but it does not say how the query plan achieves this.

Before we see the details of that, let’s look at what happens if we try to write the query using the same logic as the INTERSECT plan:

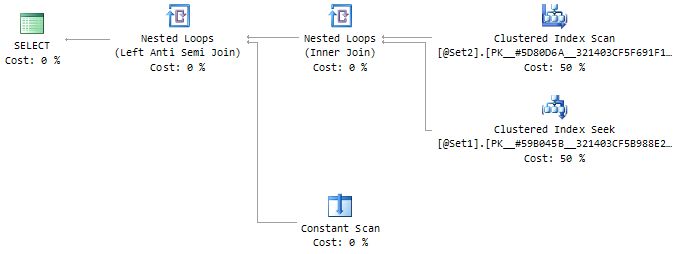

That query produces the exact same graphical query plan as the INTERSECT query:

Even the predicate on the Anti Semi Join is identical:

Despite the identical plan, this new query produces wrong results! It includes the row with PK = 4 in the output, due to the problem comparing the NULLs in those rows:

The Answer

Although the graphical query plan (and even the extra detail available in the SSMS Properties window) shows no difference between the INTERSECT and NOT EXISTS queries, there is a difference—one that implements the different comparison semantics involved.

In the INTERSECT form, the equality comparison must compare two NULLs as equal. In the NOT EXISTS form, we are using a regular = comparison, one that should return UNKNOWN when two NULLs are compared.

To see this difference, we have to look deeper into the execution plan than the graphical form or Properties window can take us.

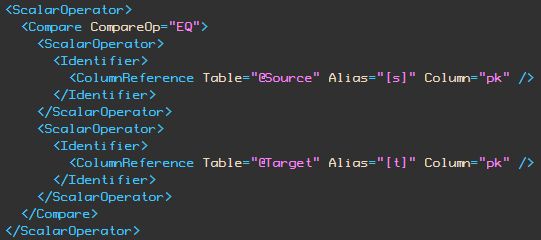

Inspecting the XML behind the graphical plan, we see the following logic in both cases for the test on the PK column values:

Notice the compare operation is EQ—a test for equality between the two column references. The EQ test does not return true for NULLs.

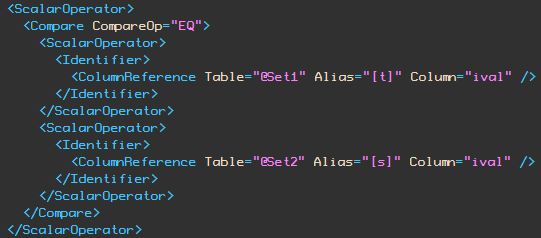

In the NOT EXISTS form of the query, the other columns are compared in exactly the same way, using EQ comparisons. For example, this is the test on the ival column:

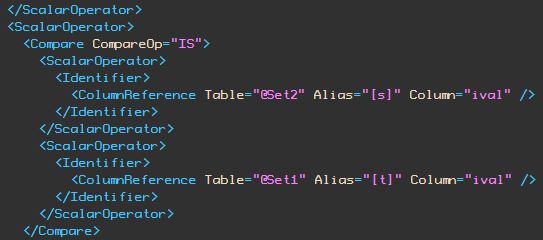

Now look at the XML for the ival column comparison in the INTERSECT query:

Now the compare operation is shown as IS instead of EQ.

This is the reason that NULLs compare equal in the INTERSECT test. It is using the comparison semantic familiar to T-SQL users from expressions like WHERE x IS NULL.

This is the SQL language IS DISTINCT FROM feature—implemented in the query processor, but not available in the T-SQL language before SQL Server 2022.

Thanks for reading.

Complete test script

USE Sandpit;

DECLARE @Set1 table

(

pk bigint PRIMARY KEY,

ival integer NULL,

cval char(1) NULL,

mval money NULL

);

DECLARE @Set2 TABLE

(

pk bigint PRIMARY KEY,

ival integer NULL,

cval char(1) NULL,

mval money NULL

);

INSERT @Set1

(pk, ival, cval, mval)

VALUES

(1, 1000, 'a', $1),

(2, NULL, 'b', $2),

(3, 3000, 'c', NULL),

(4, NULL, 'd', $4),

(5, 5000, 'e', $5);

INSERT @Set2

(pk, ival, cval, mval)

VALUES

(1, 1000, 'a', NULL),

(2, 2000, 'b', $2),

(3, NULL, 'c', $3),

(4, NULL, 'd', $4),

(5, 5999, 'z', $5);

-- Incorrect results, doesn't account for NULLs

SELECT

*

FROM @Set1 AS t

JOIN @Set2 AS s ON

s.pk = t.pk

WHERE

s.ival <> t.ival

OR s.cval <> t.cval

OR s.mval <> t.mval;

-- Correct, but verbose and error-prone

SELECT

*

FROM @Set1 AS t

JOIN @Set2 AS s ON

s.pk = t.pk

WHERE

s.ival <> t.ival

OR (s.ival IS NULL AND t.ival IS NOT NULL)

OR (s.ival IS NOT NULL AND t.ival IS NULL)

OR s.cval <> t.cval

OR (s.cval IS NULL AND t.cval IS NOT NULL)

OR (s.cval IS NOT NULL AND t.cval IS NULL)

OR s.mval <> t.mval

OR (s.mval IS NULL AND t.mval IS NOT NULL)

OR (s.mval IS NOT NULL AND t.mval IS NULL);

-- COALESCE: Correct results, but problematic

SELECT

*

FROM @Set1 AS t

JOIN @Set2 AS s ON

s.pk = t.pk

WHERE

COALESCE(s.ival, -2147483648) <> COALESCE(t.ival, -2147483648)

OR COALESCE(s.cval, '¥') <> COALESCE(t.cval, '¥')

OR COALESCE(s.mval, $-922337203685477.5808 ) <> COALESCE(t.mval, $-922337203685477.5808);

-- ISNULL: Correct results, but problematic

SELECT

*

FROM @Set1 AS t

JOIN @Set2 AS s ON

s.pk = t.pk

WHERE

ISNULL(s.ival, -2147483648) <> ISNULL(t.ival, -2147483648)

OR ISNULL(s.cval, '¥') <> ISNULL(t.cval, '¥')

OR ISNULL(s.mval, $-922337203685477.5808 ) <> ISNULL(t.mval, $-922337203685477.5808);

-- INTERSECT:

-- Correct results in a compact form

SELECT

*

FROM @Set1 AS t

JOIN @Set2 AS s ON

s.pk = t.pk

WHERE

NOT EXISTS (SELECT s.* INTERSECT SELECT t.*);

-- NOT EXISTS:

-- Same query plan, but wrong results!

SELECT

*

FROM @Set2 AS s

JOIN @Set1 AS t ON

t.pk = s.pk

WHERE

NOT EXISTS

(

SELECT 1

WHERE

t.pk = s.pk

AND t.ival = s.ival

AND t.cval = s.cval

AND t.mval = s.mval

);