The Impact of Non‑Updating Updates

Introduction

From time to time, I encounter a system design that always issues an UPDATE against the database after a user has finished working with a record—without checking to see if any of the data was in fact altered. The prevailing wisdom seems to be “the database will sort it out”. This raises an interesting question: How smart is SQL Server in these circumstances?

In this article, I’ll look at a generalisation of this problem: What is the impact of updating a column to the value it already contains?

The specific questions I want to answer are:

- Does this kind of

UPDATEgenerate any log activity? - Do data pages get marked as dirty (and so eventually get written out to disk)?

- Does SQL Server bother doing the update at all?

To answer each of these questions, we will need a test database and way to measure each of the potential effects.

The Test Database

The following code creates a table with a single row of data, in a brand new database:

USE master;

GO

CREATE DATABASE [5B4F379C-7B88-45C8-9CF2-662BC4CCCDE7];

GO

ALTER DATABASE [5B4F379C-7B88-45C8-9CF2-662BC4CCCDE7]

SET ALLOW_SNAPSHOT_ISOLATION OFF;

GO

ALTER DATABASE [5B4F379C-7B88-45C8-9CF2-662BC4CCCDE7]

SET READ_COMMITTED_SNAPSHOT OFF;

GO

USE [5B4F379C-7B88-45C8-9CF2-662BC4CCCDE7];

GO

CREATE TABLE dbo.SomeTable

(

some_column integer NOT NULL,

some_data integer NOT NULL,

);

GO

INSERT dbo.SomeTable

(some_column, some_data)

VALUES

(1, 999);Measuring log activity

To assess the impact on the transaction log, we can use the undocumented (but widely known and used) system table-valued function sys.fn_dblog—a handy function that provides a way to read the active part of the transaction log. To make it easy to see just the log records generated by our test UPDATE statement, we’ll run a CHECKPOINT immediately before the test:

CHECKPOINT;

GO

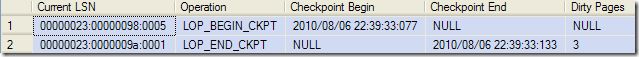

SELECT

L.[Current LSN],

L.[Operation],

L.[Checkpoint Begin],

L.[Checkpoint End],

L.[Dirty Pages]

FROM sys.fn_dblog (NULL, NULL) AS L;Sample output:

As you can see, the transaction log just contains records concerning the CHECKPOINT operation itself. We can now run test UPDATE queries and examine the log again afterwards to see the changes.

If you were wondering if the recovery model of the new database makes any difference here, the answer is ‘no’. All newly-created databases run in log auto-truncate mode. If the recovery model is full or bulk-logged, this continues until the first full backup is taken. A database running the simple recovery model always operates in log auto-truncate mode.

Measuring Dirty Pages

The dynamic management view sys.dm_os_buffer_descriptors shows the pages held in SQL Server’s buffer pool. One of the columns returned from this view (is_modified) shows if a page has been changed since it was read from disk.

We can write a query to show the state of all pages associated with our test table:

SELECT

BUF.database_id,

BUF.file_id,

BUF.page_id,

BUF.page_type,

BUF.is_modified,

AU.type_desc

FROM sys.dm_os_buffer_descriptors AS BUF

JOIN sys.allocation_units AS AU

ON AU.allocation_unit_id = BUF.allocation_unit_id

JOIN sys.partitions AS PAR

ON AU.container_id =

CASE

WHEN AU.type = 2 THEN PAR.partition_id

ELSE PAR.hobt_id

END

WHERE

BUF.database_id = DB_ID()

AND PAR.object_id = OBJECT_ID(N'dbo.SomeTable', N'U');The CHECKPOINT we run to clear the log will also ensure that all dirty pages are written to disk.

After running a test UPDATE, we can run the above script to see if any pages were dirtied (and so will need to be flushed to disk at some point).

Measuring Update Activity

Finally, we need a way to determine if SQL Server performed the update, or skipped it entirely. We can use the sys.dm_db_index_operational_stats dynamic management view for this.

Despite the name, it works with heaps as well as clustered tables:

SELECT

IOS.index_id,

IOS.partition_number,

IOS.leaf_insert_count,

IOS.leaf_update_count,

IOS.leaf_delete_count,

IOS.leaf_ghost_count

FROM sys.dm_db_index_operational_stats

(

DB_ID(),

OBJECT_ID(N'dbo.SomeTable', N'U'),

NULL,

NULL

) AS IOS;This will show the number of index (or heap) operations performed at the leaf level.

A Simple Update

There are two basic ways to write an UPDATE that does not change the stored column values.

-

We can explicitly set a column to the value we know is stored there.

UPDATE dbo.SomeTable SET some_data = 999; -

We can write an statement that simply sets a column equal to itself:

UPDATE dbo.SomeTable SET some_data = some_data;

Heap

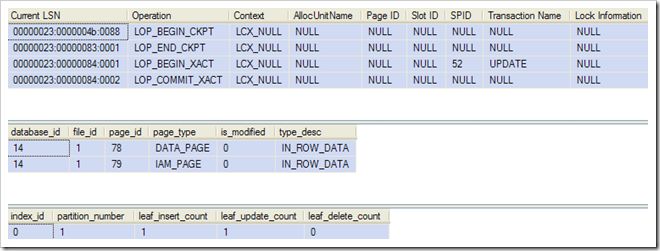

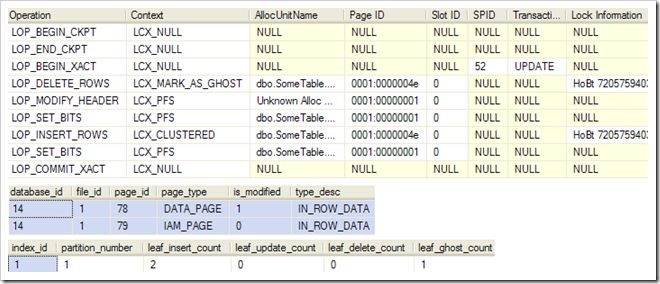

In this test, both syntaxes produce the same results:

The logging test results show that two log records were written, to log the start and end of the implicit transaction wrapping the UPDATE statement. There are no data modification log records. The buffer pool test shows that neither of the two pages associated with the table were marked dirty by the UPDATE. No buffer pages will need to be flushed to disk as a result of the update. The index operations test shows one insert (caused by the initial data load) and one update operation against index zero (the heap).

This all confirms that the update was physically performed (rather than skipped altogether) when using either UPDATE syntax.

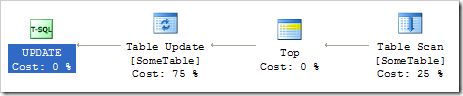

The query plans for the two syntax variations are not quite identical, though they do look the same at first glance:

When we specify a constant value (999) in the SET clause, the Table Scan iterator does not pass any column references up the plan. It just passes a bookmark so the Table Update knows which row to modify. When we use the column name syntax in the SET clause, the Table Scan passes the same bookmark plus the value currently stored in the some_data column.

Clustered table

If we perform the same tests, but with a clustered index on some_column, we get the same results—except the index_id in the index operations test is one instead of zero. The logging and buffer pool results are identical.

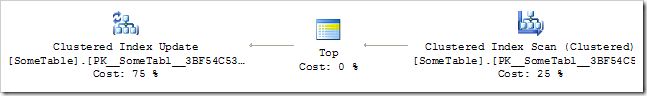

The query plan is naturally slightly different:

In addition to the different icons, the Clustered Index Scan passes a reference to some_column to serve as the bookmark. Essentially, though, it’s the same plan.

Updating Both Columns

Things get more interesting if we update both the some_column and some_data columns:

UPDATE dbo.SomeTable

SET some_column = 1,

some_data = 999;

-- or

UPDATE dbo.SomeTable

SET some_column = some_column,

some_data = some_data;Heap

When our test table is configured as a heap table, we get the same results as before. Just the implicit transaction is logged, the buffer pool pages are not marked dirty, and the index operations test shows that a physical update was performed. This remains the case even if we create a nonclustered primary key on some_column.

Clustered table

Performing the same tests on a clustered table produces a rather different output:

The logging test shows that SQL Server now logs marking the existing row as a ghost and inserting a new row, along with adjustments to the Page Free Space (PFS) record to record the fact that the page now contains at least one ghost record. The buffer pool test shows that the data page associated with the clustered table is now marked as dirty. The 8KB page will be written to disk shortly (by the checkpoint process, the lazy writer, or an about-to-suspend worker thread). The index operations test results show one row-ghosting operation and an insert, as indicated in the transaction log records.

The important observation is:

A clustered table will always produce full logging and dirty buffer pages if (any part of) the clustering key is updated.

Effects of a LOB Column

For our third test scenario, we’ll add an nvarchar(max) column to the table:

CREATE TABLE dbo.SomeTable

(

some_column integer NOT NULL,

some_data integer NOT NULL,

big_data nvarchar(max) NOT NULL,

);The presence of the LOB column does not affect the previous results (whether we ‘update’ the LOB column or not) with one important exception: If the LOB column contains more than 8000 bytes of data the effects seen depend on the syntax we use. If the SET column_name = column_name syntax is used, we can still see minimal transaction logging and clean buffer pool pages.

Let’s modify the test rig to use the explicit-value syntax instead:

INSERT dbo.SomeTable

(

some_column,

some_data,

big_data

)

VALUES

(

1,

999,

REPLICATE(CONVERT(nvarchar(max), N'X'), 4001)

);

GO

UPDATE dbo.SomeTable

SET big_data =

REPLICATE(CONVERT(nvarchar(max), N'X'), 4001);That produces the fully-logged, dirty-page-creating behaviour, regardless of whether the table is a heap or clustered, but only if the LOB data exceeds 8000 bytes (4000 Unicode characters in the above example). The only visible difference in the query plan is an extra Compute Scalar iterator, used to add in the result of the REPLICATE expression.

Row Versioning

If either of the database options ALLOW_SNAPSHOT_ISOLATION or READ_COMMITTED_SNAPSHOT are ON even if neither is being used we always get the fully-logged, page-dirtying behaviour, regardless of anything that has been said so far. This is because the engine starts generating and storing row versions for all data modification operations. Adding or updating the row versioning information on a page inevitably results in page changes which must be logged and flushed to disk at some stage.

Summary

SQL Server contains a number of optimizations to avoid unnecessary logging or page flushing when processing an UPDATE operation that will not result in any change to the persistent database.

-

Non-updating updates to a clustered table generally avoid extra logging and page flushing, unless a column that forms (part of) the clustering key is affected by the update operation.

-

If any part of the cluster key is ‘updated’ to the same value, the operation is logged as if data had changed, and the affected pages are marked as dirty in the buffer pool. This is a consequence of the conversion of the

UPDATEto a delete-then-insert operation. -

Heap tables behave the same as clustered tables, except they do not have a cluster key to cause any extra logging or page flushing.

This remains the case even where a non-clustered primary key exists on the heap. Non-updating updates to a heap therefore generally avoid the extra logging and flushing (but see below).

-

Both heaps and clustered tables will suffer the extra logging and flushing for any row where a LOB column containing more than 8000 bytes of data is updated to the same value using any syntax other than

SET column_name = column_name. -

Enabling either type of row versioning isolation level on a database always causes the extra logging and flushing. This occurs regardless of the isolation level in effect for the update transaction.

Further Reading

SQL Server 2005 and later include a further important optimization that can eliminate the overhead of updating non-clustered indexes where column values have not changed. See Optimized Non-clustered Index Maintenance by Craig Freedman.

Acknowledgements

My thanks to Josef Richberg and Mladen Prajdic for their contributions to the #sqlhelp discussion that prompted this blog entry.