Another Interesting MERGE Bug

I came across a Connect item (archive link) describing how using a combination of relatively new features can produce incorrect results, or even an access violation inside SQL Server.

Note: This bug was fixed in SQL Server 2012

Reproducing the bug

We will look first at how MERGE can produce an incorrect output.

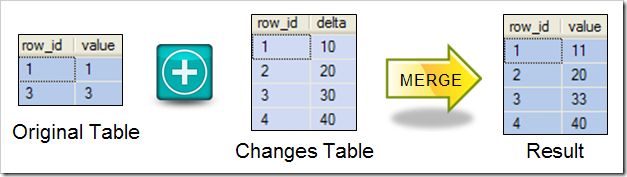

We will need two tables: One that contains two rows of existing data, and a second that contains four rows of change information.

The process looks like this in outline:

This is a classic MERGE (or ‘upsert’) requirement: If the row already exists, the new value is added to it, otherwise a new row is inserted.

Here’s the code to generate the sample tables and data:

DECLARE @Target TABLE

(

row_id integer NOT NULL PRIMARY KEY,

[value] integer NOT NULL

);

DECLARE @Delta TABLE

(

row_id integer NOT NULL PRIMARY KEY,

delta integer NOT NULL

);

-- Existing records (1 & 3)

INSERT @Target

(row_id, [value])

VALUES

(1, 1),

(3, 3);

-- Change table:

-- Updates rows 1 & 3

-- Inserts rows 2 & 4

INSERT @Delta

(row_id, delta)

VALUES

(1, 10),

(2, 20),

(3, 30),

(4, 40);We can easily write a MERGE query to perform the actions needed. Let’s also include an OUTPUT clause to show the effects on each row:

MERGE @Target AS T

USING @Delta AS D

ON D.row_id = T.row_id

WHEN MATCHED

THEN UPDATE SET T.[value] += D.delta

WHEN NOT MATCHED BY TARGET

THEN INSERT VALUES

(D.row_id, D.delta)

OUTPUT

D.row_id,

$action,

DELETED.[value] AS old_value,

INSERTED.[value] AS new_value;If we examine the @Target table after executing this query, we get exactly the results shown in the diagram. All good so far.

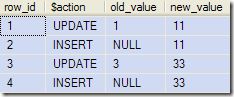

The problem comes when we look at the rows produced by the OUTPUT clause:

When the action is INSERT the new_value column contains an incorrect value. Instead of reporting the value actually inserted into the target table, it repeats the value associated with the last UPDATE operation.

If we change the data so that multiple INSERTs are performed after the UPDATE, all the INSERTs get the same new_value associated with the prior UPDATE.

The @Target table always contains the correct data. It’s just that the OUTPUT clause disagrees.

The Query Plan

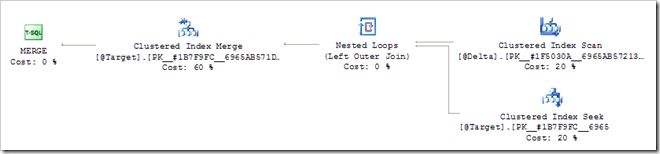

The original Connect item states that this issue only affects table variables, but there is more to see here. Let’s start by taking a look at the execution plan for the MERGE statement above:

I have omitted several Compute Scalar iterators to make it easier to see what’s going on.

There’s a scan of the @Delta table, followed by an Insert to the @Target table, and finally a Merge into the @Target table.

If you are familiar with the sort of plans MERGE normally produces, you might find that arrangement a bit odd. Even if MERGE is new to you, you might think that an Insert followed by a Merge is surprising.

In a normal MERGE, we would expect to see an outer join between the source and target tables, followed by a Merge:

SQL Server performs an outer join so that the Clustered Index Merge knows whether the current row already exists in the target table or not. If it exists, it is updated, otherwise a new row is inserted.

The optimization

The idea behind the optimization is to read a row from the @Delta table and immediately try to insert it into the @Target table. If that succeeds, the row didn’t already exist, and no further work is required. In particular, the Merge operator is not executed.

If the row did exist, the PRIMARY KEY on @Target will cause a duplicate key violation to occur. The relational engine suppresses that error and execution continues on down to the Clustered Index Merge iterator, which performs the necessary UPDATE using the conflicting row found by the insert.

This is a very useful (and perfectly safe) query optimization if the conditions are right. There are rather a lot of conditions, the most important of which are:

- The target of the merge must have a suitable clustered unique or primary key constraint.

- The changes (delta) table must have some sort of unique constraint (can be non-clustered) on the join column.

- The plan must be already using a nested loops join.

- The

MERGEmust include aNOT MATCHEDcondition plus at least oneMATCHEDclause. - Equivalent columns in the two tables must have exactly the same type-–-no implicit conversions allowed.

- There can be no chance of a constraint violation-–-including

NOT NULLconstraints. - The query must not update the clustered key.

If any of the required conditions do not apply, the optimization is not applied and the query plan retains the outer join. You can read more about this optimization in part 3 of my Halloween Protection series.

Bug Conditions

In order for the MERGE to return incorrect results:

- The

MERGEtarget must be a table variable (the delta table can be anything). - The optimizer must produce a final plan that includes the transformation described above.

- The

MERGEstatement must include anOUTPUTclause that references the inserted pseudo table. - The query has to perform an

INSERTrow action after anUPDATE(in clustered index order).

Importantly, it is only rows that result in an INSERT that cause problems. If only UPDATE or DELETE operations result from the MERGE, everything works perfectly—even with a table variable as the target.

Producing an access violation

The example data given for the @Delta table was carefully constructed to ensure that incorrect results were returned. With different sample data, we will get an Access Violation (AV) which terminates the connection and produces a stack dump on the SQL Server. The simplest way (based on the test rig above) is to change the @Delta table contents to just include a single row that will result in an INSERT:

INSERT @Delta

(row_id, delta)

VALUES

(2, 2);There is no row in @Target with row_id = 2, so this results in an INSERT.

To expand our test rig to encompass DELETE operations, we need to add an extra condition to the original MERGE statement. The new code will delete a record if its new value is zero (after applying the delta):

MERGE @Target AS T

USING @Delta AS D

ON D.row_id = T.row_id

WHEN

-- New

MATCHED

AND T.[value] = -D.delta

THEN DELETE

WHEN MATCHED

THEN UPDATE

SET T.[value] += D.delta

WHEN NOT MATCHED BY TARGET

THEN INSERT VALUES

(D.row_id, D.delta)

OUTPUT

D.row_id,

$action,

DELETED.value AS old_value,

INSERTED.value AS new_value;With that modification in place, the following values in the @Delta table will update the row with row_id = 1 and delete the one with row_id = 3:

INSERT @Delta

(row_id, delta)

VALUES

(1, 10),

(3, -3);This combination works correctly because there is no INSERT operation. Any number of UPDATEs and DELETEs can be performed without issue, so long as no INSERTs occur.

Workarounds

The easiest workarounds involve preventing the query optimization from happening in the first place. This is quite easy since there are so many conditions to satisfy.

One way is to prevent the plan from using a nested loops join with an OPTION (HASH JOIN, MERGE JOIN) hint. There are many other alternatives in the same vein, of course.

A second workaround is to apply trace flag 8758. Unfortunately that disables a number of optimizations, not just the one above, so it’s not really recommended.

A third workaround is to remove the unique key contraint on the target table.

Final Thoughts

This isn’t a bug that will affect everyone, but it does show that your chances of turning up a bug increase as you push the limits of the optimizer and new features like MERGE. It will be interesting to see how the Connect item goes. Will Microsoft fix this, or will they just say that MERGE was never intended for use with table variables? We’ll have to wait and see.

My thanks to SQLServerCentral user ALZDBA for reporting this problem. Personally, I see the prospect of returning incorrect data from the OUTPUT clause as more serious than the access violation. The OUTPUT clause is often used to record audit information, so producing incorrect data with no error is highly undesirable.

Update

Microsoft did fix this bug in SQL Server 2012 (but not 2008 R2) and responded to the Connect item with:

Posted by Microsoft on 11/4/2010 at 1:17 PM

The problem is that we were trying to apply a particular optimization that doesn’t work with table variables. The bug has been fixed for the next release.

You can work around the issue by disabling the unique key constraint on your table variable; that will disable the faulty optimization. If that is not acceptable, and you need the full fix now, please contact customer support.

Andrew Richardson Developer, SQL Server Query Optimizer.